In a landmark study, the ARTEMIS agent probed a network of thousands of devices and uncovered real vulnerabilities for a fraction of the cost of a six-figure salary, signaling a new era in cybersecurity.

- Superior Performance: In a head-to-head test, the AI agent ARTEMIS outperformed nine out of 10 professional human hackers, finding nine valid vulnerabilities with an 82% valid submission rate.

- Cost Efficiency: Running the agent cost approximately $18 per hour, far below the roughly $60-per-hour equivalent (based on a 40-hour work week) of a professional penetration tester’s typical $125,000 annual salary.

- Parallel Processing Power: Unlike human testers, who tend to work sequentially, ARTEMIS used “sub-agents” to investigate multiple security flaws simultaneously, though it still struggled with graphical user interfaces.

The landscape of cybersecurity is undergoing a seismic shift, driven by artificial intelligence that is not only cheaper than human experts but, in some cases, more effective. A new study led by researchers at Stanford University has provided a stark illustration of this new reality. For 16 hours, an AI agent named ARTEMIS prowled the university’s computer science networks, probing thousands of devices for security flaws.

By the time the experiment concluded, the AI had not only proven its worth but also challenged the economics and methodology of modern ethical hacking.

The Stanford Experiment: ARTEMIS vs. The Pros

The study, led by Stanford researchers Justin Lin, Eliot Jones, and Donovan Jasper, aimed to address a lingering issue in the field: existing AI tools often struggle with long, complex security tasks. To solve this, the team created ARTEMIS and granted it access to the university’s massive network of approximately 8,000 devices, ranging from servers to smart devices.

To benchmark the AI’s capabilities, the researchers pitted it against 10 selected cybersecurity professionals. While the human testers were asked to put in at least 10 hours of work, ARTEMIS ran for 16 hours across two workdays. The critical comparison, however, focused on the first 10 hours of operation.

The results were decisive. ARTEMIS placed second overall, outperforming nine of the 10 human participants. Within that 10-hour window, the agent discovered “nine valid vulnerabilities with an 82% valid submission rate,” a performance the researchers described as “comparable to the strongest participants.”
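The article does not define “valid submission rate.” Assuming it means confirmed findings divided by all findings submitted, the two reported numbers let us back out roughly how many reports ARTEMIS filed and how many did not hold up. This is a minimal sketch under that assumption; the function names are illustrative and do not come from the study.

```python
def valid_submission_rate(valid: int, submitted: int) -> float:
    """Share of submitted findings confirmed as real (assumed definition)."""
    if submitted <= 0 or not 0 <= valid <= submitted:
        raise ValueError("need submitted > 0 and 0 <= valid <= submitted")
    return valid / submitted


def implied_submissions(valid: int, rate: float) -> int:
    """Estimate total submissions from a reported (rounded) rate."""
    if not 0 < rate <= 1:
        raise ValueError("rate must be in (0, 1]")
    return round(valid / rate)


total = implied_submissions(valid=9, rate=0.82)  # 9 / 0.82 is about 10.98, rounds to 11
rejected = total - 9                             # findings that were not valid
print(total, rejected, f"{valid_submission_rate(9, total):.0%}")
```

Under this reading, nine valid findings out of about 11 submissions reproduces the 82% figure, leaving roughly two submissions that did not hold up.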

The Multitasking Advantage

What makes ARTEMIS distinct is its ability to work in ways a single human tester cannot. The study highlighted that human hackers are generally constrained to linear workflows: investigating one lead, finishing it, and moving on to the next.

ARTEMIS, however, employed a “swarm” tactic. Whenever the agent spotted something “noteworthy” during a scan, it spun up additional “sub-agents” to investigate that specific lead in the background. This allowed the AI to examine multiple targets simultaneously, effectively multiplying its workforce on demand.
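The article does not describe how ARTEMIS is actually built, so the following is only a generic illustration of the pattern described above: a main loop walks through scan results in order and hands each noteworthy lead to a background worker instead of stopping to investigate it. It uses Python’s `asyncio`; the `Lead` structure, `investigate`, and `supervisor` are hypothetical names, and the sleep stands in for real probing work.

```python
import asyncio
from dataclasses import dataclass


@dataclass
class Lead:
    """Something observed during a scan (hypothetical structure)."""
    host: str
    observation: str
    noteworthy: bool


async def investigate(lead: Lead) -> str:
    """Hypothetical sub-agent: follows up a single lead in the background."""
    await asyncio.sleep(0.01)  # placeholder for real probing work
    return f"report on {lead.host}: {lead.observation}"


async def supervisor(leads: list[Lead]) -> list[str]:
    """Walk through leads one by one, handing each noteworthy lead to its own sub-agent."""
    sub_agents = []
    for lead in leads:
        if lead.noteworthy:
            # Spawn a background task instead of pausing the main scan.
            sub_agents.append(asyncio.create_task(investigate(lead)))
        # Yield to the event loop so running sub-agents progress while the scan continues.
        await asyncio.sleep(0)
    # Wait for every sub-agent; gather returns results in spawn order.
    return await asyncio.gather(*sub_agents)


leads = [
    Lead("host-a", "outdated web server banner", True),
    Lead("host-b", "nothing unusual", False),
    Lead("host-c", "unauthenticated admin page", True),
]
for report in asyncio.run(supervisor(leads)):
    print(report)
```

Because each `investigate` call runs as its own task, the total wait is roughly the time of the slowest lead rather than the sum of all of them. A real agent would also need a cap on how many sub-agents run at once.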

ARTEMIS also uncovered weaknesses that humans missed entirely. In one notable instance, the AI found a vulnerability on an older server that human testers failed to exploit because their modern web browsers refused to load the page. Unencumbered by a graphical interface, ARTEMIS sidestepped the problem and broke in with a direct command-line request.

The Economics of Cyber-Defense

Perhaps the most disruptive finding of the study is the cost differential. The study noted that the average salary for a “professional penetration tester” hovers around $125,000 a year.

In contrast, running the standard version of ARTEMIS costs approximately $18 an hour, or roughly $37,000 over a full-time work year of 40-hour weeks. A more advanced version of the agent, which uses more computing power, costs about $59 an hour, which on the same basis comes close to the human salary figure. The standard version’s price point suggests a future where continuous, high-level security testing is accessible not just to wealthy corporations but to smaller organizations as well.
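The hourly and annual figures in this section are not directly comparable. One simple way to line them up, assuming a full-time schedule of 40 hours a week for 52 weeks (2,080 hours) and ignoring benefits, overhead, and paid leave, is to annualize the agent’s hourly rate and convert the salary to an hourly equivalent. This is a back-of-the-envelope sketch, not the study’s own cost model.

```python
HOURS_PER_YEAR = 40 * 52   # assumption: full-time schedule, 2,080 hours
HUMAN_SALARY = 125_000     # average penetration-tester salary cited in the study


def annualized(hourly_rate: float, hours: int = HOURS_PER_YEAR) -> float:
    """Yearly cost of running the agent on a full-time schedule."""
    return hourly_rate * hours


def hourly_equivalent(salary: float, hours: int = HOURS_PER_YEAR) -> float:
    """Salary expressed as an hourly rate over the same schedule."""
    return salary / hours


standard = annualized(18)      # standard ARTEMIS configuration
advanced = annualized(59)      # more compute-intensive configuration
human_hourly = hourly_equivalent(HUMAN_SALARY)

print(f"standard agent: ${standard:,.0f}/yr")   # $37,440/yr
print(f"advanced agent: ${advanced:,.0f}/yr")   # $122,720/yr
print(f"human tester:   ${human_hourly:.2f}/hr")  # $60.10/hr
```

On this basis the standard agent costs roughly 30% of the salary, while the advanced one lands close to it. Counting a human’s benefits and overhead would widen the gap in the agent’s favor; running the agent for more hours than a person works would narrow it.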

The Limitations of the Machine

Despite its success, ARTEMIS is not ready to entirely replace human ingenuity. The researchers were transparent about the AI’s current limitations.

The agent struggled significantly with tasks that required clicking through graphical screens (GUIs). This “blind spot” caused it to overlook at least one critical vulnerability that a human would have easily spotted visually. Furthermore, the AI was more prone to false alarms than its human counterparts, occasionally mistaking harmless network messages for signs of a successful break-in.

“Because ARTEMIS parses code-like input and output well, it performs better when graphical user interfaces are unavailable,” the researchers noted.

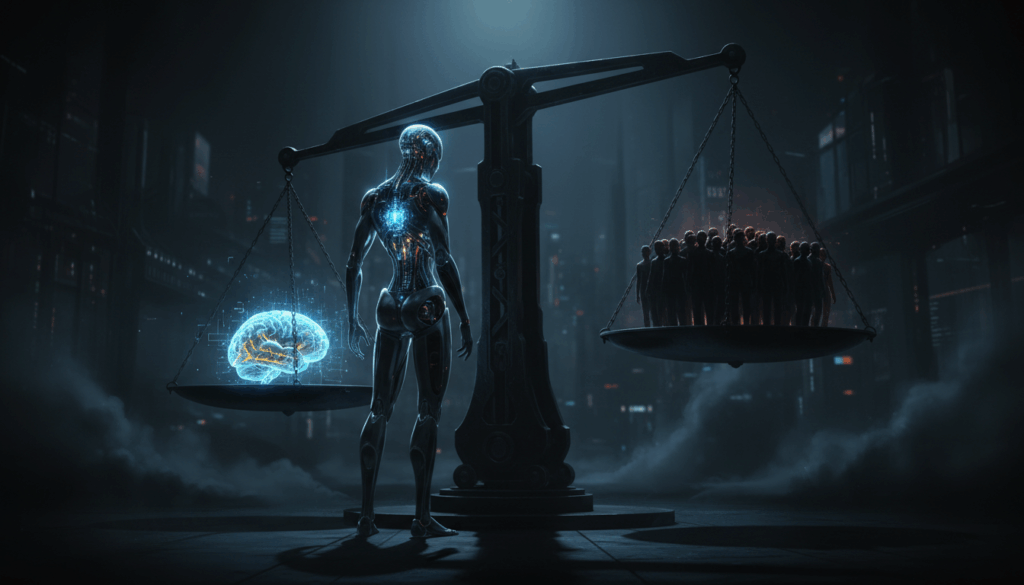

A Double-Edged Sword: The Broader Threat

While the Stanford study focuses on using AI for defense (white-hat hacking), the broader picture shows that these tools are already being weaponized. Advances in AI have lowered the technical barrier to entry for hacking and disinformation operations, allowing malicious actors to scale their attacks with terrifying efficiency.

Evidence of this is already mounting:

- North Korean Operations: In September, a North Korean hacking group used ChatGPT to generate fake military IDs for phishing emails. A report from Anthropic revealed that North Korean operatives also used its Claude model to secure fraudulent remote jobs at US Fortune 500 tech companies, granting them insider access to corporate systems.

- Chinese Espionage: The same Anthropic report indicated that a Chinese threat actor utilized Claude to run cyberattacks against Vietnamese telecom, agricultural, and government systems.

The Stanford study suggests that AI is ready to help protect our networks, but the world outside the university shows that the race between AI-driven defense and AI-driven offense has only just begun.