Moving beyond simple prototyping, Amazon’s trio of new “frontier agents” promises to handle backlog tasks, security reviews, and DevOps automation with minimal human supervision.

- Autonomy: AWS introduced three “frontier agents,” headlined by Kiro, a coding tool that AWS says can learn a team’s specific workflow and operate independently for days at a time.

- The Complete Pipeline: The suite includes a Security Agent and a DevOps Agent, creating a closed loop that writes, secures, tests, and deploys code without constant human intervention.

- Persistent Context: Aiming to solve the “memory loss” problem of traditional LLMs, the agents are designed to maintain context across sessions, pushing the industry closer to AI that acts as a reliable co-worker rather than a tool requiring constant babysitting.

The dream of the AI “co-worker,” a digital entity that doesn’t just assist but actually completes work while you sleep, took a significant step closer to reality this week. At its re:Invent conference on Tuesday, Amazon Web Services announced a suite of three new AI tools dubbed “frontier agents.” The tech world is already accustomed to AI coding assistants, but AWS is making a bolder promise: these agents are designed to understand your specific work style and operate independently for days at a time.

Preview versions of these agents are available now, handling distinct but interconnected pillars of software development: writing code, managing security processes, and automating DevOps tasks.

Kiro: The Agent That Doesn’t Forget

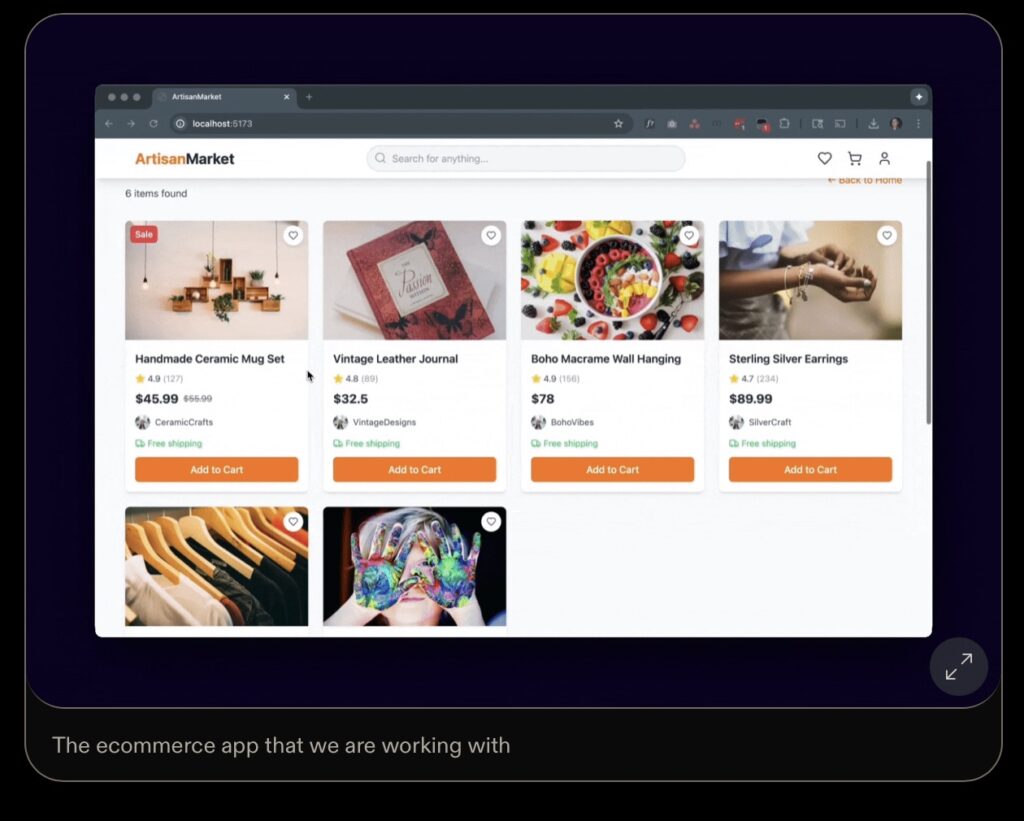

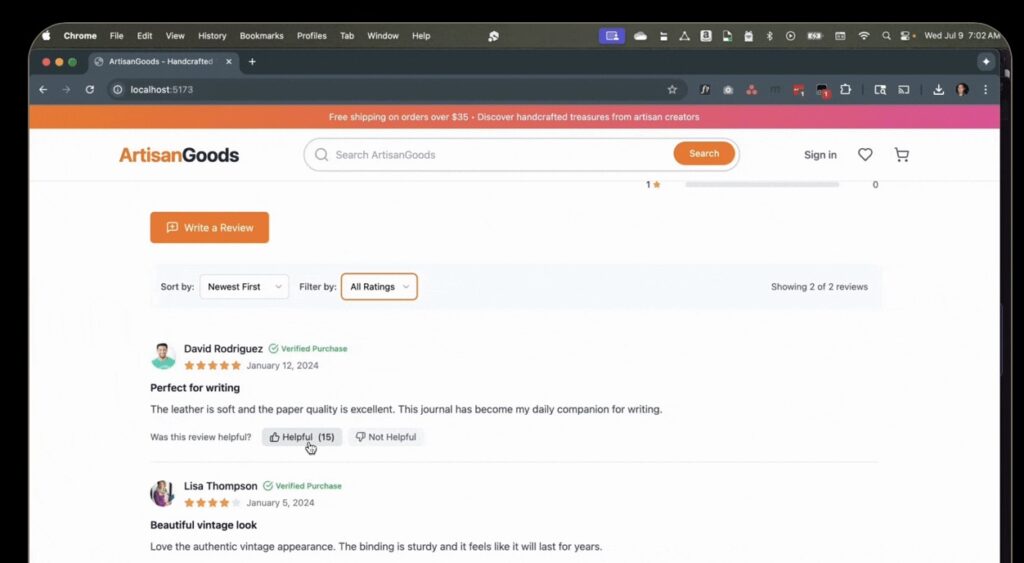

The centerpiece of the announcement is the “Kiro autonomous agent,” a significant evolution of Kiro, the AI coding tool AWS first announced in July. The earlier version was suited to “vibe coding,” essentially rapid prototyping; the new autonomous Kiro is built for operational rigor and designed to produce reliable software that is ready to be pushed live.

The key to this reliability, according to AWS, is what it calls “spec-driven development.” Rather than guessing, Kiro learns. As it codes, the agent prompts the human developer to instruct, confirm, or correct its assumptions, and those exchanges are turned into specifications. Kiro also scans the existing codebase and observes how the team works across its various tools.

“It actually learns how you like to work, and it continues to deepen its understanding of your code and your products and the standards that your team follows over time,” said AWS CEO Matt Garman during his keynote.

Crucially, AWS claims Kiro maintains “persistent context across sessions.” Standard large language models (LLMs) can exhaust their context window and lose track of earlier instructions; Kiro, by contrast, is said to retain its grasp of the project. That lets it be handed a complex task from the backlog and left to work alone for hours or even days. Garman offered a practical example: instead of a developer manually updating a piece of critical code used across 15 different corporate software applications, Kiro can be assigned to fix all 15 instances with a single prompt, verifying its own work along the way.

Securing and Deploying the Code

To make sure the code Kiro writes is safe and functional, AWS introduced two supporting agents that close the automation loop.

The AWS Security Agent runs independently alongside the coding process. It flags security vulnerabilities as code is being written, tests the software afterward, and suggests fixes, effectively acting as an automated code reviewer.

Rounding out the trio is the DevOps Agent. Once code is written and secured, this agent automates the operational heavy lifting. It tests the new code for performance issues and checks for compatibility with other hardware, software, or cloud settings to prevent incidents when the new code is pushed live.

The Race for Long-Term Context

Amazon is not alone in the pursuit of agents with longer attention spans. For example, OpenAI recently stated that its agentic coding model, GPT‑5.1-Codex-Max, is also designed for long runs of up to 24 hours. However, the industry faces a common hurdle: trust.

Currently, LLMs still suffer from accuracy issues and “hallucinations,” forcing developers to act as “babysitters” who must constantly verify short bursts of output. This friction has historically limited the utility of autonomous agents. Developers often prefer assigning short, verifiable tasks rather than trusting an AI with a multi-day project.

For AI to make the transition from tool to true co-worker, agents must be able to work continuously without stalling or losing the thread, which requires more than simply expanding the “context window,” the amount of information a model can hold at once. With Kiro’s promise of persistent context and multi-day autonomy, AWS is betting that its frontier agents represent the next great leap in that direction.