Raspberry Pi’s latest $130 accessory promises local Generative AI with 8GB of dedicated RAM, but real-world testing reveals a solution still searching for its problem.

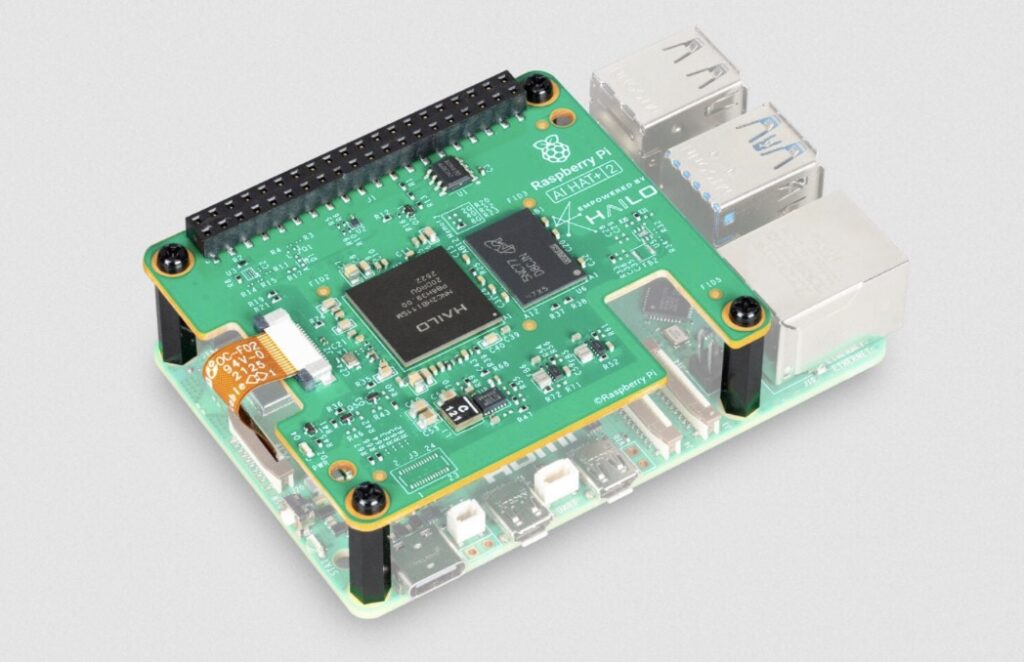

- The Hardware: The new AI HAT+ 2 features a Hailo-10H accelerator and 8GB of dedicated LPDDR4X RAM, offering 40 TOPS of performance to run Generative AI locally without draining the Pi’s system memory.

- The Promise: It is marketed as a total edge AI solution capable of “Mixed Mode”—running computer vision and Large Language Models (LLMs) simultaneously on a tight power budget (under 3W).

- The Reality: While technically impressive, the device is currently hampered by immature software, and for many users, simply buying a Raspberry Pi 5 with 16GB of RAM offers a more flexible and stable experience for running local LLMs.

Raspberry Pi has officially launched the AI HAT+ 2, a $130 add-on board designed to bridge the gap between simple maker projects and the booming world of Generative AI. Following up on the previous AI HAT, this new iteration upgrades the silicon to a Hailo-10H neural network accelerator.

The headline feature here is the addition of 8GB of on-board LPDDR4X RAM. Unlike its predecessor, which relied on the host Raspberry Pi’s memory, the AI HAT+ 2 is self-contained. This allows the accelerator to handle larger models—specifically Generative AI workloads—while theoretically freeing up the Raspberry Pi 5’s CPU and system RAM for other tasks.

Unleashing Generative AI at the Edge

On paper, the specifications are enticing. The Hailo-10H chip delivers 40 TOPS (Tera Operations Per Second) of INT8 inference performance. This is a significant step up from the 26 TOPS found in the previous Hailo-8 hardware.

Raspberry Pi pitches this as the key to unlocking “cloud-free AI computing.” Out of the box, the hardware supports installing and running popular small language models, including:

- DeepSeek-R1-Distill (1.5 billion parameters)

- Llama 3.2 (1 billion parameters)

- Qwen 2.5 (Instruct and Coder variants)

Because the processing happens locally, users gain data privacy and low latency without subscription fees. The device also supports LoRA (Low-Rank Adaptation) fine-tuning, allowing developers to customize these models for specific tasks using the Hailo Dataflow Compiler.
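LoRA is a general technique, not something specific to Hailo. Instead of retraining a full weight matrix, it learns two small low-rank factors and adds their product to the frozen weights. The Python sketch below shows that arithmetic using plain lists. The layer size and rank are hypothetical values chosen for illustration, and nothing here reflects how the Hailo Dataflow Compiler actually builds or packages adapters.

```python
# Generic LoRA arithmetic (illustrative sketch; NOT the Hailo Dataflow
# Compiler workflow). A frozen d x k weight W is adapted as
#   W' = W + (alpha / r) * B @ A,  with B: d x r and A: r x k.

def lora_trainable_params(d: int, k: int, r: int) -> int:
    """Parameters in the low-rank factors B (d x r) and A (r x k)."""
    return r * (d + k)


def matmul(X, Y):
    """Plain-Python matrix product of nested lists."""
    return [
        [sum(X[i][t] * Y[t][j] for t in range(len(Y))) for j in range(len(Y[0]))]
        for i in range(len(X))
    ]


def apply_lora(W, B, A, alpha: float, r: int):
    """Return W + (alpha / r) * (B @ A); W itself is left unchanged."""
    delta = matmul(B, A)
    scale = alpha / r
    return [
        [W[i][j] + scale * delta[i][j] for j in range(len(W[0]))]
        for i in range(len(W))
    ]


if __name__ == "__main__":
    # Hypothetical layer size and rank, for illustration only.
    d, k, r = 2048, 2048, 8
    full = d * k
    lora = lora_trainable_params(d, k, r)
    print(f"full fine-tune: {full:,} params, LoRA: {lora:,} params "
          f"({100 * lora / full:.2f}% of full)")

    # Tiny worked example: 2x2 identity plus a rank-1 update.
    W = [[1, 0], [0, 1]]
    B = [[1], [2]]   # 2 x 1
    A = [[3, 4]]     # 1 x 2
    print(apply_lora(W, B, A, alpha=1.0, r=1))
```

With a hypothetical 2048 × 2048 layer at rank 8, the adapter trains 32,768 parameters instead of 4,194,304, well under 1% of the full matrix. That small footprint is what makes LoRA attractive for customizing a model cheaply.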

The Problem with Real-World Performance

However, when moving from marketing copy to the workbench, the AI HAT+ 2 feels like a piece of hardware that arrived before its software was ready.

While the 8GB of dedicated RAM is a massive improvement, it creates an awkward value proposition: a standard Raspberry Pi 5 can now be purchased with 16GB of system RAM. In testing, running a heavily quantized model such as Qwen 30B on the CPU of a 16GB Pi 5 often proved more flexible than trying to squeeze models into the HAT's 8GB limit.

Many capable medium-sized models target 10-12GB of RAM usage to leave room for a usable context window. Because the HAT is capped at 8GB, users are restricted to tiny models of 1-3 billion parameters. It is impressive that these run at all, but they often struggle with complex reasoning that a larger quantized model running on the Pi's own CPU could handle.
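The 8GB ceiling is easier to see with back-of-the-envelope arithmetic: weight memory is roughly the parameter count times the bits per weight, divided by eight. The Python sketch below assumes weights dominate. It lumps KV cache, runtime, and OS overhead into a single hypothetical 2GB headroom figure, and the 3-bit setting is an assumed stand-in for "heavily quantized." Real usage varies with the runtime, quantization format, and context length.

```python
# Rough memory estimate for quantized LLM weights (a sketch, not a benchmark).
# Assumption: weights dominate; KV cache, runtime, and OS overhead are lumped
# into one hypothetical "headroom_gb" figure.

def weight_footprint_gb(params_billions: float, bits_per_weight: float) -> float:
    """Approximate size of the weights in GB (1 GB = 10**9 bytes)."""
    # params * bits / 8 gives bytes; "billions of params" cancels with "GB".
    return params_billions * bits_per_weight / 8


def fits(params_billions: float, bits_per_weight: float,
         budget_gb: float, headroom_gb: float = 2.0) -> bool:
    """True if weights plus the assumed headroom fit inside the memory budget."""
    return weight_footprint_gb(params_billions, bits_per_weight) + headroom_gb <= budget_gb


if __name__ == "__main__":
    budgets = {"AI HAT+ 2 (8GB)": 8.0, "Pi 5 (16GB)": 16.0}
    # (label, parameters in billions, assumed bits per weight)
    models = [("1.5B @ 4-bit", 1.5, 4), ("30B @ 3-bit", 30, 3)]
    for name, params, bits in models:
        size = weight_footprint_gb(params, bits)
        verdicts = ", ".join(
            f"{label}: {'fits' if fits(params, bits, cap) else 'too big'}"
            for label, cap in budgets.items()
        )
        print(f"{name}: ~{size:.2f} GB of weights -> {verdicts}")
```

Under these assumptions, a 30B model at about 3 bits per weight needs roughly 11.25GB for its weights alone. That lands in the 10-12GB range and fits a 16GB Pi 5, but not the HAT's 8GB, while a 1.5B model fits either with room to spare.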

“Mixed Mode” and the Software Gap

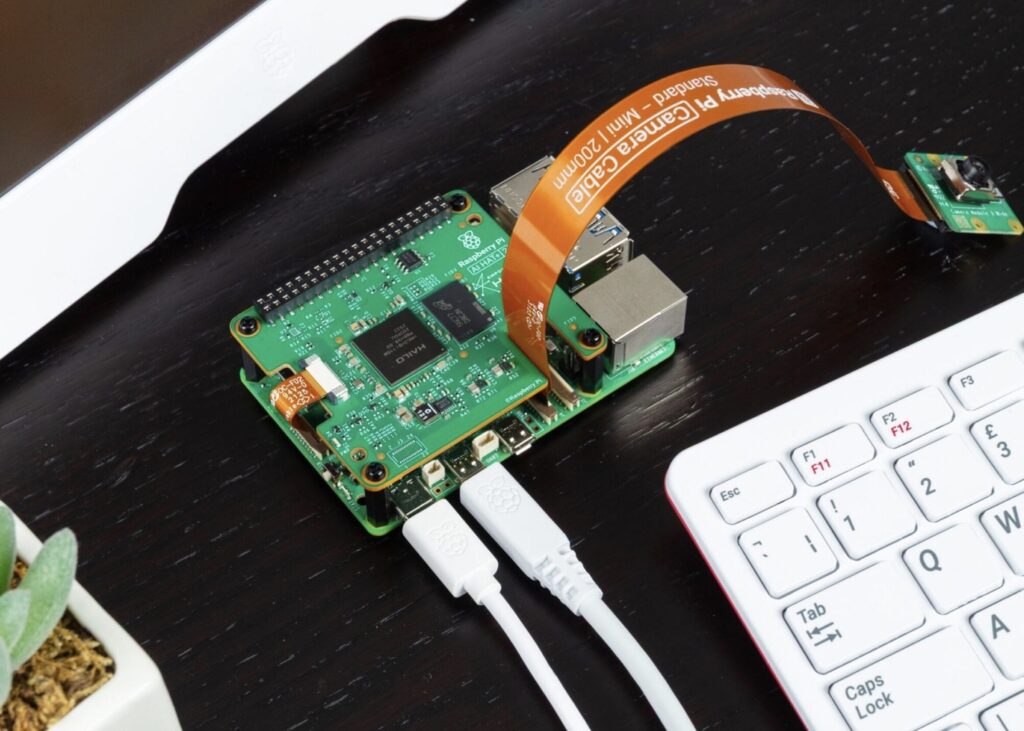

The “killer app” for this device is supposed to be “Mixed Mode”: the ability to process machine vision (like object detection) and run an LLM (like a chatbot) simultaneously. This would be perfect for a robot that sees a cup and can verbally describe it.
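Conceptually, Mixed Mode is a producer-consumer pipeline: a vision stage keeps emitting detections while a language stage turns them into text. The Python sketch below models only that concurrency pattern, using threads and a bounded queue. `detect_objects()` and `describe()` are hypothetical stubs standing in for real vision and LLM calls. They are not Hailo or Raspberry Pi APIs, and the sketch says nothing about how the HAT actually schedules the two workloads on its hardware.

```python
# Hypothetical "Mixed Mode" pipeline: a vision stage and a language stage run
# concurrently and hand results over through a queue. detect_objects() and
# describe() are stand-in stubs, NOT Hailo or Raspberry Pi APIs.
import queue
import threading

_DONE = object()  # sentinel telling the language stage to stop


def detect_objects(frame: str) -> list[str]:
    """Stub vision model: pretend each frame is already a label (or empty)."""
    return [frame] if frame else []


def describe(labels: list[str]) -> str:
    """Stub LLM: turn detected labels into a sentence."""
    return "I can see a " + " and a ".join(labels) + "."


def run_mixed_mode(frames: list[str]) -> list[str]:
    handoff: queue.Queue = queue.Queue(maxsize=4)  # bounded to cap memory use
    replies: list[str] = []

    def vision_stage() -> None:
        for frame in frames:
            labels = detect_objects(frame)
            if labels:  # only forward frames with detections
                handoff.put(labels)  # blocks if the language stage falls behind
        handoff.put(_DONE)

    def language_stage() -> None:
        while True:
            item = handoff.get()
            if item is _DONE:
                break
            replies.append(describe(item))

    workers = [threading.Thread(target=vision_stage),
               threading.Thread(target=language_stage)]
    for w in workers:
        w.start()
    for w in workers:
        w.join()
    return replies


if __name__ == "__main__":
    for line in run_mixed_mode(["cup", "", "keyboard"]):
        print(line)
```

Running it prints one sentence for each frame that contained a detection and skips the empty frame. The bounded queue is a deliberate design choice: if the language stage falls behind, the vision stage waits instead of piling up results in memory.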

Unfortunately, early testing reveals significant growing pains. Attempting to run vision and language models simultaneously resulted in segmentation faults and “device not ready” errors. The software examples provided by Hailo were often outdated or failed to load on the new hardware. As is common in the AI hardware space, the physical board has launched, but the robust software required to make it accessible is lagging behind.

Vision Processing: Good, But Redundant?

If you ignore the LLM capabilities and focus purely on computer vision, the AI HAT+ 2 is a beast. It can identify objects—keyboards, monitors, phones—roughly 10x faster than the Pi’s CPU.

The problem is the price tag. You can achieve similar vision performance with the original $110 AI HAT or the even cheaper $70 AI Camera. If your project only requires a camera to detect shrinkage (inventory loss) at a self-checkout, a Fujitsu demo use case cited by Hailo, you likely don’t need the $130 HAT+ 2.

A Niche Solution for Developers

The Raspberry Pi AI HAT+ 2 is an impressive feat of engineering, packing 40 TOPS and 8GB of RAM into a low-power, compact form factor that is significantly cheaper than running an external GPU. However, for the average hobbyist, it currently feels like a “solution in search of a problem.”

If you need a general-purpose AI playground, a 16GB Raspberry Pi 5 offers more RAM flexibility for LLMs. If you need computer vision, the AI Camera is cheaper.

The AI HAT+ 2 is best suited for a very specific niche: industrial developers building embedded devices (like smart scanners) where power consumption must be kept under 10 watts, and where both vision and text processing are strictly required. For everyone else, it might be best to wait until the software catches up to the hardware.