As synthetic imagery outpaces human intuition, researchers warn that our overconfidence is a cybercriminal’s greatest tool.

- The Deception of Perfection: Modern AI-generated faces have moved past “glitchy” errors; they are now so statistically average and symmetrical that they appear more “real” than actual human photographs.

- The Overconfidence Gap: A new study reveals that while most people believe they can spot a fake, the average person performs only slightly better than a coin flip, and even “super recognizers” struggle to bridge the gap.

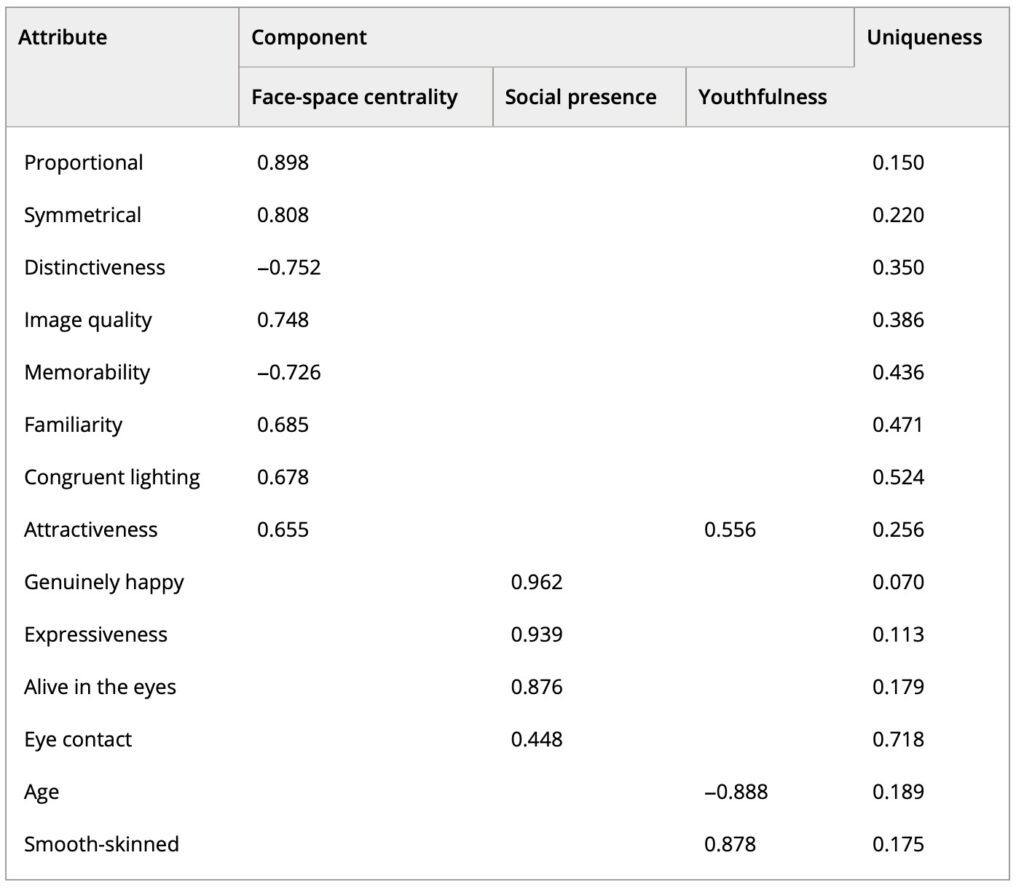

- A Shift in Strategy: Detecting fakes now requires looking for what is “too right” rather than what is “wrong,” shifting the focus to hyper-symmetry and lack of unique human imperfections.

For years, the internet found collective amusement in the “uncanny valley” of early AI-generated imagery. We looked for the six-fingered hands, the melting earlobes, and the warped backgrounds as surefire signs of a digital counterfeit. However, researchers from UNSW Sydney and the Australian National University (ANU) are now issuing a sobering warning: those days are over. AI faces have become “too good to be true,” reaching a level of hyper-realism that makes them virtually indistinguishable from real people to the naked eye.

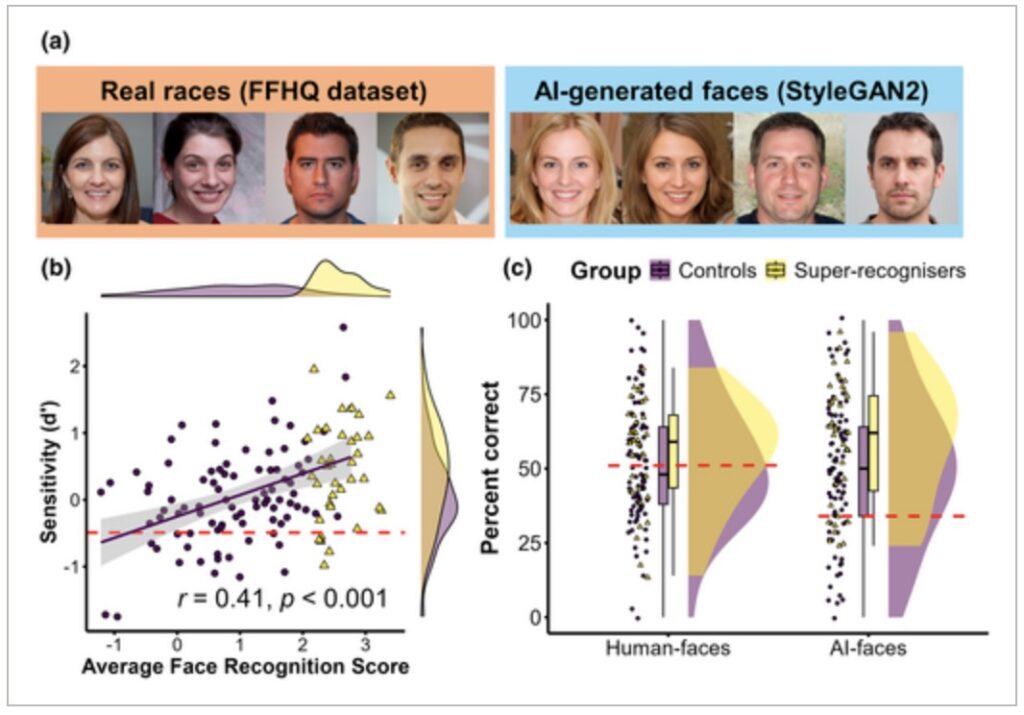

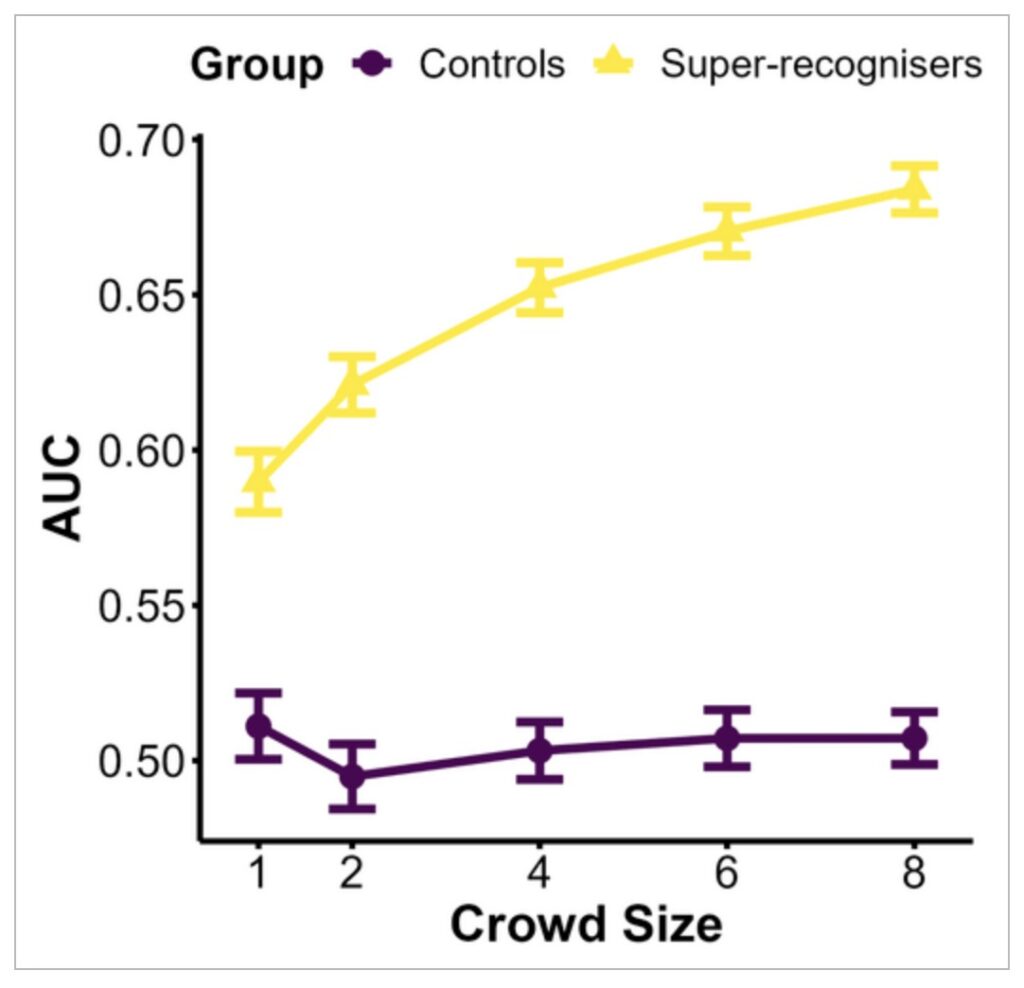

A recent study published in the British Journal of Psychology highlights a dangerous psychological trend: we vastly overestimate our own detection skills. Led by researcher James Dunn, the team recruited 125 participants to put their eyes to the test: 89 people with typical face-recognition ability, who served as the control group, and 36 “super recognizers,” people with a rare, innate talent for remembering and identifying faces. The results were startling. The average person’s ability to spot a synthetic face was only marginally better than random chance, and even the elite super recognizers outperformed the control group by a frustratingly small margin.

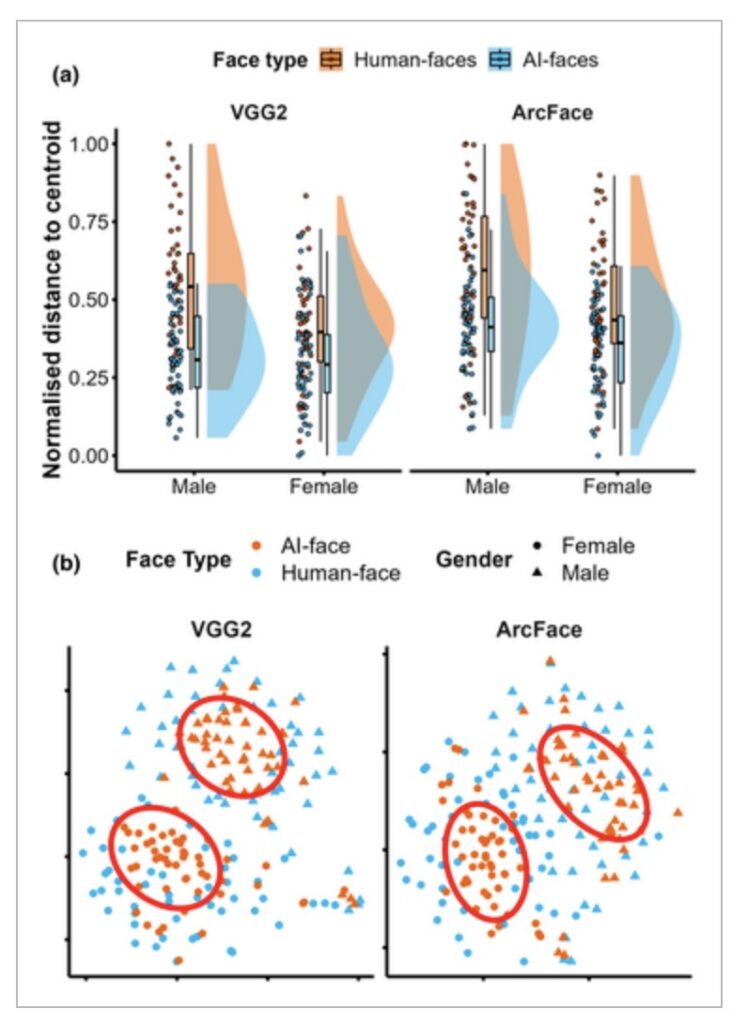

The core of the problem lies in what ANU Associate Professor Amy Dawel calls “hyper-realism.” Modern AI models no longer give themselves away through obvious glitches. Instead, they deceive us through statistical typicality. These faces are highly symmetrical, perfectly proportioned, and lack the subtle asymmetries (the slightly crooked nose or the uneven eyelid) that define a natural human face. In a strange twist of irony, the “perfect” face has become the new red flag. Because generative models learn the statistical regularities of large collections of face photographs, their output tends to sit close to the mathematical “average” of human features, and the results can look more convincingly “human” than a real photograph.
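To make the idea of “hyper-symmetry” concrete, here is a minimal Python sketch of one way left-right symmetry could be quantified. It is an illustration only, not the method used by the UNSW and ANU researchers. The function name `mirror_asymmetry` and the tiny sample grids are hypothetical, and the sketch assumes a grayscale image that has already been cropped so the face is centered and upright.

```python
def mirror_asymmetry(image):
    """Mean absolute difference between each pixel and its horizontal mirror.

    `image` is a list of equal-length rows of grayscale values (0-255).
    Returns 0.0 for a perfectly left-right symmetric image; larger values
    mean more asymmetry. Hypothetical helper, for illustration only.
    """
    if not image or not image[0]:
        raise ValueError("image must be a non-empty 2D grid")
    width = len(image[0])
    if any(len(row) != width for row in image):
        raise ValueError("all rows must have the same length")

    total = 0
    count = 0
    for row in image:
        # Compare the left half with the mirrored right half.
        # For an odd width, the middle column has no partner and is skipped.
        for x in range(width // 2):
            total += abs(row[x] - row[width - 1 - x])
            count += 1
    return total / count if count else 0.0


# Toy 2x4 "images" (assumed data, not real faces).
symmetric = [
    [10, 50, 50, 10],
    [20, 80, 80, 20],
]
lopsided = [
    [10, 50, 60, 10],
    [20, 80, 80, 40],
]

print(mirror_asymmetry(symmetric))  # 0.0
print(mirror_asymmetry(lopsided))   # 7.5
```

A score near zero only says that an image is unusually symmetrical. Lighting, head pose, and cropping all affect the number, so a low score on its own is not evidence that a face is synthetic.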

This evolution in technology poses a significant threat to security and identity verification. Because participants in the study were highly confident despite their poor performance, the door is left wide open for fraudsters and cybercriminals. If we believe we cannot be fooled, we are less likely to employ the rigorous verification steps needed to thwart deepfakes. We are currently living in a window where the visual clues have not entirely disappeared, but they have become so subtle that they require specialized analysis to detect.

The researchers at UNSW and ANU are not giving up. They plan to study the small minority of individuals who can consistently identify these fakes to understand what specific patterns they are seeing. By decoding the secrets of these “super AI face detectors,” they hope to build better anti-fake technologies and public training programs. For now, the best defense is humility. If a face looks perfectly symmetrical and statistically flawless, it might just be a product of code rather than biology.