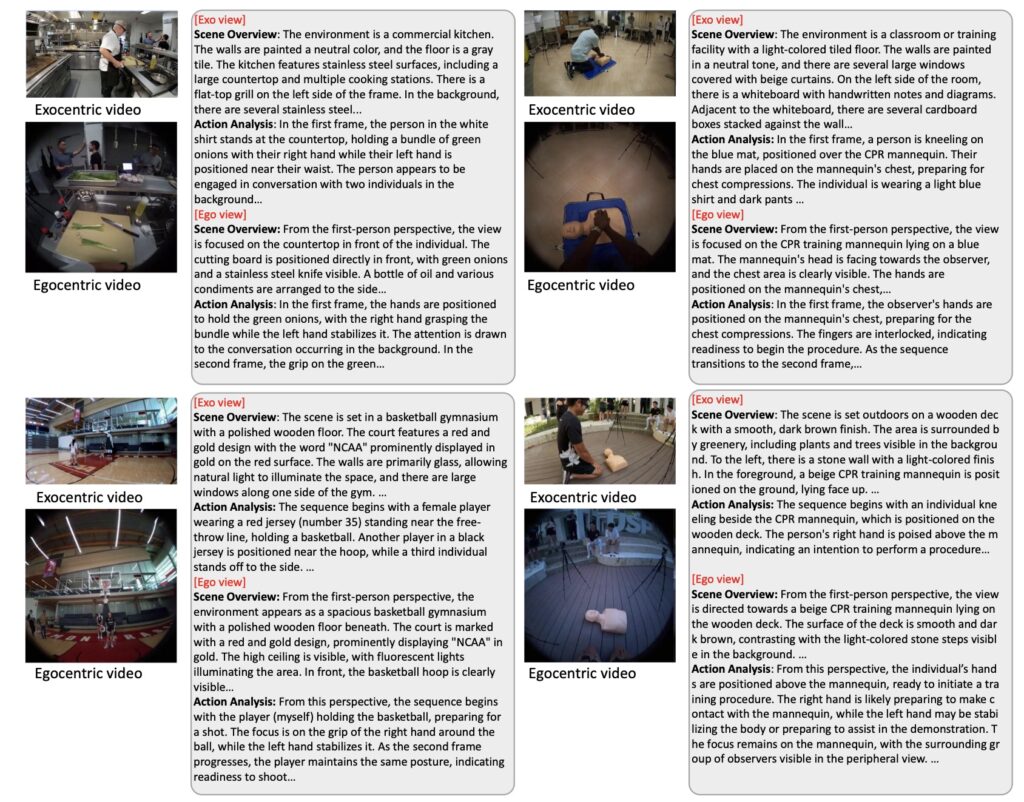

Unlocking the power of immersive storytelling, robotics, and AR by synthesizing realistic egocentric perspectives from standard footage.

- Immersive Transformation: EgoX is a framework that generates realistic first-person (egocentric) videos from a single third-person (exocentric) video, letting viewers step directly into the scene.

- Advanced Architecture: The system combines lightweight LoRA adaptation, a unified conditioning strategy, and geometry-guided self-attention to maintain visual fidelity despite extreme changes in camera angle.

- Broad Applications: Beyond letting moviegoers “become” the main character, this technology is critical for advancing robotics and AR/VR by helping machines understand and imitate human interaction from a first-person viewpoint.

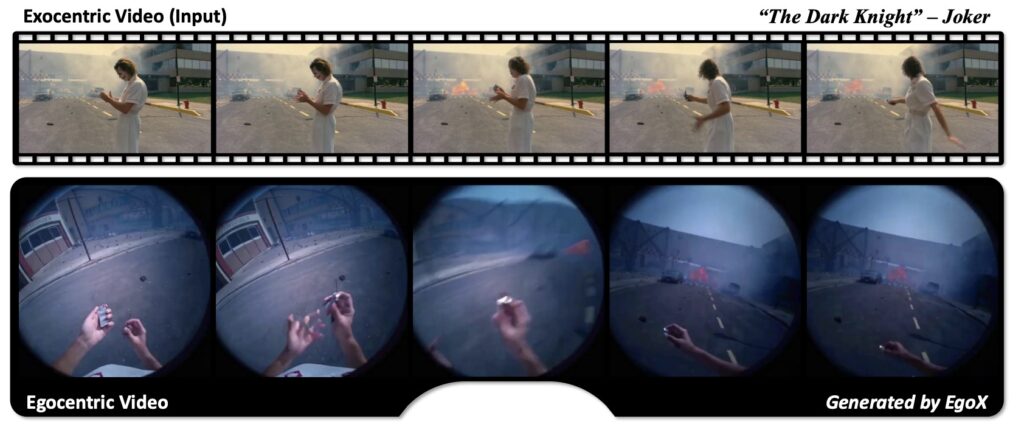

Have you ever watched a film like The Dark Knight and wished you could experience the chaos of Gotham not as a spectator, but through the eyes of the Joker himself? For decades, video consumption has been a passive activity; we watch scenes unfold from a detached, “exocentric” third-person perspective. However, a new frontier in artificial intelligence is emerging that promises to shatter this barrier. We are moving toward a world where viewers are no longer limited to the sidelines but can step onto the field as an MLB player or become the superhero in a blockbuster movie. This is the promise of EgoX, a novel framework designed to translate exocentric videos into immersive egocentric (first-person) experiences.

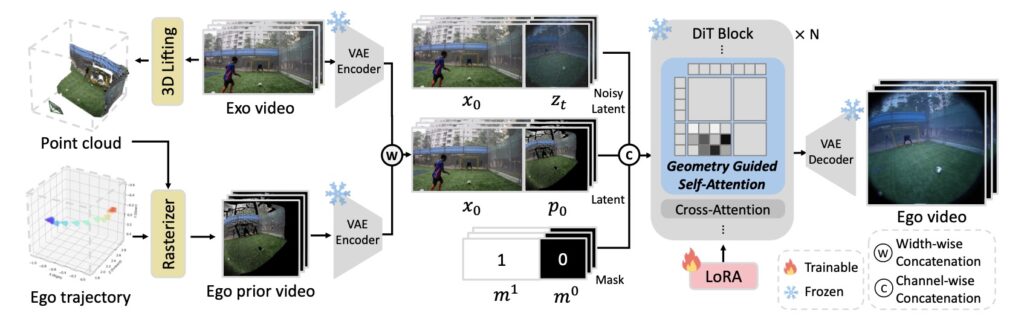

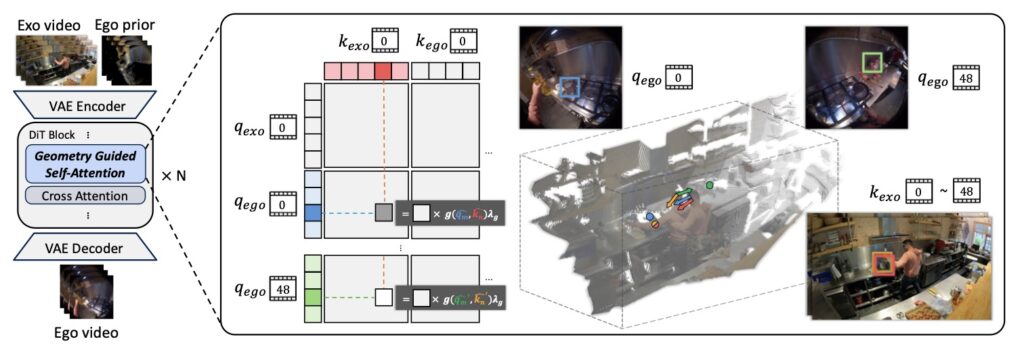

The challenge of converting a third-person view into a first-person view is immense. It requires more than just cropping or zooming; the AI must fundamentally understand the geometry of the world. Because the camera pose varies so wildly between a bystander’s view and the actor’s view, there is often minimal overlap in what is visible. The system faces the difficult task of faithfully preserving the content we can see while realistically synthesizing the regions we cannot see, all while maintaining geometric consistency. EgoX addresses this by leveraging the vast spatio-temporal knowledge embedded in large-scale video diffusion models, adapting them for this specific, complex task.
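To make the overlap problem concrete, here is a minimal pinhole-camera sketch in Python (NumPy). It is not part of EgoX: the intrinsics `K`, the two camera poses, and the four test points are made-up values, chosen so that one point is seen by both cameras, one only by the exocentric camera, one only by the egocentric camera, and one by neither.

```python
import numpy as np


def project_points(points_world, R, t, K, width, height):
    """Project (N, 3) world points into a pinhole camera.

    R (3x3) and t (3,) map world to camera coordinates: X_cam = R @ X_world + t.
    Returns (N, 2) pixel coordinates and an (N,) boolean visibility mask.
    """
    cam = points_world @ R.T + t              # camera-frame coordinates
    in_front = cam[:, 2] > 1e-6               # points behind the camera cannot be seen
    z = np.where(in_front, cam[:, 2], 1.0)    # dummy depth avoids dividing by zero
    uv = (cam @ K.T)[:, :2] / z[:, None]      # perspective division
    inside = (
        (uv[:, 0] >= 0) & (uv[:, 0] < width)
        & (uv[:, 1] >= 0) & (uv[:, 1] < height)
    )
    return uv, in_front & inside


K = np.array([[100.0, 0.0, 64.0],
              [0.0, 100.0, 64.0],
              [0.0, 0.0, 1.0]])              # hypothetical intrinsics for a 128x128 image

# Exocentric (bystander) camera at the origin, looking along +z.
R_exo, t_exo = np.eye(3), np.zeros(3)

# Egocentric (actor) camera at (-5, 0, 5), looking along +x: a 90-degree change of viewpoint.
R_ego = np.array([[0.0, 0.0, -1.0],
                  [0.0, 1.0, 0.0],
                  [1.0, 0.0, 0.0]])          # rows are the camera's x, y, z axes in world coordinates
t_ego = -R_ego @ np.array([-5.0, 0.0, 5.0])

points = np.array([[0.0, 0.0, 5.0],    # A: visible to both cameras
                   [0.0, 0.0, -5.0],   # B: outside both views
                   [0.0, 0.0, 20.0],   # C: exocentric only
                   [10.0, 0.0, 5.0]])  # D: egocentric only

_, exo_vis = project_points(points, R_exo, t_exo, K, 128, 128)
_, ego_vis = project_points(points, R_ego, t_ego, K, 128, 128)
print("visible in both:", exo_vis & ego_vis)       # only A
print("must be synthesized:", ego_vis & ~exo_vis)  # only D
```

Point A is the content a model can preserve by copying from the input; point D is visible from the actor's viewpoint but absent from the exocentric footage, so it has to be synthesized.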

To achieve this level of realism, EgoX builds on a pre-trained video diffusion model rather than training one from scratch. It adapts that model with LoRA (Low-Rank Adaptation), a lightweight fine-tuning method that trains small low-rank weight updates while leaving the original weights frozen, which keeps the computational cost manageable. The core innovation, however, lies in its “unified conditioning strategy,” which combines priors from both the exocentric and egocentric perspectives via width- and channel-wise concatenation. To keep the output from drifting into distorted or geometrically implausible content, EgoX also uses a geometry-guided self-attention mechanism that lets the model focus selectively on spatially relevant regions, so the generated world stays coherent and visually faithful to the original scene.
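The three ingredients described above can be sketched in NumPy as follows. This is an illustrative sketch under stated assumptions, not EgoX's implementation. `LoRALinear` follows the standard LoRA formulation (a frozen weight plus a scaled low-rank update whose up-projection starts at zero). The tensor layout in `unified_condition` (exocentric latent placed beside the target along the width axis, an egocentric prior stacked along the channel axis, zero padding on the exocentric half) and the viewing-direction bias in `geometry_guided_attention` are hypothetical stand-ins for the paper's concatenation scheme and geometry-guided self-attention.

```python
import numpy as np


class LoRALinear:
    """Frozen linear layer W plus a trainable low-rank update (alpha / r) * B @ A."""

    def __init__(self, W, rank, alpha, rng):
        self.W = W                                        # pretrained weight, (out, in), kept frozen
        out_dim, in_dim = W.shape
        self.A = rng.normal(0.0, 0.01, (rank, in_dim))   # trainable down-projection
        self.B = np.zeros((out_dim, rank))                # trainable up-projection, zero at init
        self.scale = alpha / rank

    def __call__(self, x):
        # With B = 0 the layer reproduces the pretrained output exactly.
        return x @ self.W.T + self.scale * (x @ self.A.T) @ self.B.T


def unified_condition(noisy_ego, exo_latent, ego_prior):
    """Hypothetical layout; all inputs are (C, T, H, W) latents with the same shape."""
    # Width-wise: place the exocentric latent beside the egocentric target so a
    # single self-attention pass sees both views.
    canvas = np.concatenate([exo_latent, noisy_ego], axis=-1)              # (C, T, H, 2W)
    # Channel-wise: attach the egocentric prior to the target half; the exo half
    # is zero-padded (an assumption made for this sketch).
    prior = np.concatenate([np.zeros_like(ego_prior), ego_prior], axis=-1)  # (C, T, H, 2W)
    return np.concatenate([canvas, prior], axis=0)                          # (2C, T, H, 2W)


def geometry_guided_attention(q, k, v, q_dirs, k_dirs, strength=1.0):
    """Softmax attention with an additive bias from 3D viewing-direction agreement.

    q: (Nq, d); k, v: (Nk, d); q_dirs (Nq, 3) and k_dirs (Nk, 3) are unit vectors
    (hypothetical per-token ray directions derived from camera geometry).
    """
    logits = q @ k.T / np.sqrt(q.shape[-1])
    logits = logits + strength * (q_dirs @ k_dirs.T)       # favor geometrically aligned tokens
    logits = logits - logits.max(axis=-1, keepdims=True)   # numerical stability
    weights = np.exp(logits)
    weights = weights / weights.sum(axis=-1, keepdims=True)
    return weights @ v, weights
```

Two design choices are worth noting: the zero-initialized `B` means fine-tuning starts from exactly the pretrained model's behavior, and setting `strength=0.0` reduces the attention sketch to plain scaled dot-product attention, so the geometric bias is a strictly additive nudge.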

The implications of this technology extend far beyond entertainment. While the ability to turn a movie scene into a first-person experience is thrilling, the scientific applications are equally profound. Humans perceive and interact with the world strictly from an egocentric viewpoint. Therefore, in fields like robotics and Augmented/Virtual Reality (AR/VR), understanding the world from the “actor’s” point of view is crucial. By generating high-fidelity egocentric videos, EgoX could supply the kind of first-person data that helps robots better imitate human actions, reason about their environment, and interact with objects more naturally.

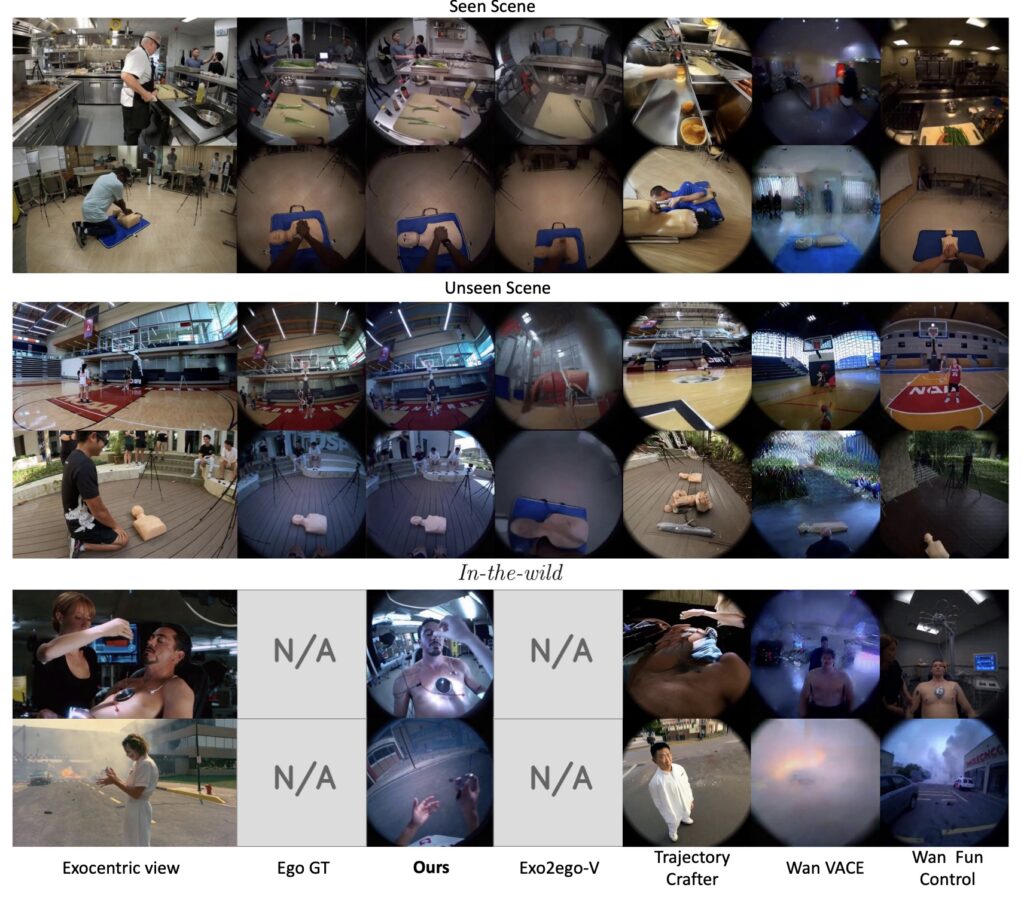

EgoX has demonstrated strong scalability and robustness, performing well even on unseen “in-the-wild” videos. However, the technology is still evolving. Currently, the framework requires an egocentric camera pose as input, essentially telling the AI where the “head” is looking. While users can provide this interactively, the researchers behind EgoX note that incorporating an automatic head-pose estimation module is a valuable direction for future development. As this technology matures, the line between watching a story and living it will continue to blur, offering a new lens through which we can understand both our entertainment and our reality.
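A camera pose of this kind is commonly expressed as a world-to-camera rotation and translation. The sketch below builds one from a head position and a point the head is looking at. The `look_at` helper, the OpenCV-style axis convention (x right, y down, z forward), and the example coordinates are assumptions for illustration; EgoX may parameterize the pose differently.

```python
import numpy as np


def look_at(eye, target, world_up=(0.0, 1.0, 0.0)):
    """Hypothetical helper: world-to-camera (R, t) for a head at `eye` looking at `target`.

    Uses an OpenCV-style camera frame (x right, y down, z forward), so that
    X_cam = R @ X_world + t.
    """
    eye = np.asarray(eye, dtype=float)
    forward = np.asarray(target, dtype=float) - eye
    forward /= np.linalg.norm(forward)                  # camera z axis
    right = np.cross(-np.asarray(world_up, dtype=float), forward)
    norm = np.linalg.norm(right)
    if norm < 1e-8:
        raise ValueError("viewing direction is parallel to world_up")
    right /= norm                                        # camera x axis
    down = np.cross(forward, right)                      # camera y axis
    R = np.stack([right, down, forward])                 # rows are camera axes in world coordinates
    t = -R @ eye
    return R, t


# Head at (-5, 0, 5) looking toward a point 5 units ahead along +x.
R, t = look_at(eye=(-5.0, 0.0, 5.0), target=(0.0, 0.0, 5.0))
print(R @ np.array([0.0, 0.0, 5.0]) + t)   # approximately (0, 0, 5): the target lies on the optical axis
```

Calling such a helper once per frame with a moving `eye` and `target` would yield a per-frame head trajectory of the sort a user might supply interactively, and it is this kind of input an automatic head-pose estimator would need to produce.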