How xGen-MM is revolutionizing AI with cutting-edge datasets, powerful multimodal models, and open-source innovation.

- Advanced AI Framework: xGen-MM (BLIP-3) is a state-of-the-art framework for building Large Multimodal Models (LMMs) using meticulously curated datasets, optimized architectures, and streamlined training methods.

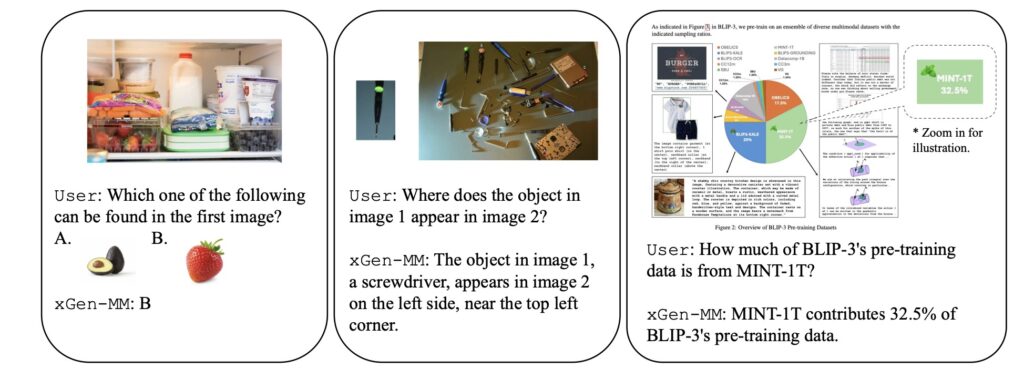

- Emergent Capabilities: The models exhibit emergent abilities such as multimodal in-context learning and achieve competitive performance across a variety of benchmarks. They are released as open source to support continued research.

- Empowering the Community: With a focus on scaling high-quality data, xGen-MM makes large-scale datasets, models, and fine-tuning code available to the research community, bridging the gap between proprietary and open-source AI development.

As the AI landscape evolves, Large Multimodal Models (LMMs) are making significant strides. These systems process several forms of data, such as images and text, within a single model. Enter xGen-MM (BLIP-3), a framework that changes how open multimodal models are built. Building on its predecessor BLIP-2, xGen-MM introduces curated large-scale datasets, a simplified training recipe, and scalable architectures, all released as open source for researchers worldwide.

The xGen-MM Framework: Next-Level Multimodal AI

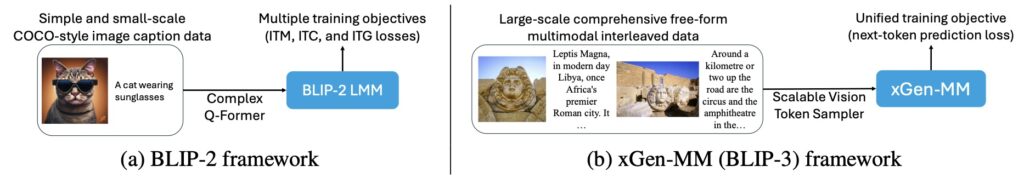

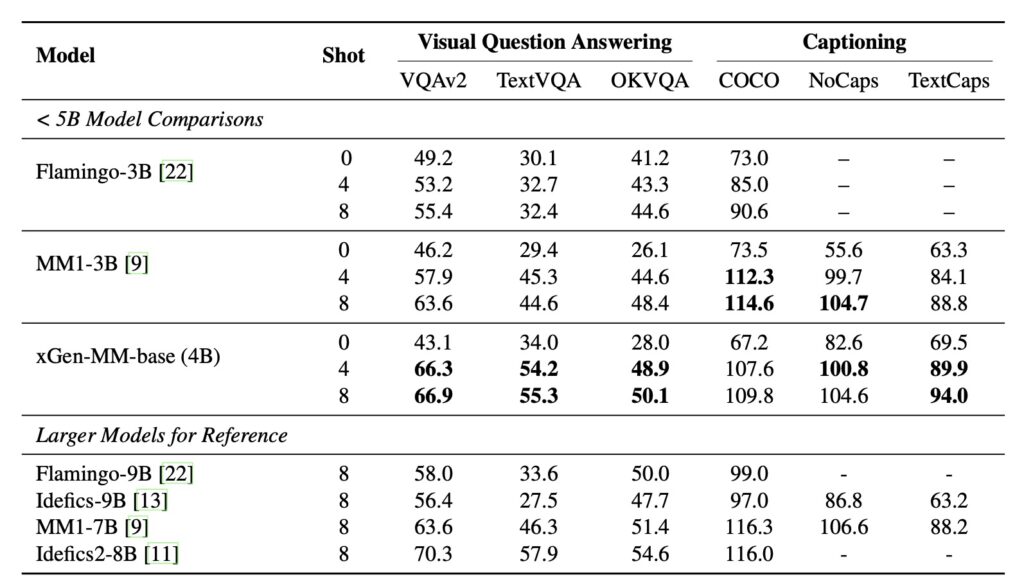

xGen-MM, short for xGen-MultiModal, is the latest addition to Salesforce's xGen initiative, which previously produced foundation models for text and code generation. With xGen-MM (also known as BLIP-3), the focus shifts to Large Multimodal Models that integrate vision and language data, with the aim of closing the performance gap with proprietary models. The framework comprises a collection of curated datasets, a streamlined training recipe, and simplified model architectures designed for scalability and efficiency.

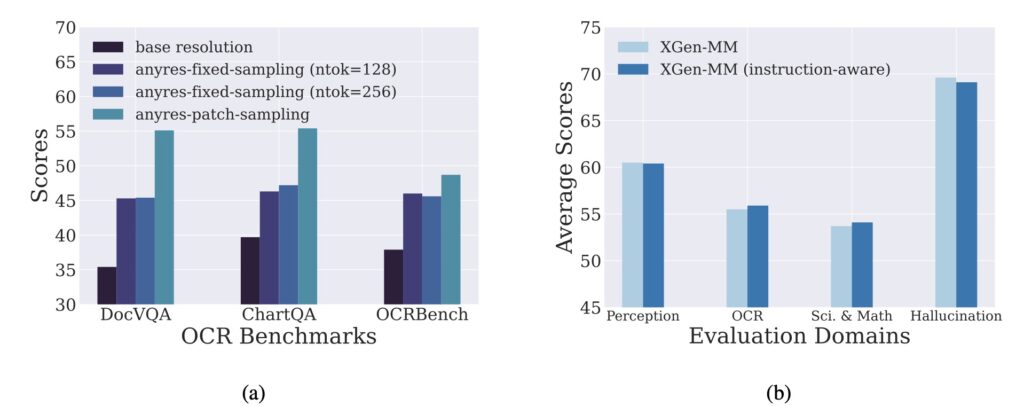

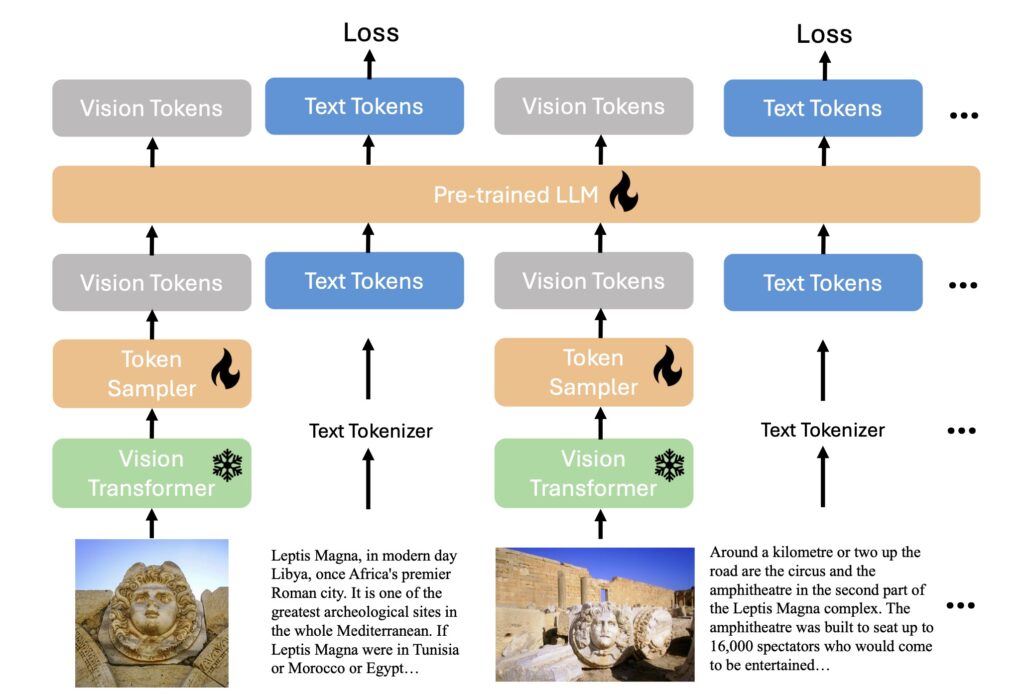

xGen-MM replaces the Q-Former used in BLIP-2 with a more scalable vision token sampler (a perceiver resampler), which condenses the image encoder's features into a compact set of visual tokens for the language model. It also drops BLIP-2's multiple training objectives (image-text contrastive, image-text matching, and image-grounded text generation losses) and trains solely with an auto-regressive loss on the text tokens in a multimodal context.
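A perceiver-style token sampler can be sketched as a cross-attention step in which a fixed set of learned latent queries attends to the image encoder's patch embeddings. The sketch below is a simplified, single-head, single-layer illustration with random weights, assuming NumPy; the function name `resample_vision_tokens` and all dimensions are hypothetical, and xGen-MM's actual sampler stacks more layers with trained parameters.

```python
import numpy as np


def softmax(x: np.ndarray, axis: int = -1) -> np.ndarray:
    # Subtract the row max for numerical stability before exponentiating.
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def resample_vision_tokens(
    patch_embeds: np.ndarray,  # (num_patches, d_model) from a vision encoder
    latents: np.ndarray,       # (num_latents, d_model) learned queries (hypothetical)
    w_q: np.ndarray,           # (d_model, d_model) query projection
    w_k: np.ndarray,           # (d_model, d_model) key projection
    w_v: np.ndarray,           # (d_model, d_model) value projection
) -> np.ndarray:
    """Single-head cross-attention from latent queries to image patches.

    Returns (num_latents, d_model): a fixed number of visual tokens,
    regardless of how many patches the encoder produced.
    """
    # Perceiver-style resamplers let latents attend to [patches; latents].
    kv_input = np.concatenate([patch_embeds, latents], axis=0)
    q = latents @ w_q
    k = kv_input @ w_k
    v = kv_input @ w_v
    scores = q @ k.T / np.sqrt(q.shape[-1])
    attn = softmax(scores, axis=-1)  # (num_latents, num_patches + num_latents)
    # Residual connection keeps each latent's identity in its output token.
    return latents + attn @ v


rng = np.random.default_rng(0)
d_model, num_latents = 64, 16
latents = rng.normal(size=(num_latents, d_model))
w_q, w_k, w_v = (rng.normal(size=(d_model, d_model)) / np.sqrt(d_model) for _ in range(3))

for num_patches in (256, 729):
    patches = rng.normal(size=(num_patches, d_model))
    tokens = resample_vision_tokens(patches, latents, w_q, w_k, w_v)
    print(num_patches, "->", tokens.shape)  # both print (16, 64)
```

Because the number of latent queries, not the number of patches, fixes the number of visual tokens, the language model's input length stays constant as the encoder's output grows, which is one reason this design scales more easily than a heavier bridging module.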

Raising the Bar with Superior Datasets

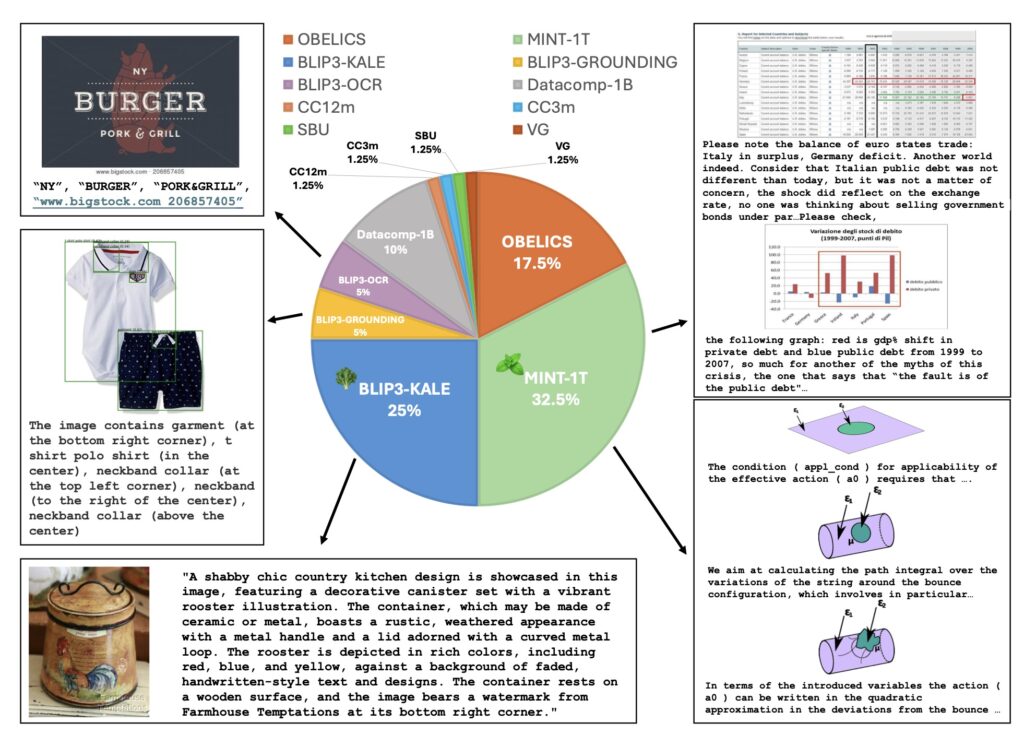

To train robust, high-performing models, xGen-MM relies on a series of large-scale datasets built specifically for multimodal tasks. Central to this effort are two flagship datasets: MINT-1T, an interleaved image-text dataset with about one trillion text tokens, and BLIP3-KALE, a knowledge-augmented dense caption dataset. Both are curated to improve the model's performance across a wide range of multimodal benchmarks.
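To make "interleaved" concrete, the sketch below flattens one document (an ordered mix of text spans and images) into a single sequence in which each image reserves a block of slots for visual tokens. It then computes a next-token loss that counts only text targets, in the spirit of xGen-MM's text-only auto-regressive objective. It assumes NumPy; the whitespace tokenizer, `IMAGE_PLACEHOLDER`, `ImageRef`, `flatten_interleaved`, and `text_only_next_token_loss` are hypothetical illustrations, not MINT-1T's schema or xGen-MM's training code.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple, Union

import numpy as np

# Hypothetical id reserving a slot the model fills with visual tokens
# (e.g. a vision token sampler's output); not a real vocabulary entry.
IMAGE_PLACEHOLDER = -1


@dataclass
class ImageRef:
    path: str  # a real pipeline would load and encode the pixels


Segment = Union[str, ImageRef]


def toy_tokenize(text: str, vocab: Dict[str, int]) -> List[int]:
    # Whitespace tokenizer standing in for a subword tokenizer;
    # unseen words get the next free id.
    return [vocab.setdefault(word, len(vocab)) for word in text.split()]


def flatten_interleaved(
    doc: List[Segment], vocab: Dict[str, int], tokens_per_image: int
) -> Tuple[List[int], List[bool]]:
    """Flatten a document in reading order; is_text is False on image slots."""
    ids: List[int] = []
    is_text: List[bool] = []
    for seg in doc:
        if isinstance(seg, ImageRef):
            ids.extend([IMAGE_PLACEHOLDER] * tokens_per_image)
            is_text.extend([False] * tokens_per_image)
        else:
            toks = toy_tokenize(seg, vocab)
            ids.extend(toks)
            is_text.extend([True] * len(toks))
    return ids, is_text


def text_only_next_token_loss(
    logits: np.ndarray, ids: List[int], is_text: List[bool]
) -> float:
    """Mean next-token cross-entropy over text targets only.

    logits has shape (seq_len, vocab_size); position t predicts token t + 1.
    """
    targets = np.array(ids[1:])
    keep = np.array(is_text[1:])
    shifted = logits[:-1] - logits[:-1].max(axis=-1, keepdims=True)
    log_probs = shifted - np.log(np.exp(shifted).sum(axis=-1, keepdims=True))
    # Image-slot targets are masked out; index them with 0 only to stay in range.
    safe_targets = np.where(keep, targets, 0)
    nll = -log_probs[np.arange(len(safe_targets)), safe_targets]
    return float(nll[keep].mean())


vocab: Dict[str, int] = {}
doc = ["a red bicycle", ImageRef("bike.jpg"), "parked by a wall"]
ids, is_text = flatten_interleaved(doc, vocab, tokens_per_image=4)
print(ids)  # [0, 1, 2, -1, -1, -1, -1, 3, 4, 0, 5]
# With uniform logits, every text target costs log(vocab_size) = log(6).
loss = text_only_next_token_loss(np.zeros((len(ids), len(vocab))), ids, is_text)
print(round(loss, 4))  # 1.7918
```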

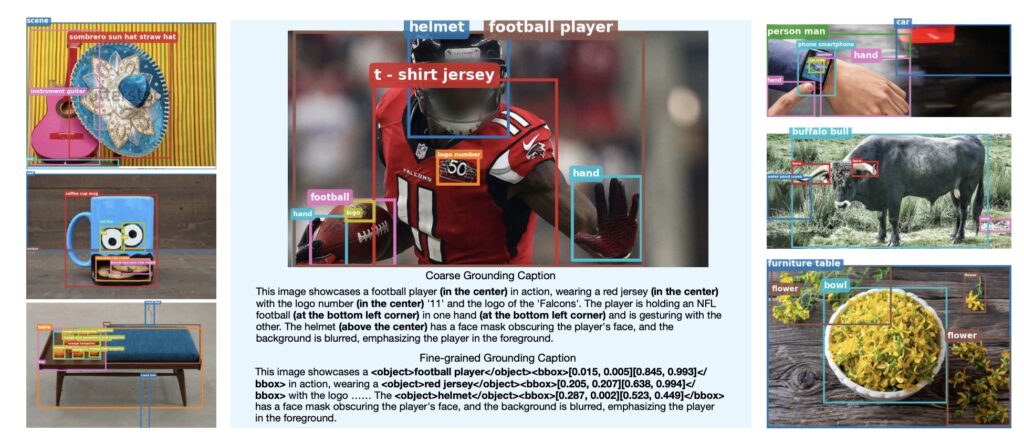

Two further specialized datasets extend these capabilities: BLIP3-OCR-200M, which provides dense OCR annotations, and BLIP3-GROUNDING-50M, which targets visual grounding. Together they help the model with tasks that require precise alignment between text and specific image content, such as reading text in an image or locating a described object.

Emerging Abilities and Open-Source Innovation

xGen-MM (BLIP-3) has been evaluated across multiple benchmarks, and its models show emergent abilities such as multimodal in-context learning: given a few interleaved image-text examples in the prompt, the model can pick up a new task without any weight updates. This contributes to strong results on tasks ranging from image captioning to visual question answering.
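In multimodal in-context learning, the task is specified entirely in the prompt: a few image-question-answer examples precede the query, and the model continues the pattern. The sketch below assembles such a few-shot prompt; the `<image>` marker, the "Question:/Answer:" template, and `build_few_shot_prompt` are hypothetical and are not xGen-MM's actual prompt format.

```python
from typing import List, Tuple

IMAGE_TOKEN = "<image>"  # hypothetical marker; each is later replaced by visual tokens


def build_few_shot_prompt(
    examples: List[Tuple[str, str, str]],  # (image_path, question, answer)
    query_image: str,
    query_question: str,
) -> Tuple[str, List[str]]:
    """Return (prompt_text, image_paths), with images listed in prompt order."""
    parts: List[str] = []
    images: List[str] = []
    for image_path, question, answer in examples:
        images.append(image_path)
        parts.append(f"{IMAGE_TOKEN} Question: {question} Answer: {answer}")
    images.append(query_image)
    # The query stops at "Answer:" so the model's continuation is its answer.
    parts.append(f"{IMAGE_TOKEN} Question: {query_question} Answer:")
    return "\n".join(parts), images


shots = [
    ("cat.jpg", "What animal is this?", "A cat."),
    ("dog.jpg", "What animal is this?", "A dog."),
]
prompt, images = build_few_shot_prompt(shots, "horse.jpg", "What animal is this?")
print(prompt)
print(images)  # ['cat.jpg', 'dog.jpg', 'horse.jpg']
```

Keeping the image list in the same order as the markers matters, since each marker must be paired with the right image when the visual tokens are inserted.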

Perhaps the most exciting aspect of xGen-MM is its commitment to the open-source community. By providing access to its models, curated datasets, and fine-tuning codebase, xGen-MM bridges the gap between proprietary and open-source AI systems. Researchers and developers can now explore, replicate, and enhance multimodal foundation models without facing the typical barriers associated with closed-source solutions.

Empowering AI Research for the Future

With the release of xGen-MM (BLIP-3), Salesforce is offering the AI community a powerful new toolset that is not only scalable and efficient but also accessible. By prioritizing open-source development and high-quality data curation, xGen-MM empowers researchers to push the boundaries of multimodal AI. As LMMs continue to gain prominence, frameworks like xGen-MM are essential for ensuring that innovation remains open, collaborative, and community-driven.

xGen-MM (BLIP-3) isn’t just another AI framework—it’s a step forward in making advanced multimodal AI more understandable, replicable, and scalable for everyone.