How Advanced Mixture-of-Experts Architecture and High-Definition Capabilities Are Transforming Video Generation for Creators and Researchers Alike

- Pioneering Innovations: Wan2.2 introduces a Mixture-of-Experts (MoE) architecture, cinematic-level aesthetics, and enhanced motion generation, increasing total model capacity while keeping per-step inference cost nearly unchanged.

- Efficient and Accessible: With support for high-definition text-to-video, image-to-video, and hybrid generation at 720P and 24fps, Wan2.2 runs on consumer-grade hardware and integrates seamlessly with community tools like DiffSynth-Studio and ComfyUI.

- Open-Source Impact: Backed by expanded training data, detailed installation guides, and a forward-looking todo list, Wan2.2 outperforms closed-source models while fostering collaboration under the Apache 2.0 license.

In the rapidly evolving world of artificial intelligence, video generation has long been a frontier where creativity meets computational power. Enter Wan2.2, the latest upgrade to the Wan series of open and advanced large-scale video generative models. Developed by a dedicated team at Wan-AI, this release isn’t just an incremental update—it’s a game-changer that democratizes high-quality video creation. By building on the foundation of Wan2.1, Wan2.2 incorporates groundbreaking features like an effective Mixture-of-Experts (MoE) architecture, meticulously curated aesthetic data for cinematic flair, and a massive expansion in training data that elevates motion complexity and semantic understanding. Whether you’re a filmmaker dreaming up epic scenes or a researcher pushing the boundaries of AI, Wan2.2 promises to make professional-grade video synthesis more accessible than ever.

At the heart of Wan2.2’s innovations is its MoE architecture, an idea adapted from large language models and applied here to the video diffusion process. The denoising task is split across two specialized “experts”: a high-noise expert that handles the early stages and establishes the broad layout, and a low-noise expert that refines details later on. In the A14B model series, each expert has about 14 billion parameters, for a total of about 27 billion, but only one expert (about 14 billion parameters) is active at any given step. Inference cost and GPU memory usage therefore stay nearly unchanged while the model’s overall capacity grows. The switch between experts is guided by the signal-to-noise ratio (SNR): denoising starts with the high-noise expert, when noise dominates and SNR is at its minimum, and moves to the low-noise expert once the timestep falls below a threshold (t_moe) defined in terms of half of the minimum SNR. In validation experiments, Wan2.2’s MoE setup reaches the lowest loss among the compared baselines, indicating better convergence and a closer match to real-world video distributions. This isn’t just technical jargon; it translates to videos that feel more natural and detailed, opening doors for applications in entertainment, education, and beyond.

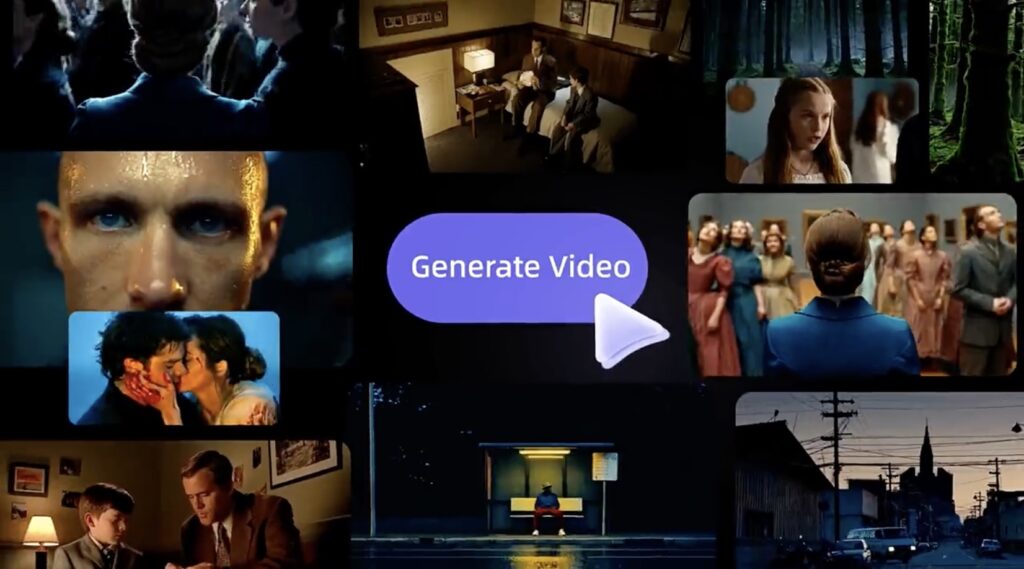
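To make the expert switch concrete, here is a minimal sketch of timestep-based routing. It is not the Wan2.2 implementation: the function names, the normalized timestep range (1.0 for pure noise, 0.0 for a clean sample), the evenly spaced schedule, and the threshold value `0.875` are all assumptions chosen for illustration. The only behavior taken from the description above is that the high-noise expert runs first and the low-noise expert takes over once the timestep drops below t_moe.

```python
from typing import List, Tuple

HIGH_NOISE = "high_noise_expert"  # hypothetical label for the early-stage expert
LOW_NOISE = "low_noise_expert"    # hypothetical label for the late-stage expert


def select_expert(t: float, t_moe: float) -> str:
    """Pick the expert for a normalized timestep t in [0, 1] (1.0 = pure noise).

    The low-noise expert takes over once t falls below the threshold t_moe.
    """
    return HIGH_NOISE if t >= t_moe else LOW_NOISE


def routing_schedule(num_steps: int, t_moe: float) -> List[Tuple[float, str]]:
    """List (timestep, expert) pairs for an evenly spaced denoising schedule.

    Exactly one expert is selected per step, which is why the active
    parameter count stays at one expert's size.
    """
    timesteps = [1.0 - i / num_steps for i in range(num_steps)]
    return [(t, select_expert(t, t_moe)) for t in timesteps]


if __name__ == "__main__":
    # t_moe = 0.875 is an illustrative value, not a documented setting.
    for t, expert in routing_schedule(num_steps=8, t_moe=0.875):
        print(f"t={t:.3f} -> {expert}")
```

Because the routing depends only on the timestep, the two experts never run in the same step, so per-step compute matches that of a single expert.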

Beyond architecture, Wan2.2 shines in delivering cinematic-level aesthetics that rival professional productions. The model is trained on carefully curated data with labels for lighting, composition, contrast, color tones, and more, allowing users to customize styles with precision. Want a moody noir scene or a vibrant summer blockbuster vibe? Wan2.2 makes it controllable and effortless. This is amplified by a staggering increase in training data—65.6% more images and 83.2% more videos than Wan2.1—enhancing generalization across motions, semantics, and visuals. The result? Top-tier performance that outstrips both open- and closed-source competitors, as benchmarked on Wan-Bench 2.0. From anthropomorphic cats boxing in spotlighted arenas to serene beach cats on surfboards, the model’s ability to generate complex, fluid motions brings prompts to life with unprecedented realism.

Efficiency is another cornerstone of Wan2.2, especially with its high-definition hybrid text-image-to-video (TI2V) capabilities. The open-sourced 5B model, powered by the advanced Wan2.2-VAE, has a T×H×W compression ratio of 4×16×16 (4×32×32 in total once the patchification layer is included), enabling 720P video at 24fps on consumer-grade GPUs such as an RTX 4090. This hybrid approach supports text-to-video (T2V), image-to-video (I2V), and combined TI2V in a unified framework, generating 5-second clips in under 9 minutes without heavy optimization. For larger setups, multi-GPU inference via PyTorch FSDP and DeepSpeed Ulysses accelerates things further, making it suitable for both industrial applications and academic research. Tests across GPUs highlight its practicality: on high-end cards, it balances speed and memory usage well, so even hobbyists can experiment without breaking the bank.
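The effect of these compression ratios can be worked through with a little arithmetic. The sketch below is an illustration under stated assumptions, not the model's actual shape logic: it assumes a 5-second clip at 24fps (120 frames), a 720P-class frame size of 1280×704 chosen because it divides evenly by 32, and plain ceiling division along each axis. Real implementations may treat the first frame or padding differently, and channel counts are ignored.

```python
import math
from typing import Tuple

Ratio = Tuple[int, int, int]  # (time, height, width) compression factors


def latent_grid(frames: int, height: int, width: int, ratio: Ratio) -> Tuple[int, int, int]:
    """Number of latent positions along (time, height, width) after compression."""
    rt, rh, rw = ratio
    return (math.ceil(frames / rt), math.ceil(height / rh), math.ceil(width / rw))


def reduction_factor(ratio: Ratio) -> int:
    """How many input positions map onto one latent position (channels ignored)."""
    rt, rh, rw = ratio
    return rt * rh * rw


if __name__ == "__main__":
    frames = 5 * 24            # 5 seconds at 24fps
    height, width = 704, 1280  # assumed 720P-class size divisible by 32

    vae_only = (4, 16, 16)     # VAE compression ratio, T x H x W
    with_patch = (4, 32, 32)   # total ratio including patchification

    print(latent_grid(frames, height, width, vae_only))    # (30, 44, 80)
    print(latent_grid(frames, height, width, with_patch))  # (30, 22, 40)
    print(reduction_factor(vae_only), reduction_factor(with_patch))  # 1024 4096
```

Under these assumptions the model works on a 30×22×40 grid, or 26,400 positions, rather than the roughly 108 million pixel positions in the raw clip. This is the main reason a 5B model can handle 720P video on a single consumer GPU.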

The community around Wan2.2 is thriving, amplifying its reach. Tools like DiffSynth-Studio offer low-memory offloading, FP8 quantization, and LoRA training, while Kijai’s ComfyUI WanVideoWrapper provides cutting-edge optimizations for ComfyUI workflows. If your project builds on Wan2.2, the team encourages you to share it so that community work can be highlighted. Looking ahead, the todo list covers multi-GPU inference code, checkpoints for the A14B and 5B models, and integrations with ComfyUI and Diffusers for T2V, I2V, and TI2V. This forward momentum underscores Wan2.2’s role in fostering innovation.

From a broader perspective, Wan2.2 represents a pivotal shift in AI video generation, bridging the gap between open-source accessibility and commercial-grade quality. In an industry dominated by closed models, its Apache 2.0 license empowers users with full rights over generated content, as long as it adheres to ethical guidelines—no harmful misinformation or violations of privacy. By outperforming leaders on benchmarks while running efficiently, Wan2.2 lowers barriers for creators worldwide, potentially sparking revolutions in fields like virtual reality, advertising, and storytelling.

Wan2.2 isn’t just about generating videos; it’s about unleashing creativity on a global scale. As AI continues to blur the lines between imagination and reality, models like this pave the way for a future where anyone can craft cinematic masterpieces from simple prompts. Dive in, experiment, and see how Wan2.2 can transform your ideas into moving art.