New research suggests that while Large Language Models (LLMs) like ChatGPT offer unprecedented speed, they may be exacting a hidden price on our neural connectivity and critical thinking skills.

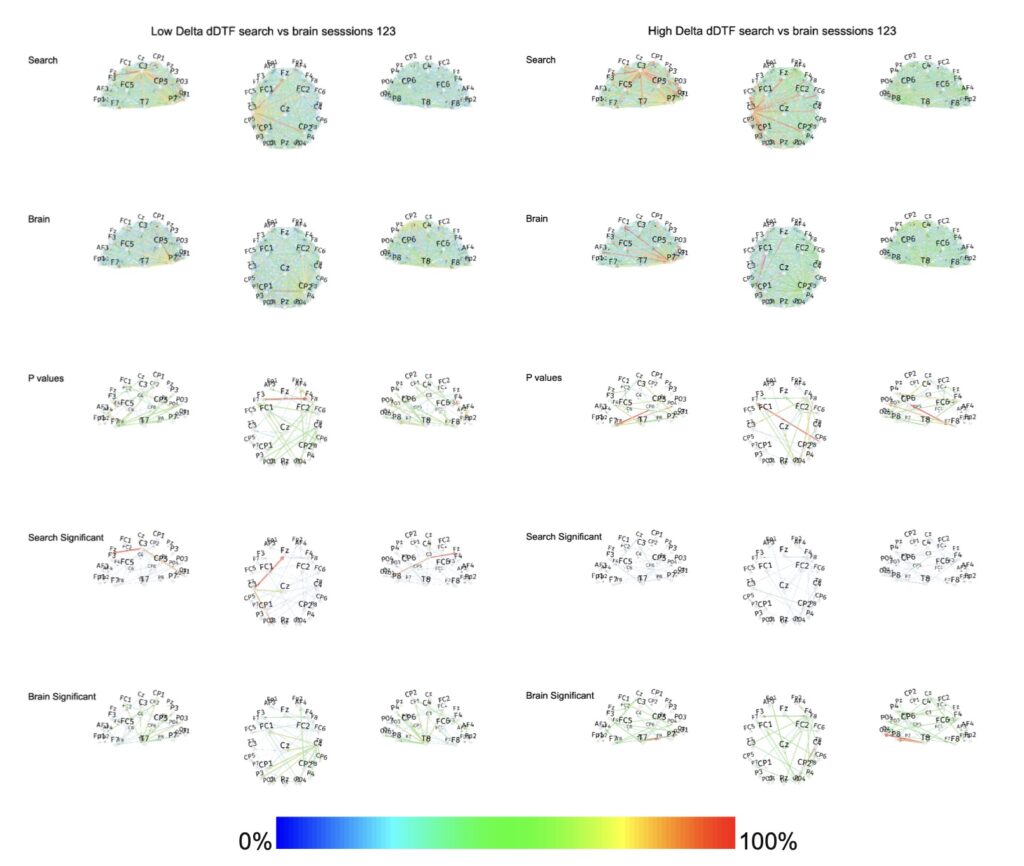

- Neural Disengagement: EEG data showed that participants using LLMs displayed weaker brain connectivity than those using Search Engines or no tools at all, suggesting a significant reduction in cognitive effort.

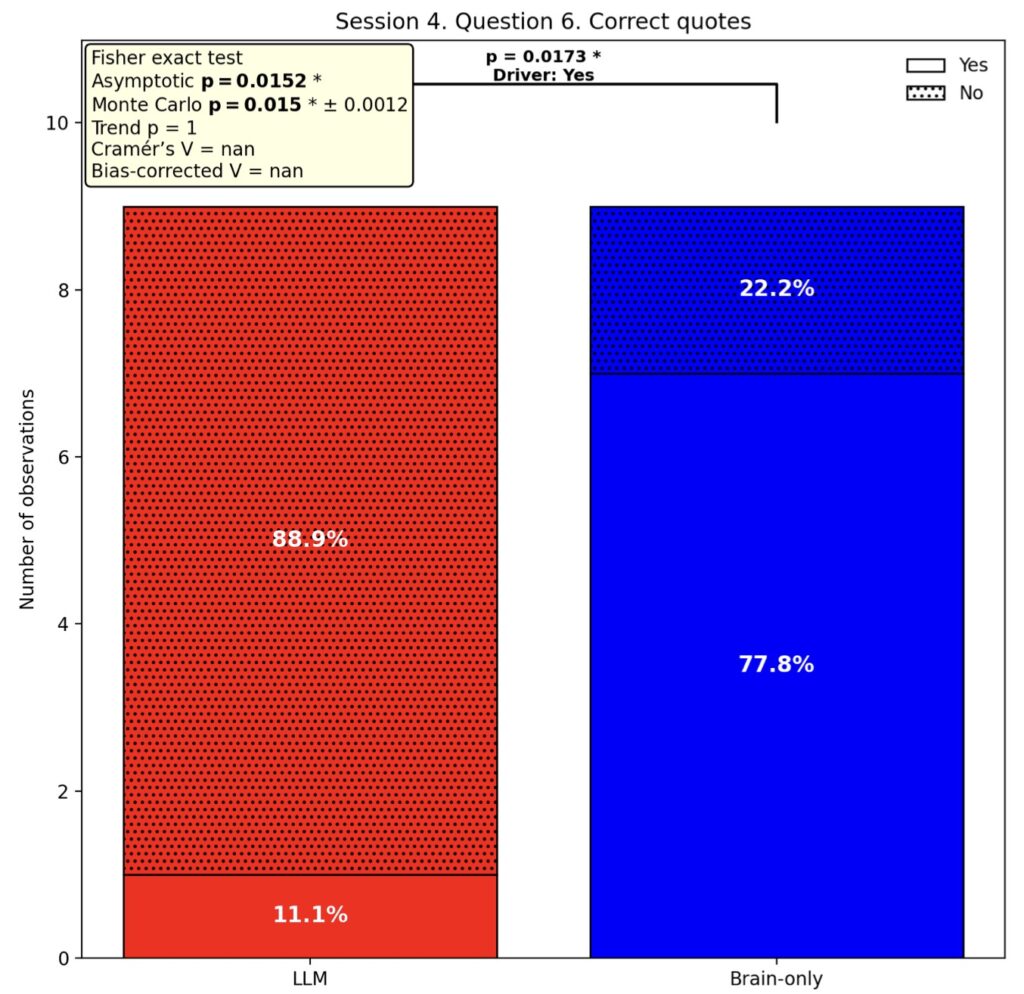

- The “Cognitive Debt” Effect: When habitual LLM users were forced to write without AI (Session 4), they exhibited “under-engagement” and struggled to activate necessary brain networks, whereas non-users who switched to AI showed heightened critical processing.

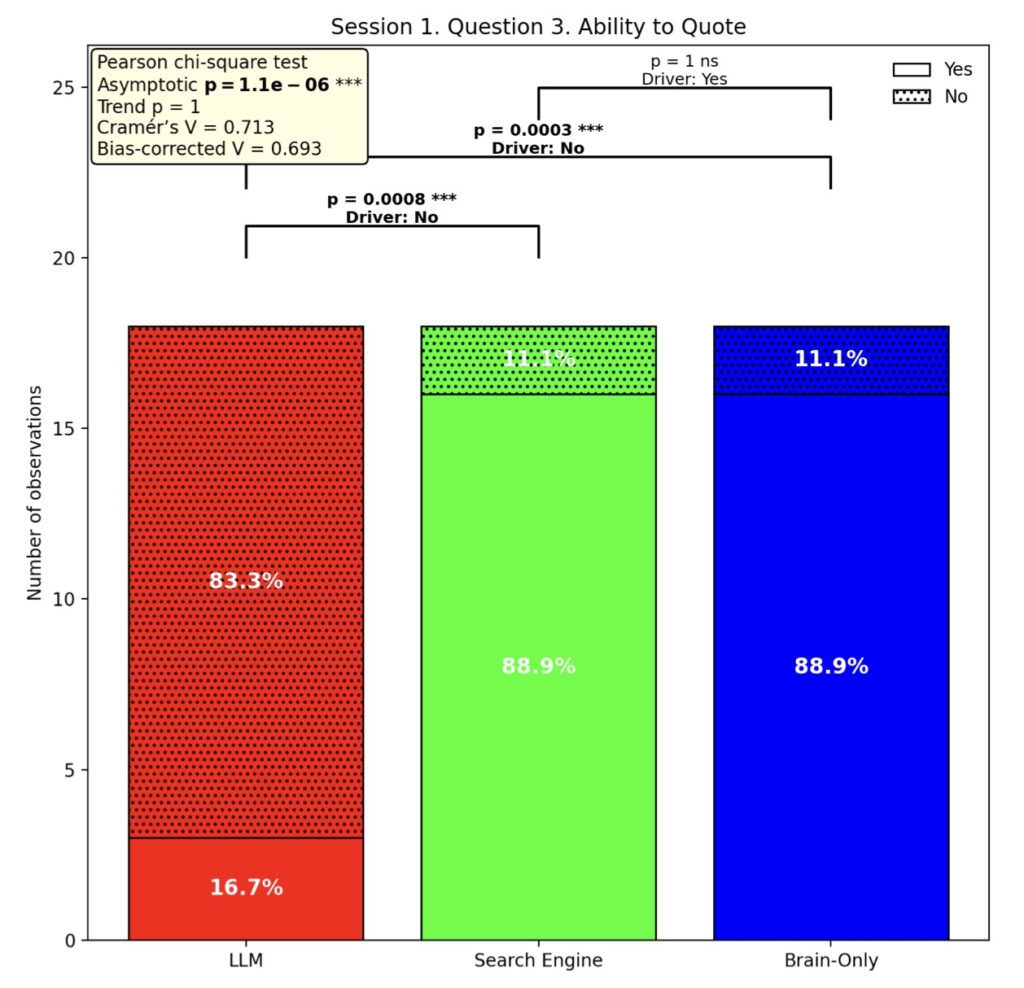

- Loss of Ownership: LLM users reported the lowest sense of ownership over their work and frequently failed to recall or quote their own essays, while human teachers easily identified AI-generated texts due to their structural homogeneity.

The rapid proliferation of Large Language Models (LLMs) has fundamentally transformed how we work, play, and learn. Tools like ChatGPT promise to democratize education and streamline complex tasks. However, this immediate convenience may come at a steep cognitive cost. A new study titled “Your Brain on ChatGPT” explores a critical question: Does offloading writing tasks to AI lead to “cognitive atrophy”?

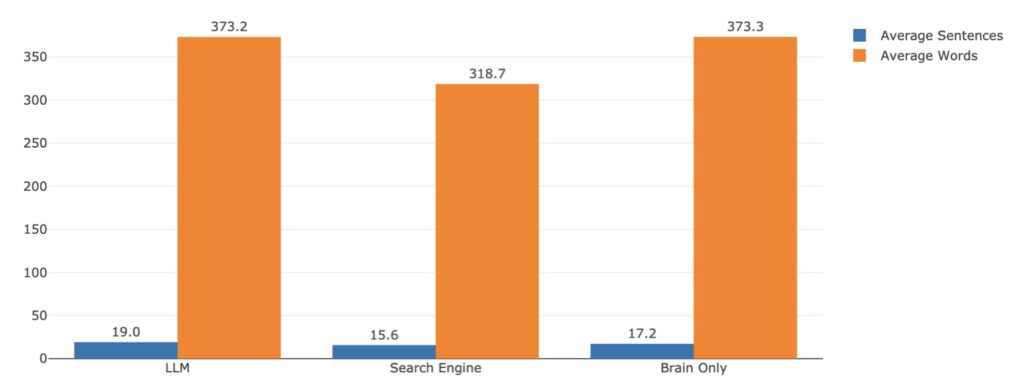

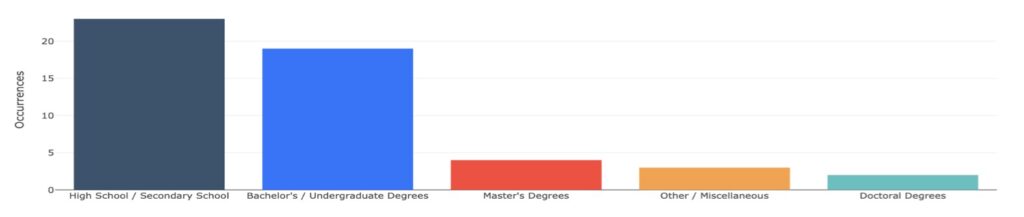

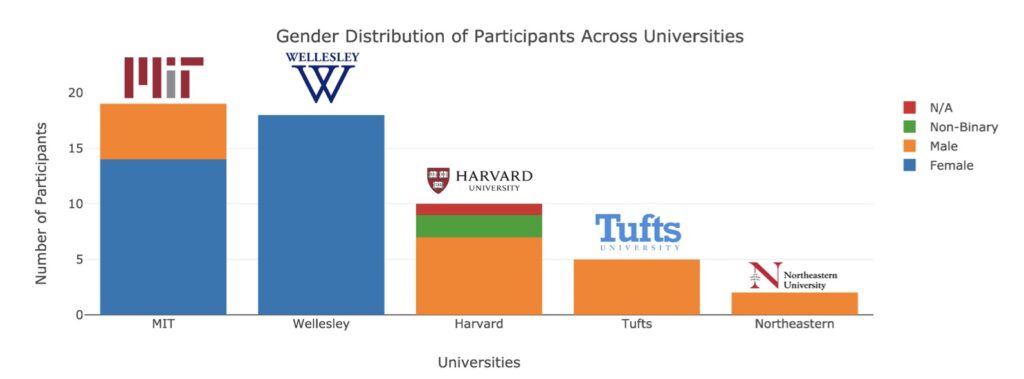

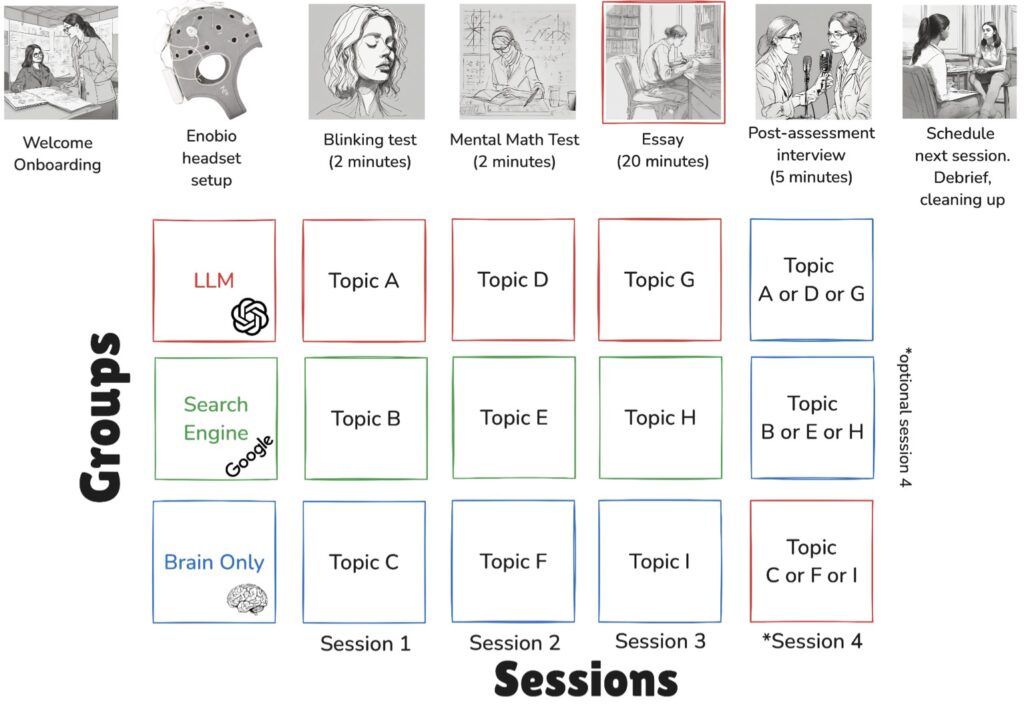

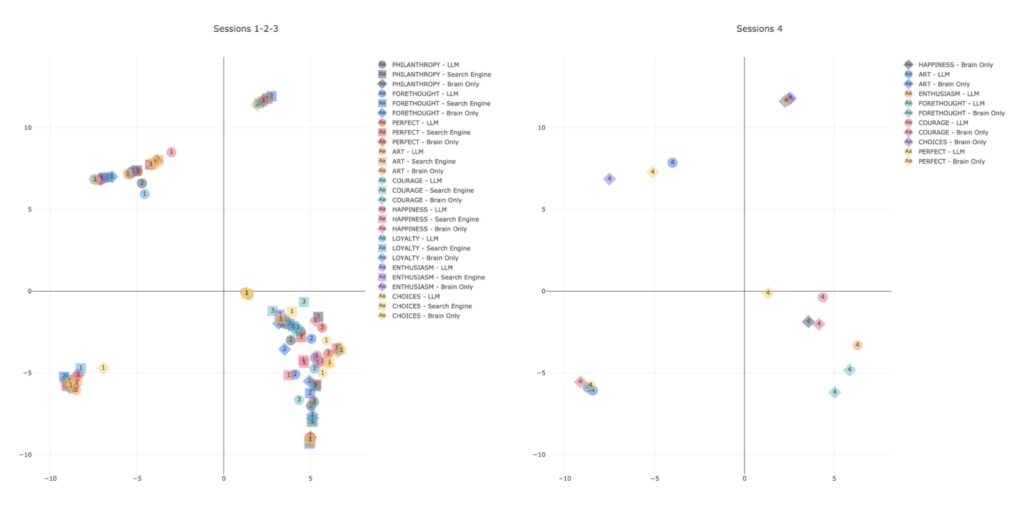

Researchers recruited 54 participants to write essays over four sessions spanning four months. Participants were divided into three groups: LLM (AI-assisted), Search Engine (traditional internet search), and Brain-only (no external tools). Electroencephalography (EEG) recorded their neural activity as they wrote. The results provide a sobering look at how our brains react to automation.

Neural Connectivity: Use It or Lose It

The study’s most striking finding is that cognitive activity scales down in direct relation to external tool use.

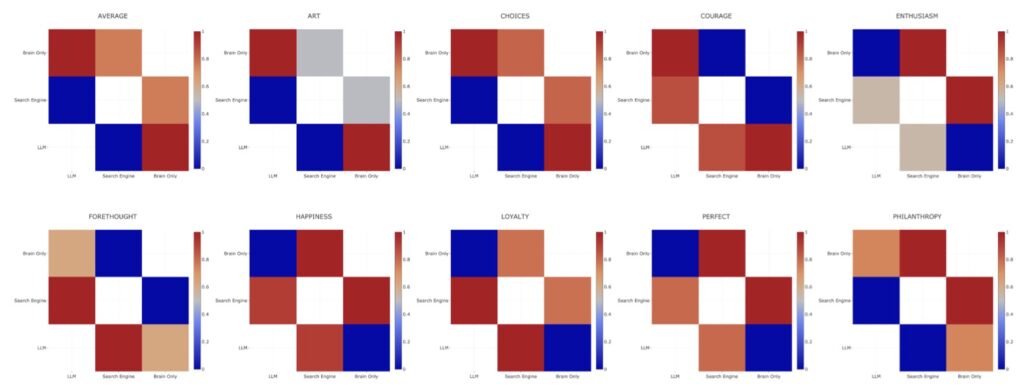

When analyzing brain connectivity, the Brain-only group exhibited the strongest, most wide-ranging neural networks. These participants were deeply engaged in macro-level structuring and micro-level syntax generation. The Search Engine group sat in the middle, showing moderate engagement.

In stark contrast, the LLM group displayed the weakest overall neural coupling of the three groups. By offloading the “thinking” to the algorithm, these users bypassed the heavy lifting of neural processing. The EEG data suggest that the brain does markedly less work when the AI takes the wheel, raising concerns about long-term skill retention.

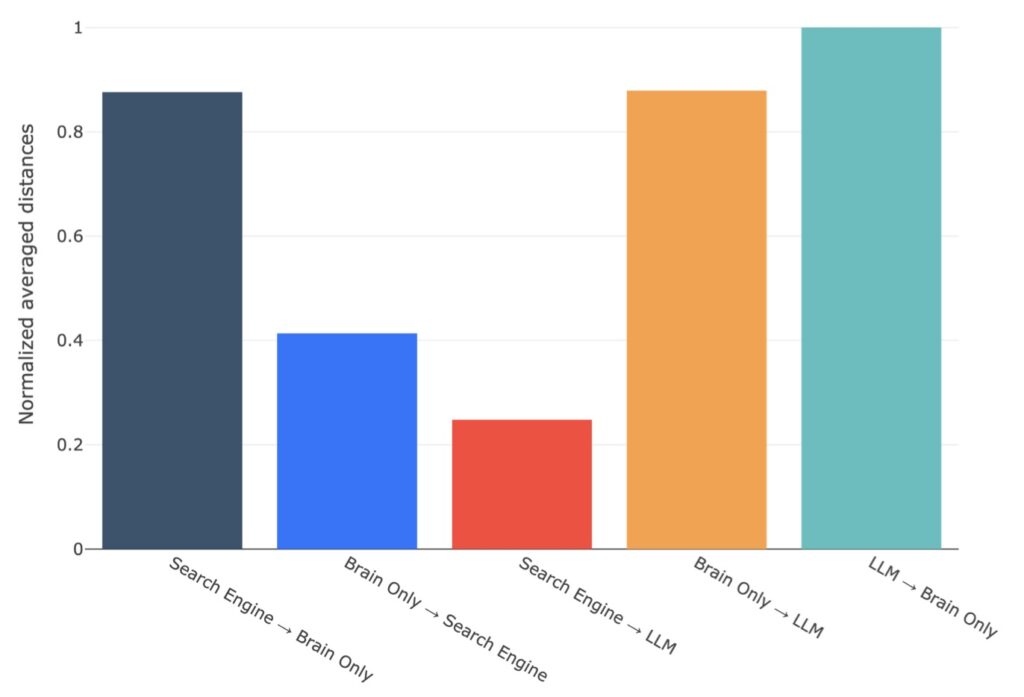

The “Switch”: Evidence of Cognitive Debt

The true impact of AI reliance became apparent in Session 4, where researchers swapped the conditions. LLM users were forced to write with no tools (LLM-to-Brain), and Brain-only users were given access to AI (Brain-to-LLM).

- The LLM-to-Brain struggle: Participants who had relied on AI during the previous three sessions showed reduced alpha and beta connectivity when forced to write on their own. They exhibited signs of “under-engagement,” which the researchers interpret as accumulated cognitive debt. Their brains struggled to coordinate the neural resources needed to complete the task effectively.

- The Brain-to-LLM boost: Conversely, when unassisted writers were given AI tools, their brains lit up. They showed widespread activation in occipito-parietal and prefrontal areas. Rather than passively accepting AI output, these users engaged in “critical rewriting,” using the AI as a tool for refinement rather than generation.

The Echo Chamber and Loss of Ownership

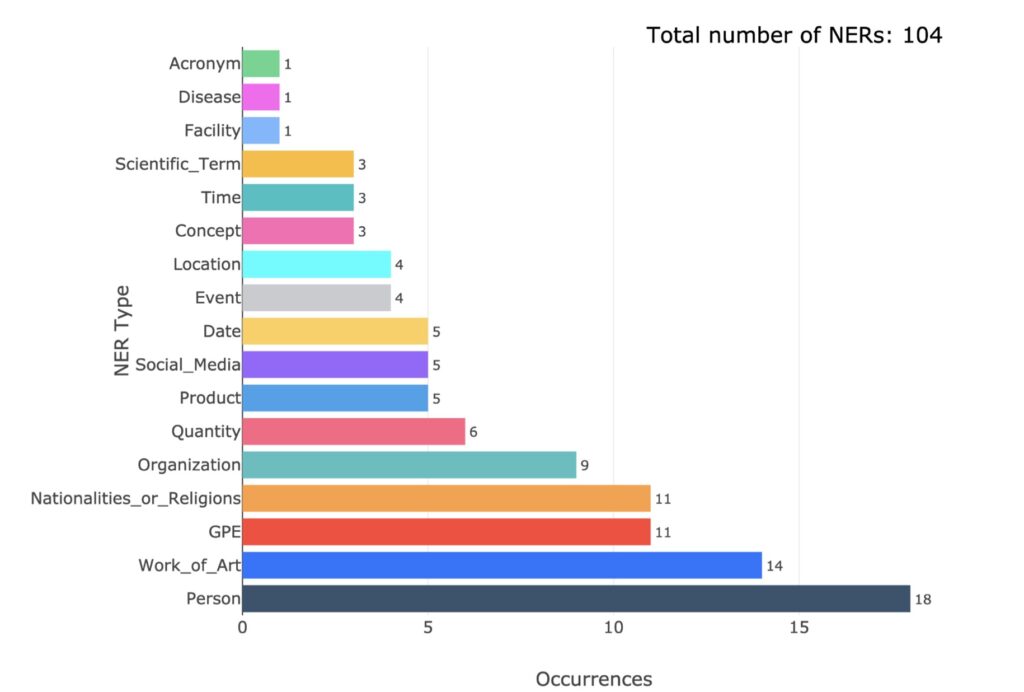

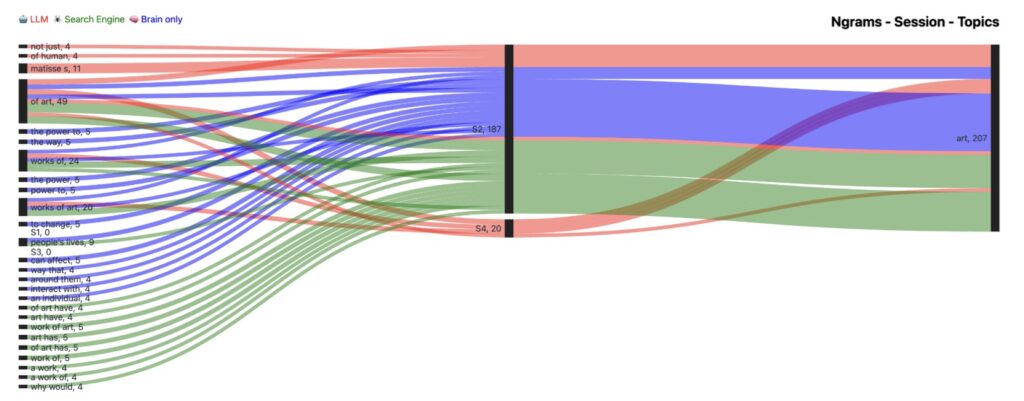

Beyond neural signals, the study analyzed the essays using Natural Language Processing (NLP) and human evaluation. The results pointed to a “homogeneity of thought” among AI users.

Essays produced by the LLM group lacked diversity. The NLP analysis found that n-gram patterns and topic ontologies were strikingly similar within the group, and human teachers picked up on the same uniformity. Furthermore, LLM users reported the lowest self-perceived ownership of their work. In post-session interviews, many could not accurately quote the essay they had “written” just minutes prior.

While the Search Engine group maintained strong ownership (though less than the Brain-only group), the LLM users fell into a passive role. They became curators of an algorithmic “echo chamber,” accepting probabilistic answers based on training data rather than formulating independent arguments.
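To make the idea of n-gram homogeneity concrete, here is a minimal Python sketch. It is not the study's actual NLP pipeline. It assumes a simple measure, the average Jaccard overlap of word bigrams between every pair of essays in a group, and the function names and toy sentences are invented for illustration.

```python
from itertools import combinations


def ngrams(text: str, n: int = 2) -> set:
    """Return the set of word n-grams in a text (lowercased, whitespace-split)."""
    words = text.lower().split()
    return {tuple(words[i:i + n]) for i in range(len(words) - n + 1)}


def jaccard(a: set, b: set) -> float:
    """Jaccard similarity: shared items divided by all distinct items."""
    if not a and not b:
        return 0.0
    return len(a & b) / len(a | b)


def mean_pairwise_overlap(essays: list, n: int = 2) -> float:
    """Average Jaccard similarity of n-gram sets over every pair of essays."""
    pairs = list(combinations(essays, 2))
    if not pairs:
        return 0.0
    total = sum(jaccard(ngrams(x, n), ngrams(y, n)) for x, y in pairs)
    return total / len(pairs)


# Made-up toy sentences for illustration; NOT data from the study.
similar_group = [
    "true happiness comes from helping other people",
    "true happiness comes from helping your community",
    "true happiness comes from helping other families",
]
varied_group = [
    "my grandmother taught me that joy is quiet",
    "wealth rarely buys lasting contentment for anyone",
    "true happiness comes from helping other people",
]

print(mean_pairwise_overlap(similar_group))  # higher: many shared bigrams
print(mean_pairwise_overlap(varied_group))   # lower: no shared bigrams
```

A group whose essays reuse the same phrasing scores closer to 1, while a group with varied wording scores closer to 0. Real analyses would typically add tokenization, stop-word handling, and larger n-gram sizes.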

Implications for the Future of Learning

While LLMs reduce the friction of answering questions, this study suggests they also diminish the inclination to critically evaluate information. Over the course of four months, LLM users consistently underperformed at neural, linguistic, and behavioral levels compared to their Brain-only counterparts.

As AI integrates further into education, we face a paradox: the tools that offer the greatest access to information may be the very things that degrade our ability to process it. The findings underscore a pressing need to treat AI not just as a productivity hack, but as a tool that requires active, critical engagement to prevent the erosion of human learning skills.