How Google’s Latest Breakthrough AI Model Is Transforming the Future of Assistance

- Agentic Revolution: Gemini 2.0 introduces a new era of AI agents capable of reasoning, planning, and acting on users’ behalf while maintaining human supervision.

- Enhanced Multimodality: With support for native image, video, and audio outputs alongside advanced tool integration, Gemini 2.0 redefines versatility in AI applications.

- Commitment to Responsible Innovation: Google prioritizes safety and ethics, integrating robust evaluation mechanisms to ensure responsible deployment of AI technology.

With the release of Gemini 2.0, Google is aiming for a significant leap in AI capability. Sundar Pichai, CEO of Google and Alphabet, frames the release as a step toward universally accessible AI assistants. Building on Gemini 1.0 and 1.5, Gemini 2.0 combines enhanced multimodal capabilities with agentic functionality, so that the model not only understands information but can also act on it to assist users.

What Sets Gemini 2.0 Apart?

Gemini 2.0 is more than an incremental model upgrade. Its core innovation is native multimodal output, including text-to-speech (TTS) in multiple languages and natively generated images. These features let users receive context-aware responses in the form that best fits the task, not just as text.

Key highlights include:

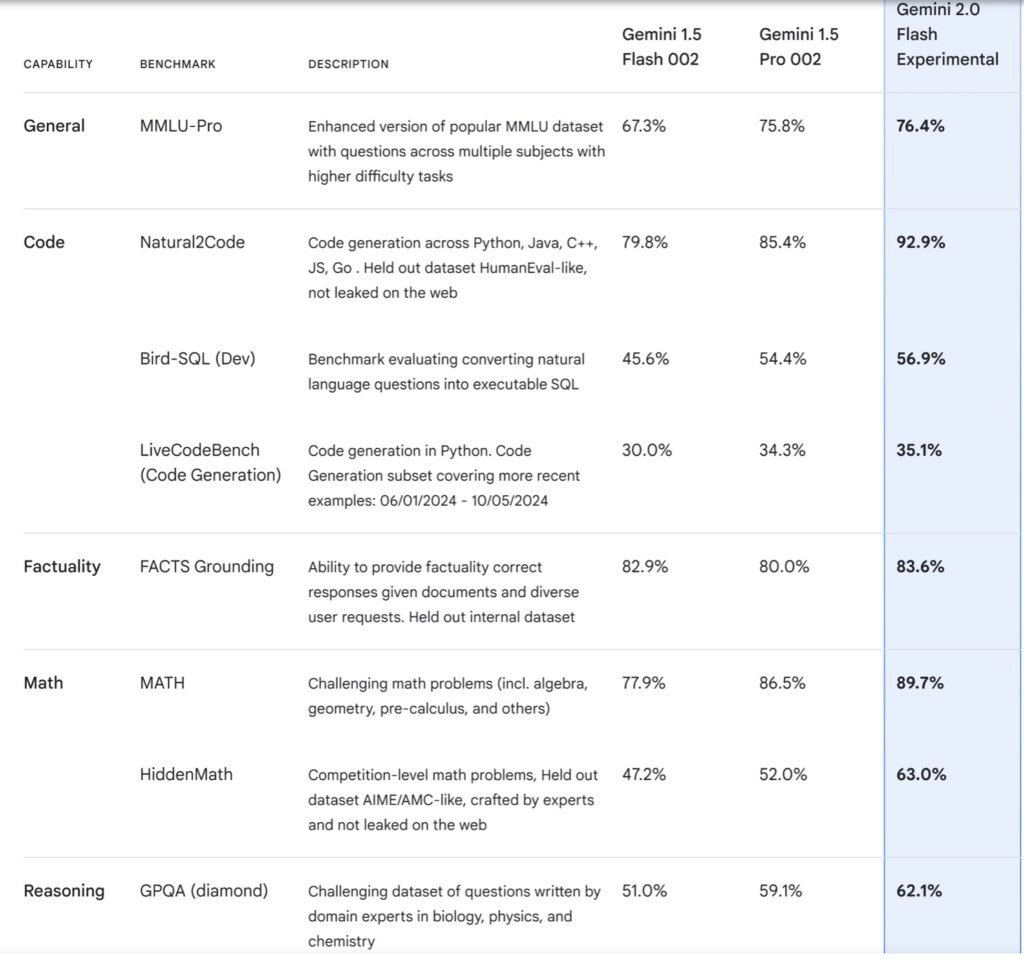

- Speed and Performance: Gemini 2.0 Flash scores higher on benchmarks than its predecessors while responding with lower latency.

- Tool Integration: Native support for tools such as Google Search, Lens, and Maps lets the model use them directly while completing a task.

- Advanced Reasoning: The Deep Research feature acts as a research assistant, exploring complex topics and compiling its findings for the user.

Agentic AI: The Future of Assistance

Gemini 2.0’s agentic design signifies a shift from passive information retrieval to proactive assistance. This capability is evident in prototypes like Project Astra and Project Mariner, which explore real-world and browser-based task management, respectively. With improved dialogue, memory, and planning capabilities, Gemini-based agents can execute multi-step instructions and adapt to diverse scenarios.

For developers, the Multimodal Live API and the AI-powered coding assistant Jules open new avenues for building interactive applications in fields ranging from software development to gaming.

Safety and Responsibility at the Core

Google says it has built safety mechanisms into Gemini 2.0 as part of its commitment to responsible innovation. The Responsibility and Safety Committee (RSC) evaluates risks and implements safeguards against misuse, including privacy controls, defenses against prompt injection, and training optimized for multimodal outputs. By involving trusted testers and conducting risk assessments, Google aims to keep Gemini 2.0 a secure and ethical tool for all users.

Building Towards the Future

The launch of Gemini 2.0 is more than a technological milestone; it offers a glimpse of AI’s evolving role in everyday life. From assisting users with advanced research to navigating virtual and real-world environments, Gemini 2.0 lays the groundwork for next-generation AI agents, and Google plans to expand its integration into Google products and beyond.

As Sundar Pichai remarked, “If Gemini 1.0 was about organizing and understanding information, Gemini 2.0 is about making it much more useful.” Indeed, this agentic era promises to revolutionize how we interact with technology and redefine the boundaries of human-AI collaboration.