From self-directed agent swarms to aesthetic-aware coding, Moonshot AI’s latest model sets a new standard for open-source intelligence.

- Global SOTA Dominance: Kimi K2.5 claims the throne as the most powerful open-source model, achieving top-tier scores on agentic benchmarks such as HLE (50.2% with tools) and on vision and coding benchmarks such as MMMU Pro (78.5%) and SWE-bench Verified (76.8%).

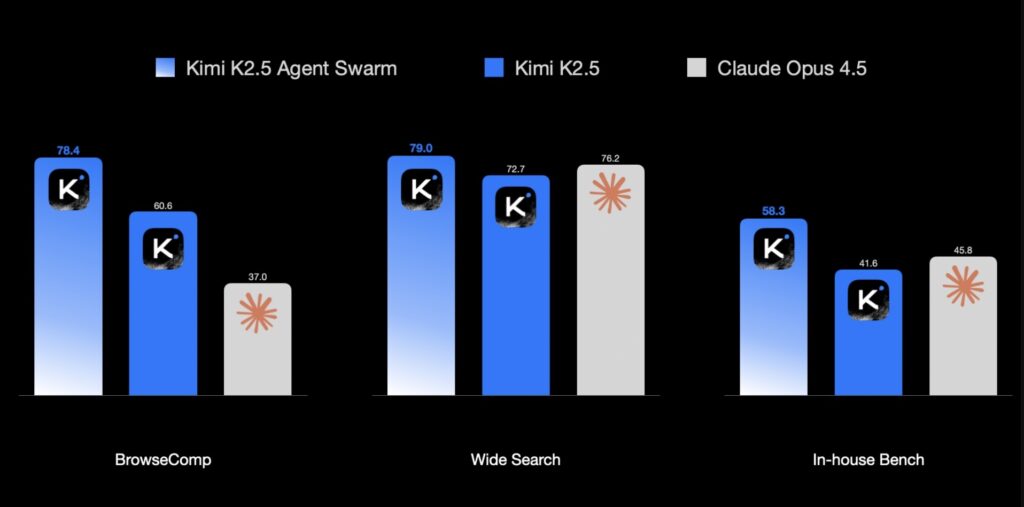

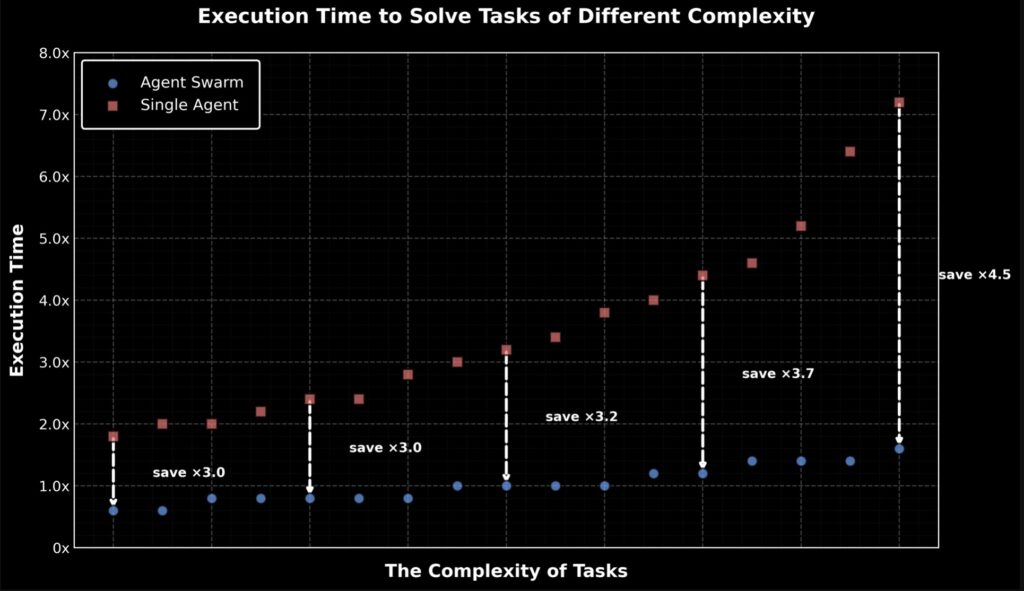

- Revolutionary Agent Swarms: K2.5 introduces a “Swarm” paradigm, trained with a novel reinforcement learning approach, in which it orchestrates up to 100 sub-agents in parallel and executes workflows 4.5× faster than a single-agent setup.

- Production-Grade Utility: Whether turning a video into a visually refined website with Kimi Code or working through 100-page financial documents, K2.5 is built for real-world applications and integrates directly into terminals and IDEs.

Today marks a pivotal shift in the open-source landscape with the introduction of Kimi K2.5. Building on the foundation of Kimi K2, the new model is the result of continued pretraining on approximately 15 trillion mixed visual and text tokens. Unlike models that treat vision as an afterthought, K2.5 is natively multimodal. This design eliminates the traditional trade-off between vision and text capabilities: instead of competing, the two improve in unison.

Available immediately on Kimi.com and through the Kimi App and API, K2.5 introduces four distinct modes to cater to every need: Instant, Thinking, Agent, and the groundbreaking Agent Swarm (Beta).

Coding with Taste: A New Era of Visual Engineering

Kimi K2.5 isn’t just a coding assistant; it is a visual engineer with a sense of aesthetics. It stands as the strongest open-source model to date for coding, particularly in front-end development where visual context is king.

From Prompt to Motion

K2.5 can transform simple conversational prompts into complete, interactive front-end interfaces. It goes beyond static layouts to implement rich animations and scroll-triggered effects. Because it can reason over images and video, it lowers the barrier for users to express their intent visually rather than only in words.

A prime example of this capability is the “La Danse” demo, a showcase of autonomous visual debugging in which K2.5 was tasked with translating the aesthetic of Matisse’s La Danse into a webpage. Using Kimi Code, it generated the site, visually inspected its own output against the artwork, and iteratively debugged the code to match the artistic style, all without human intervention.
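The generate, inspect, and revise cycle described above can be sketched as a bounded refinement loop. This is a minimal illustration under stated assumptions, not Kimi Code's implementation: `refine`, `generate`, `render`, `score`, and `revise` are hypothetical stand-ins for the model writing code, a screenshot of the rendered page, a visual comparison against the reference artwork, and a model-driven code edit. To keep it runnable, the toy version treats the "page" as a single background colour and the "artwork" as an illustrative target colour.

```python
import math
import re
from dataclasses import dataclass
from typing import Callable, List, Tuple

RGB = Tuple[int, int, int]


@dataclass
class Attempt:
    code: str     # the page source at this iteration
    score: float  # visual similarity to the reference, in [0, 1]


def refine(
    generate: Callable[[], str],
    render: Callable[[str], RGB],
    score: Callable[[RGB], float],
    revise: Callable[[str, RGB], str],
    threshold: float = 0.95,
    max_iters: int = 10,
) -> List[Attempt]:
    """Generate once, then render, score, and revise until the output is
    close enough to the reference or the revision budget runs out."""
    code = generate()
    history: List[Attempt] = []
    for step in range(max_iters + 1):
        observed = render(code)   # "look" at the current output
        s = score(observed)       # compare it against the reference
        history.append(Attempt(code, s))
        if s >= threshold or step == max_iters:
            break
        code = revise(code, observed)  # edit the code based on what was seen
    return history


# --- Hypothetical toy stand-ins: the "page" is one background colour. ---
TARGET: RGB = (200, 80, 40)  # illustrative colour, not sampled from the painting
MAX_DIST = math.dist((0, 0, 0), (255, 255, 255))


def toy_generate() -> str:
    return "body { background: rgb(255, 255, 255); }"


def toy_render(css: str) -> RGB:
    r, g, b = (int(x) for x in re.findall(r"\d+", css)[:3])
    return (r, g, b)


def toy_score(rgb: RGB) -> float:
    return 1.0 - math.dist(rgb, TARGET) / MAX_DIST


def toy_revise(css: str, observed: RGB) -> str:
    # Move each channel about halfway toward the target colour.
    r, g, b = (o + (t - o) // 2 for o, t in zip(observed, TARGET))
    return f"body {{ background: rgb({r}, {g}, {b}); }}"


if __name__ == "__main__":
    for i, attempt in enumerate(refine(toy_generate, toy_render, toy_score, toy_revise)):
        print(i, round(attempt.score, 3), attempt.code)
```

The explicit `max_iters` cap matters as much as the threshold: an autonomous loop with no stopping rule can keep "fixing" a page that never converges.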

Kimi Code: The Terminal Companion

For professional software engineers, K2.5 pairs with Kimi Code, a new open-source product that integrates directly into your terminal and into popular IDEs such as VS Code, Cursor, and Zed. Kimi Code supports image and video inputs and automatically discovers your existing skills and MCP (Model Context Protocol) servers and migrates them into your environment. Internal evaluations on the Kimi Code Bench show consistent improvements across debugging, refactoring, and testing tasks.

The Hive Mind: Scaling Out with Agent Swarms

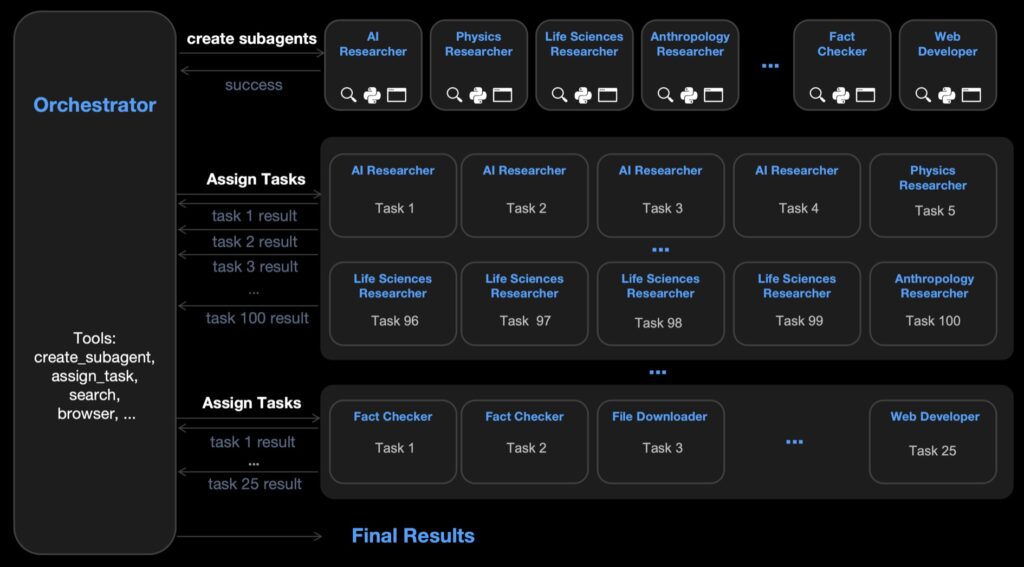

Perhaps the most futuristic leap in K2.5 is the shift from single-agent scaling to Self-Directed Agent Swarms. Available as a research preview for high-tier users, this feature allows K2.5 to automatically orchestrate a swarm of up to 100 sub-agents working in parallel.

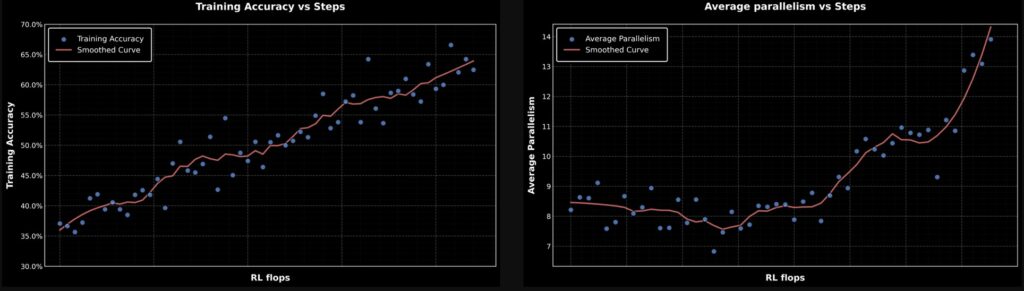

Parallel-Agent Reinforcement Learning (PARL)

Traditional agents work sequentially, which is slow and prone to bottlenecks. K2.5 is trained with a novel method called PARL, which uses a trainable orchestrator agent to decompose complex tasks into parallelizable subtasks. Frozen sub-agents then execute these subtasks concurrently, coordinating up to 1,500 tool calls in a single effort.
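At inference time, the orchestrator and sub-agent split described above resembles a classic fan-out/fan-in pattern. The sketch below is a hypothetical illustration, not Moonshot's PARL code: `decompose` and `run_subagent` are placeholders for the trainable orchestrator and the frozen sub-agents, `CALLS_PER_SUBTASK` is invented for the toy, and the shared 1,500-call budget is an assumption modelled on the figure above. An `asyncio.Semaphore` caps concurrency at 100 to mirror the sub-agent limit.

```python
import asyncio
from typing import Dict, List, Tuple

MAX_SUBAGENTS = 100      # concurrency cap, mirroring the 100-sub-agent limit
TOOL_CALL_BUDGET = 1500  # assumed shared cap on tool calls for one task
CALLS_PER_SUBTASK = 3    # hypothetical: each toy sub-agent wants 3 tool calls


def decompose(task: str, n: int) -> List[str]:
    """Hypothetical orchestrator step: split a task into n independent subtasks."""
    return [f"{task} / part {i}" for i in range(n)]


async def run_subagent(subtask: str, sem: asyncio.Semaphore, stats: Dict[str, int]) -> int:
    """Hypothetical frozen sub-agent; returns how many tool calls it made."""
    async with sem:  # at most MAX_SUBAGENTS of these bodies run at once
        stats["active"] += 1
        stats["peak"] = max(stats["peak"], stats["active"])
        calls = 0
        try:
            for _ in range(CALLS_PER_SUBTASK):
                # No await between the check and the decrement, so on a
                # single-threaded event loop this pair cannot interleave.
                if stats["budget"] <= 0:
                    break  # shared budget exhausted: stop early
                stats["budget"] -= 1
                calls += 1
                await asyncio.sleep(0.001)  # stand-in for tool-call latency
            return calls
        finally:
            stats["active"] -= 1


async def orchestrate(task: str, n_subtasks: int) -> Tuple[List[int], Dict[str, int]]:
    sem = asyncio.Semaphore(MAX_SUBAGENTS)
    stats = {"active": 0, "peak": 0, "budget": TOOL_CALL_BUDGET}
    # Fan out: every subtask is scheduled immediately, the semaphore throttles
    # them, and gather() collects the results in input order (fan in).
    results = await asyncio.gather(
        *(run_subagent(s, sem, stats) for s in decompose(task, n_subtasks))
    )
    return list(results), stats


if __name__ == "__main__":
    results, stats = asyncio.run(orchestrate("survey the literature", 600))
    print(sum(results), stats["peak"])
```

With 600 toy subtasks wanting 1,800 calls in total, the shared budget stops the swarm at exactly 1,500, and no more than 100 sub-agents are ever active at once.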

To achieve this, the model was trained with a staged reward system that prevents “serial collapse,” a failure mode in which the orchestrator defaults to working alone instead of delegating to sub-agents.

- Early Training: Incentivizes the instantiation of sub-agents and concurrent execution.

- Late Training: Shifts focus to end-to-end task quality (R_quality), ensuring that parallelism actually contributes to success.
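One way to read the two-phase schedule above is as a weighted sum whose parallelism term is annealed away during training: R = λ(t)·R_parallel + R_quality. The post does not give the actual PARL reward terms or schedule, so everything below is an assumption for illustration: `lam` decays linearly from 1 to 0, and the hypothetical `parallel_bonus` simply measures what fraction of the 100-sub-agent capacity a rollout used.

```python
def parallel_weight(step: int, anneal_steps: int) -> float:
    """Assumed schedule: the parallelism incentive decays linearly from 1 to 0."""
    if anneal_steps <= 0:
        return 0.0
    return max(0.0, 1.0 - step / anneal_steps)


def parallel_bonus(num_subagents: int, max_subagents: int = 100) -> float:
    """Hypothetical shaping term: fraction of the sub-agent capacity used."""
    return min(num_subagents, max_subagents) / max_subagents


def reward(step: int, anneal_steps: int, num_subagents: int, r_quality: float) -> float:
    """R = lam(step) * R_parallel + R_quality, with lam annealed to zero."""
    lam = parallel_weight(step, anneal_steps)
    return lam * parallel_bonus(num_subagents) + r_quality


if __name__ == "__main__":
    # A "serial" rollout (1 sub-agent, better quality) vs a "parallel" one.
    for step in (0, 500, 1000):
        serial = reward(step, 1000, num_subagents=1, r_quality=0.6)
        parallel = reward(step, 1000, num_subagents=50, r_quality=0.5)
        print(step, round(serial, 3), round(parallel, 3))
```

At step 0 the parallel rollout scores 1.0 against the serial rollout's 0.61, so the policy is pushed away from serial collapse; by step 1000 only R_quality remains, and the serial rollout wins 0.6 to 0.5. Under this schedule, parallelism survives late training only where it actually raises task quality.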

The result? A 4.5× reduction in execution time and an 80% reduction in end-to-end runtime on internal evaluations. The system uses a “Critical Steps” metric, inspired by critical path analysis in computing, to ensure that spawning more agents genuinely shortens the workflow.
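The post does not define “Critical Steps” precisely. Borrowing directly from critical path analysis, a minimal sketch treats a workflow as a DAG of steps and compares total work (what a single agent must execute serially) with the longest dependency chain (what even an unlimited swarm must still wait for). The workflow, step names, and unit costs below are hypothetical, and this is an illustration of the general idea rather than Moonshot's metric.

```python
from graphlib import TopologicalSorter
from typing import Dict, Set


def critical_path_length(deps: Dict[str, Set[str]], cost: Dict[str, int]) -> int:
    """Longest cost-weighted path through a DAG of workflow steps.

    deps maps each step to the set of steps it must wait for.
    """
    finish: Dict[str, int] = {}
    for node in TopologicalSorter(deps).static_order():
        # A step can start only after all of its dependencies have finished.
        start = max((finish[d] for d in deps.get(node, ())), default=0)
        finish[node] = start + cost[node]
    return max(finish.values(), default=0)


# Hypothetical research workflow: plan, four independent searches, merge, report.
deps = {
    "plan": set(),
    **{f"search_{c}": {"plan"} for c in "abcd"},
    "merge": {f"search_{c}" for c in "abcd"},
    "report": {"merge"},
}
cost = {"plan": 1, "merge": 1, "report": 2, **{f"search_{c}": 3 for c in "abcd"}}

total_work = sum(cost.values())              # steps a single agent runs serially
critical = critical_path_length(deps, cost)  # steps a swarm must still wait for

if __name__ == "__main__":
    print(total_work, critical, round(total_work / critical, 2))
```

Here four sub-agents can cut the workflow from 16 steps to 7; a fifth would not help, because nothing left on the critical path can be split further. For a purely sequential chain the two numbers coincide, which is exactly the case where spawning more agents buys nothing.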

Office Productivity: The Expert Analyst

Kimi K2.5 brings agentic intelligence to the high-density world of knowledge work. It is designed to handle the heavy lifting of modern office tasks, from constructing financial models with Pivot Tables to writing LaTeX equations in PDFs.

On the AI Office Benchmark, K2.5 demonstrated a 59.3% improvement over K2 Thinking. It can digest massive inputs (reasoning over 10,000-word papers or 100-page documents) and produce expert-level outputs across spreadsheets, slide decks, and reports. This is not just a chatbot; it is a comprehensive analyst capable of multi-step, production-grade workflows.

Technical Dominance: The Benchmark Breakdown

Kimi K2.5’s claims are backed by rigorous testing against top-tier competitors like DeepSeek-V3.2, Claude Opus 4.5, and GPT-5.2.

- Agentic Benchmarks: On the HLE (Humanity’s Last Exam) full set, K2.5 scored 50.2% (with tools), showcasing superior reasoning in both text and image contexts. In BrowseComp, which tests agentic web browsing, it achieved 74.9%.

- Vision & Coding: The model achieved Open-Source SOTA on MMMU Pro (78.5%) and VideoMMMU (86.6%), confirming its visual reasoning prowess. In coding, it secured 76.8% on SWE-bench Verified.

- Scientific Reasoning: K2.5 also performed strongly on GPQA-Diamond and AIME 2025, where it was evaluated with a high compute budget to allow maximum reasoning depth.

A Step Toward AGI

Grounded in massive-scale vision-text pre-training and armed with the ability to self-organize into swarms, Kimi K2.5 represents a meaningful step toward Artificial General Intelligence (AGI) for the open-source community. By mastering real-world constraints—whether in code, visual design, or complex data analysis—Kimi K2.5 is redefining what is possible in the daily workflow of developers and knowledge workers alike.