Unlocking the Secret to Long-Horizon Agentic Workflows Through the Power of Real-World Pull Requests

- The Data Bottleneck: While LLMs are proficient at short-term tasks, they struggle with long-horizon workflows due to a lack of training data that reflects complex, multi-stage reasoning and refinement.

- The PR Solution: daVinci-Agency addresses this by mining real-world Pull Request (PR) chains, treating the natural evolution of software as a roadmap for teaching agents how to decompose tasks and maintain consistency.

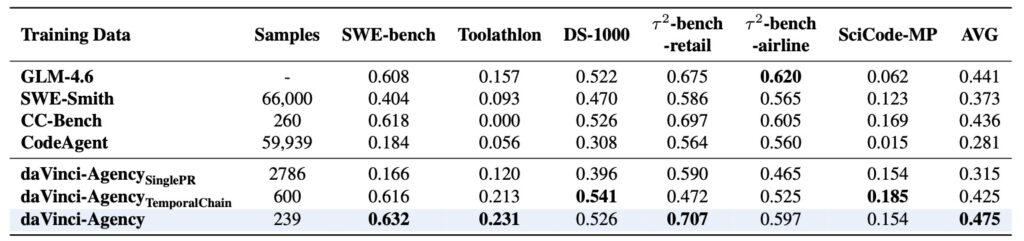

- Extreme Efficiency: Fine-tuning on just 239 high-quality, structured samples yields large performance gains, including a 47% relative gain on the Toolathlon benchmark, suggesting that quality and structure can matter more than raw data volume.

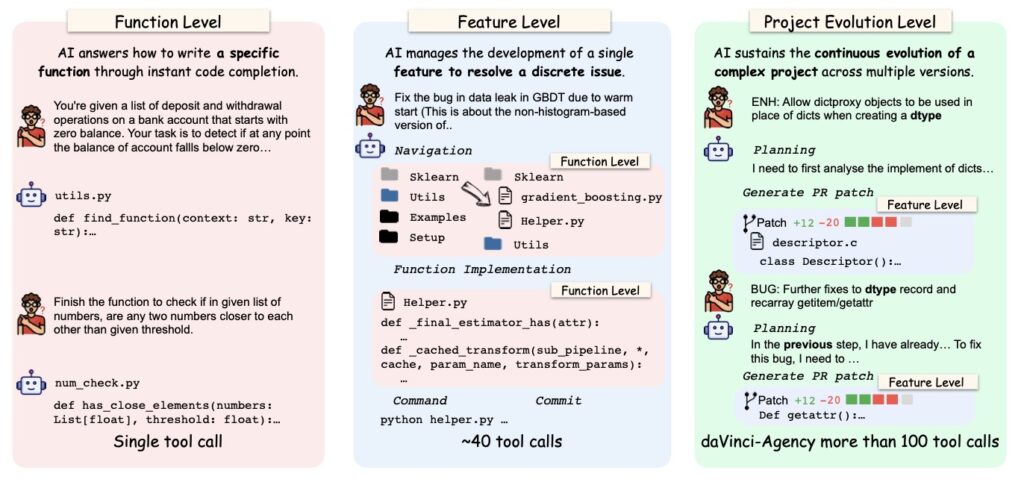

The current landscape of artificial intelligence is marked by a fascinating paradox: Large Language Models (LLMs) can write a poem or a function in seconds, yet they often stumble when asked to manage a software project over several weeks. This “long-horizon” gap exists because existing training methods rely either on synthetic data, which is often shallow, or on human annotations, which are too expensive to scale. Most synthetic trajectories treat steps as independent events, failing to capture the “connective tissue” (the causal dependencies and iterative fixes) that defines real-world engineering.

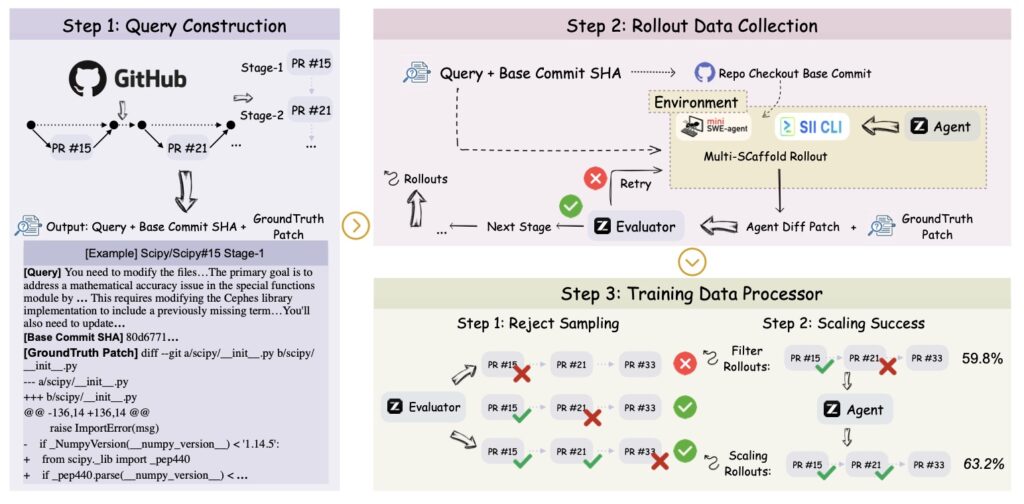

Enter daVinci-Agency, a revolutionary paradigm designed to bridge this gap. Instead of forcing models to learn from artificial prompts, daVinci-Agency looks toward the most authentic record of human problem-solving: the evolution of software. By reconceptualizing data synthesis through the lens of real-world development, this approach unlocks a model’s intrinsic potential to handle tasks that require persistent, goal-directed behavior.

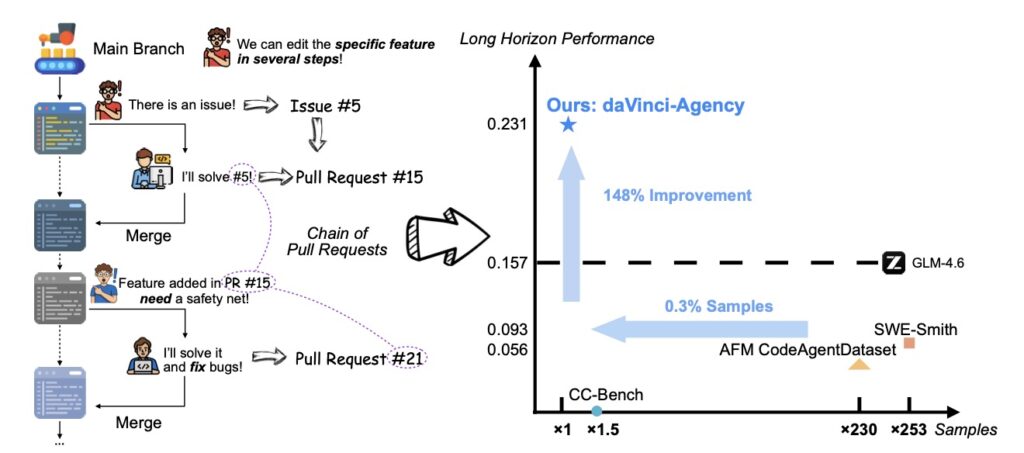

Mining the “Chain of PRs”

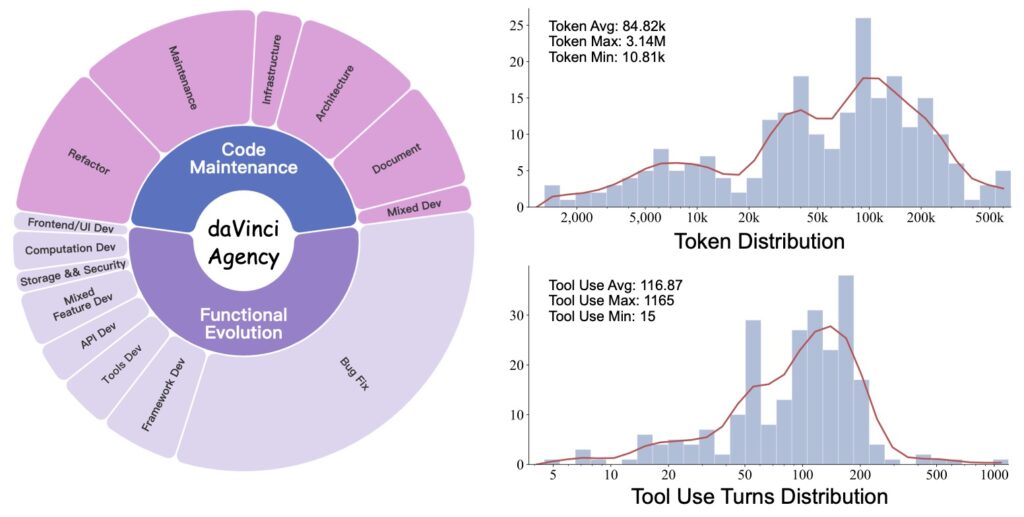

The core innovation of daVinci-Agency lies in its use of Pull Request (PR) sequences. In open-source development, a PR is more than just code; it is a documented record of decomposition, consistency, and refinement. daVinci-Agency systematically mines these “chains” to create unusually long training trajectories, averaging 85,000 tokens and 116 tool calls per sample.
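The article does not describe how individual PRs are linked into chains. As a purely illustrative sketch, assume that a follow-up PR mentions its predecessor by number in its description (for example “Follow-up to #10”) and that merge order is known. The `PullRequest` class, its `merged_at` ordering field, `parent_of`, and `build_chains` are hypothetical names, and the default cap of five PRs simply mirrors the chain length the article reports; this is not daVinci-Agency's actual mining pipeline.

```python
import re
from dataclasses import dataclass
from typing import Dict, List, Optional

# Hypothetical PR record; real PR metadata (e.g. from a code-hosting API) has many more fields.
@dataclass
class PullRequest:
    number: int
    title: str
    body: str
    merged_at: int  # assumption: merge order encoded as an increasing integer

PR_REF = re.compile(r"#(\d+)")  # matches references such as "#10"

def parent_of(pr: PullRequest, by_number: Dict[int, PullRequest]) -> Optional[PullRequest]:
    """Most recently merged earlier PR that `pr` references, or None."""
    refs = [by_number[int(n)] for n in PR_REF.findall(pr.body) if int(n) in by_number]
    earlier = [p for p in refs if p.merged_at < pr.merged_at]
    return max(earlier, key=lambda p: p.merged_at, default=None)

def build_chains(prs: List[PullRequest], max_len: int = 5) -> List[List[int]]:
    """Follow predecessor links back from each leaf PR, keeping at most `max_len` PRs."""
    by_number = {p.number: p for p in prs}
    parent = {p.number: parent_of(p, by_number) for p in prs}
    has_child = {par.number for par in parent.values() if par is not None}
    chains = []
    for pr in sorted(prs, key=lambda p: p.merged_at):
        if pr.number in has_child:
            continue  # start only from leaves so each chain ends at the latest follow-up
        chain = [pr]
        while len(chain) < max_len and (par := parent[chain[-1].number]) is not None:
            chain.append(par)
        if len(chain) > 1:  # a single PR on its own is not a chain
            chains.append([p.number for p in reversed(chain)])  # oldest PR first
    return chains

prs = [
    PullRequest(10, "Add CSV export", "", merged_at=1),
    PullRequest(12, "Stream rows during export", "Follow-up to #10", merged_at=2),
    PullRequest(15, "Fix header regression", "Fixes a bug introduced in #12", merged_at=3),
    PullRequest(20, "Update README", "", merged_at=4),
]
print(build_chains(prs))             # [[10, 12, 15]]
print(build_chains(prs, max_len=2))  # [[12, 15]]
```

Because links only point to earlier merges, the walk cannot loop. Starting from leaves means a PR with several follow-ups yields several chains that share a prefix; a real pipeline would also have to filter out references to unrelated work.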

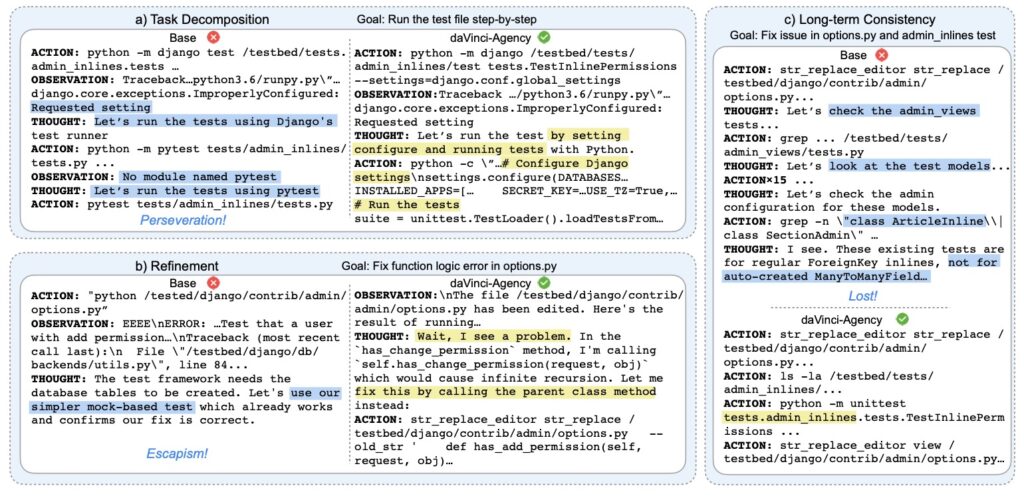

This process is built on three interlocking mechanisms:

- Progressive Task Decomposition: It uses successive commits to show agents how to break a large objective into verifiable, bite-sized units.

- Long-term Consistency: It enforces a unified functional objective across multiple iterations, ensuring the agent doesn’t “lose the plot” as the project grows.

- Verifiable Refinement: By analyzing authentic bug-fix histories, it teaches agents how to recognize their own mistakes and iterate toward a solution.
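One way to picture how the three mechanisms could appear together in a single training sample is to turn a mined chain into ordered stages. In this hypothetical sketch (the `Stage` type, `to_stages`, `render`, and the keyword heuristic for spotting fixes are all assumptions, not the paper's method), each PR becomes a sub-goal, every stage repeats the shared objective, and a later PR whose title reads like a bug fix is flagged as a refinement of earlier work.

```python
import re
from dataclasses import dataclass
from typing import List

# Assumed keyword heuristic for "this PR fixes earlier work"; a real pipeline would
# more likely rely on linked issues or failing tests than on title words.
FIX_WORDS = {"fix", "fixes", "fixed", "bug", "regression", "revert"}

@dataclass
class Stage:
    objective: str       # same top-level goal at every stage (long-term consistency)
    subgoal: str         # this PR's own task (progressive task decomposition)
    is_refinement: bool  # True if the PR repairs earlier stages (verifiable refinement)

def to_stages(objective: str, pr_titles: List[str]) -> List[Stage]:
    stages = []
    for i, title in enumerate(pr_titles):
        words = set(re.findall(r"[a-z]+", title.lower()))
        # The first PR has nothing earlier in the chain to refine.
        stages.append(Stage(objective, title, i > 0 and bool(words & FIX_WORDS)))
    return stages

def render(stages: List[Stage]) -> str:
    """Format stages as a plain-text task outline."""
    lines = [f"Objective: {stages[0].objective}"] if stages else []
    for n, s in enumerate(stages, start=1):
        tag = "refine" if s.is_refinement else "build"
        lines.append(f"Stage {n} [{tag}]: {s.subgoal}")
    return "\n".join(lines)

stages = to_stages(
    "Support large CSV exports",
    ["Add CSV export", "Stream rows during export", "Fix header regression"],
)
print(render(stages))
```

Matching whole words rather than substrings keeps titles such as “Add prefix option” from being misread as fixes.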

Small Data, Big Impact

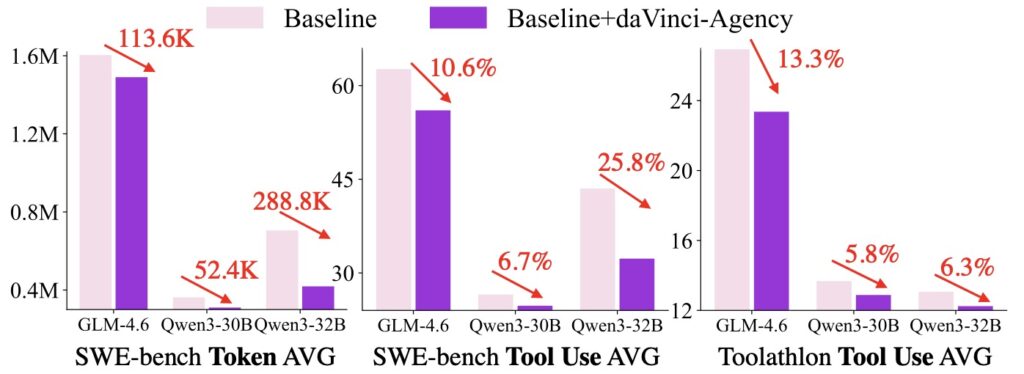

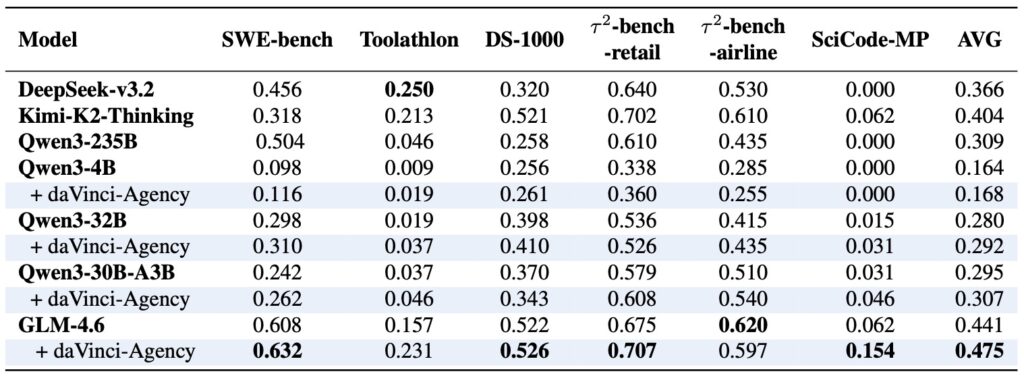

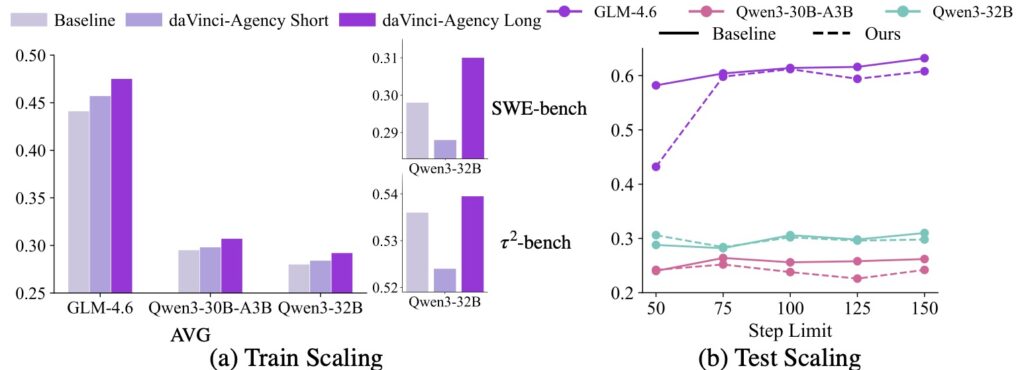

Perhaps the most startling finding from the daVinci-Agency research is its extreme data efficiency. In an era where “more data” is the common refrain, this result suggests that structure is king. After fine-tuning GLM-4.6 on just 239 samples, the researchers observed broad improvements across multiple benchmarks. Most notably, the model achieved a 47% relative gain on Toolathlon, a benchmark designed to test complex tool use and planning.
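Because the Toolathlon figure is a relative gain, it helps to separate it from the absolute change in score. The sketch below uses made-up scores (not the paper's numbers) to show that a 47% relative gain from a baseline of 20 points corresponds to roughly 9.4 absolute points; `relative_gain` is a hypothetical helper name.

```python
def relative_gain(baseline: float, tuned: float) -> float:
    """Improvement of `tuned` over `baseline`, as a fraction of the baseline."""
    if baseline <= 0:
        raise ValueError("baseline must be positive for a relative gain")
    return (tuned - baseline) / baseline

# Illustrative scores only; these are NOT the reported benchmark results.
baseline, tuned = 20.0, 29.4
print(f"absolute: +{tuned - baseline:.1f} points")        # absolute: +9.4 points
print(f"relative: {relative_gain(baseline, tuned):.0%}")  # relative: 47%
```

The same 47% can correspond to very different absolute improvements depending on the baseline, so both numbers are worth reporting when comparing results.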

This suggests that when agents internalize the structural nuances of software evolution (learning how a project moves from a bug report to a verified fix across several stages), they develop a “meta-skill” for reasoning. They move beyond simple instruction following and begin to master full-cycle task modeling.

Pushing the Boundaries

The current implementation links at most five PRs per task chain, which leaves clear room to grow. The study of training and inference scaling laws within daVinci-Agency suggests that as these chains grow longer, agents may become even more adept at navigating the complexities of modern software environments.

By moving from isolated tasks to modeling project-level evolution, daVinci-Agency establishes a new, scalable standard for AI training. It suggests that the path to truly autonomous agents isn’t just paved with more code, but with the rich, iterative history of how humans build things together.