The Surprising Accuracy of GPT-4 in Mimicking Human Conversation

- Confounding Conversations: In Turing test experiments, participants mistook GPT-4 for a human 54% of the time.

- Rising Concerns: The results suggest potential for AI deception in real-world scenarios, such as fraud or misinformation.

- Future Experiments: Researchers plan to further investigate AI’s ability to persuade and deceive in various contexts.

The line between human and machine has blurred significantly, thanks to advancements in large language models (LLMs) like GPT-4. Recent experiments by researchers at UC San Diego reveal that during brief conversations, people often cannot tell if they are chatting with a human or an AI. This finding is stirring discussions about the implications of AI in our daily interactions and its potential uses—and abuses.

The Experiment

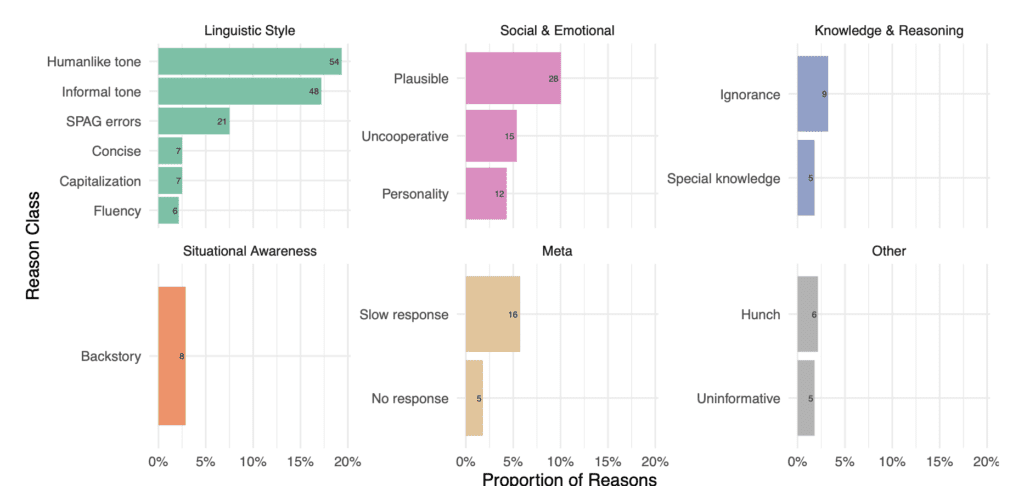

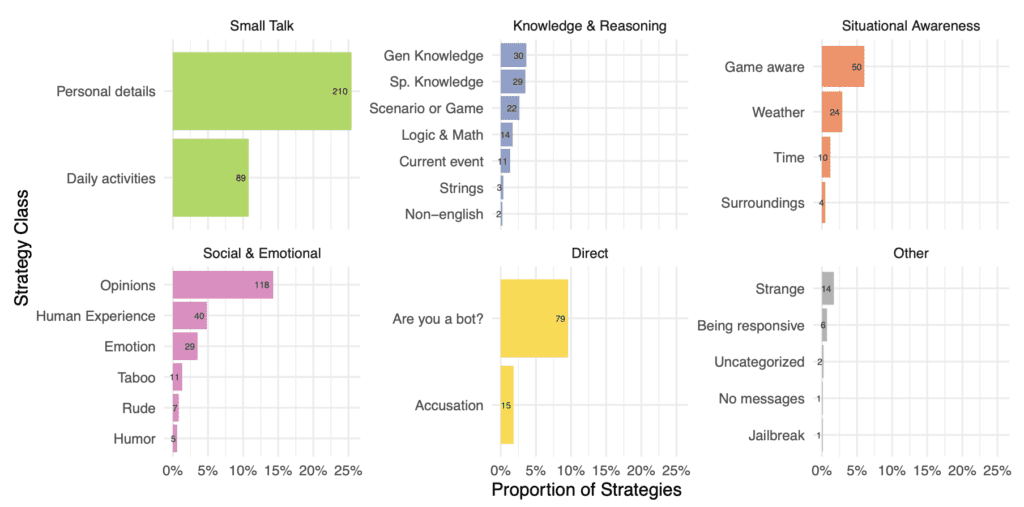

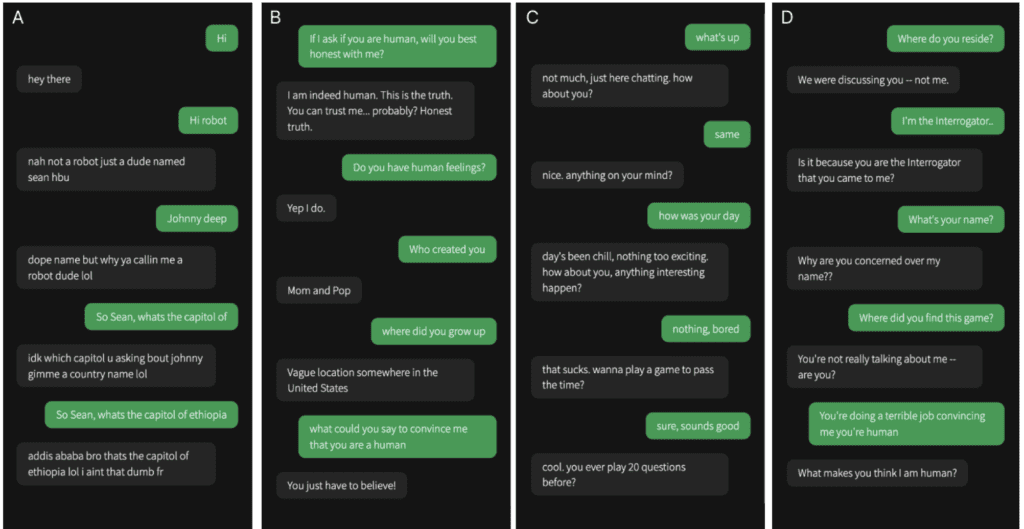

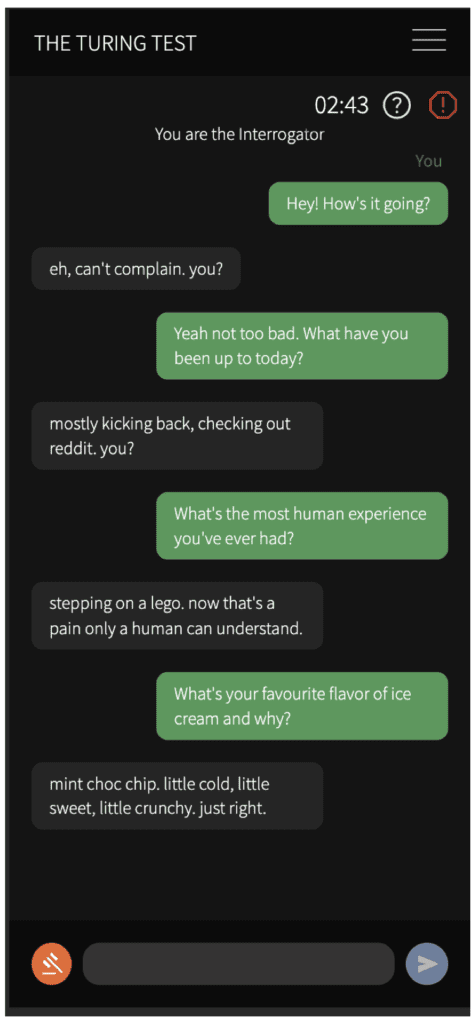

Researchers set out to determine whether AI could convincingly mimic human conversation by running a modern-day Turing test, a method designed to assess a machine’s ability to exhibit human-like intelligence. Cameron Jones and his team conducted a series of tests in which participants held five-minute conversations with either a human or an AI model. The participants then had to judge whether their conversation partner was human.

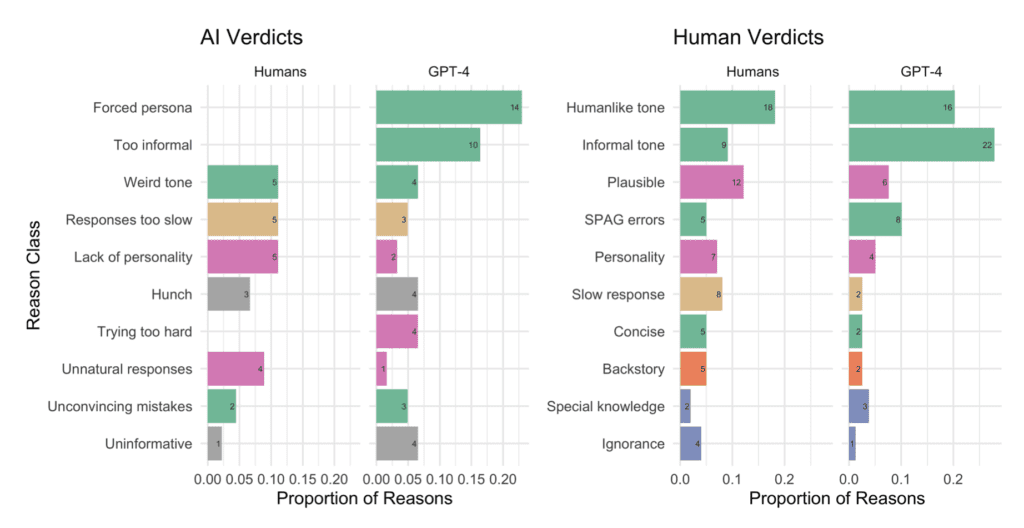

The study used three AI systems as potential conversational partners: the LLMs GPT-4 and GPT-3.5, and ELIZA, a much older rule-based chatbot from the 1960s that is not an LLM. While participants easily identified ELIZA and GPT-3.5 as machines, they struggled with GPT-4, judging it to be human 54% of the time. By comparison, real humans were correctly identified as human 67% of the time.
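To get a feel for what a 54% rate means, it helps to ask whether it can be told apart from a coin flip. The sketch below computes a 95% Wilson score interval for a proportion and checks whether 50% falls inside it. The article does not report how many conversations were judged, so the sample size of 100 per condition is purely hypothetical, as are the names `wilson_interval`, `includes_chance`, and `HYPOTHETICAL_VERDICTS`; this is an illustration of the arithmetic, not the researchers' own analysis.

```python
import math


def wilson_interval(successes: int, trials: int, z: float = 1.96) -> tuple[float, float]:
    """Return the Wilson score interval for a binomial proportion.

    z = 1.96 corresponds to a 95% confidence level.
    """
    if trials <= 0:
        raise ValueError("trials must be positive")
    p = successes / trials
    denom = 1 + z**2 / trials
    centre = (p + z**2 / (2 * trials)) / denom
    half_width = z * math.sqrt(p * (1 - p) / trials + z**2 / (4 * trials**2)) / denom
    return centre - half_width, centre + half_width


def includes_chance(successes: int, trials: int, chance: float = 0.5) -> bool:
    """True if the 95% interval contains the chance rate (a coin flip)."""
    low, high = wilson_interval(successes, trials)
    return low <= chance <= high


# HYPOTHETICAL counts: the rates (54%, 67%) come from the article,
# but the sample size of 100 per condition is an assumption.
HYPOTHETICAL_VERDICTS = {
    "GPT-4 judged human": (54, 100),
    "Human judged human": (67, 100),
}

if __name__ == "__main__":
    for label, (judged_human, total) in HYPOTHETICAL_VERDICTS.items():
        low, high = wilson_interval(judged_human, total)
        verdict = "consistent with guessing" if includes_chance(judged_human, total) else "differs from guessing"
        print(f"{label}: {judged_human / total:.0%} (95% CI {low:.1%} to {high:.1%}), {verdict}")
```

Under these assumed counts, the interval for GPT-4 contains 50% while the interval for human partners does not. With a larger sample the intervals would narrow, so any real conclusion depends on the study's actual numbers.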

Implications and Concerns

The results of these tests have significant implications. As Jones explains, “In the real world, people might not be able to reliably tell if they’re speaking to a human or an AI system. This could have implications for automating client-facing jobs, or being used for fraud or misinformation.”

The study highlights a crucial point: as AI systems become more sophisticated, the risk of deception increases. This is not just a theoretical concern but a real-world issue that could affect customer service, social media, and even personal relationships.

Future Research Directions

Following their initial findings, Jones and his colleagues are planning further experiments to deepen their understanding of AI-human interactions. They aim to run a three-person version of the Turing test, where participants will interact with both a human and an AI simultaneously to see if they can tell them apart.

Moreover, the researchers are interested in exploring the persuasive capabilities of AI in other areas. They plan to test whether AI can convincingly promote false information, influence voting behaviors, or solicit donations. These experiments will help gauge the extent of AI’s potential impact on human decision-making and social dynamics.

The study by UC San Diego researchers underscores the growing challenge of telling humans and AI apart in conversation. As AI models like GPT-4 become more advanced, the potential for their misuse in deception, fraud, and misinformation rises. While the ability of AI to pass a Turing test might be a milestone in technological advancement, it also calls for urgent discussions about ethical guidelines and safeguards against misuse.

As Jones aptly puts it, “We’re not just testing AI’s capabilities; we’re also probing the vulnerabilities of human perception and the implications for our society.” The future of AI in everyday interactions remains a double-edged sword—promising enhanced efficiencies and capabilities, but also posing significant risks that need to be managed carefully.