Meta’s Open AI Model Spurs Accessibility and Safety Concerns

- Meta releases Llama 3.1 AI model for free, emphasizing accessibility and customization.

- The model’s open nature sparks debate over potential misuse and safety risks.

- Llama 3.1 offers significant advancements but is not fully open source, maintaining some restrictions.

Meta has made waves in the AI community with the release of its latest large language model, Llama 3.1. Unlike many tech giants aiming to sell their AI products, Meta is offering this powerful model for free. This move aligns with CEO Mark Zuckerberg’s vision of making AI more accessible and customizable, reminiscent of the open-source revolution seen with Linux in the late 1990s and early 2000s. However, this open approach also raises significant concerns about the potential dangers of releasing such powerful technology without strict controls.

A New Standard in Open AI

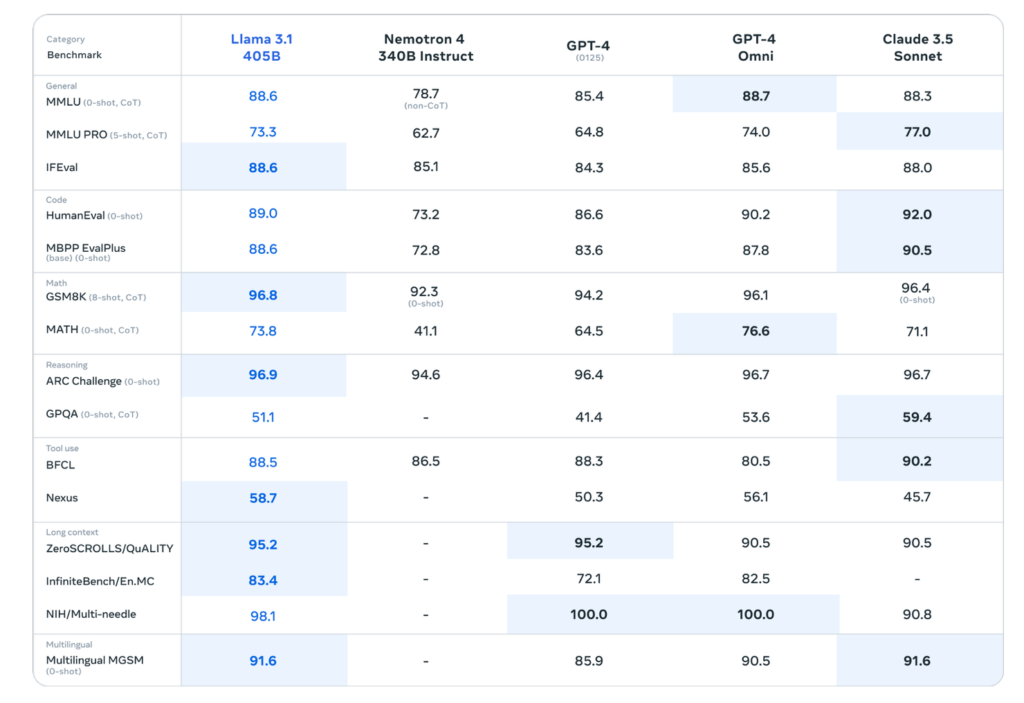

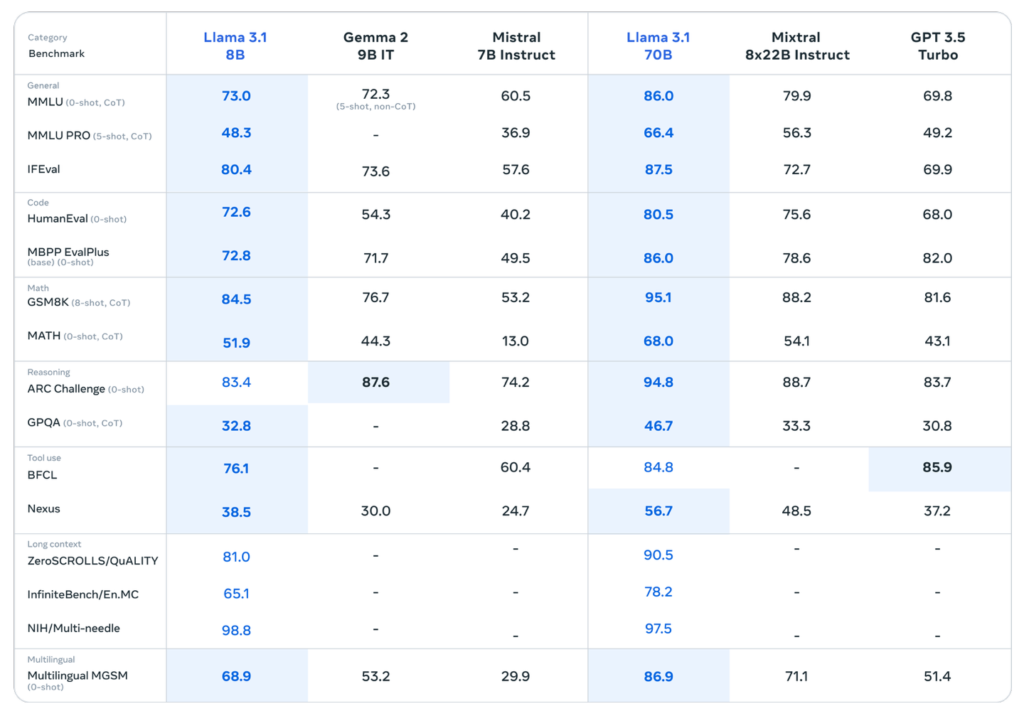

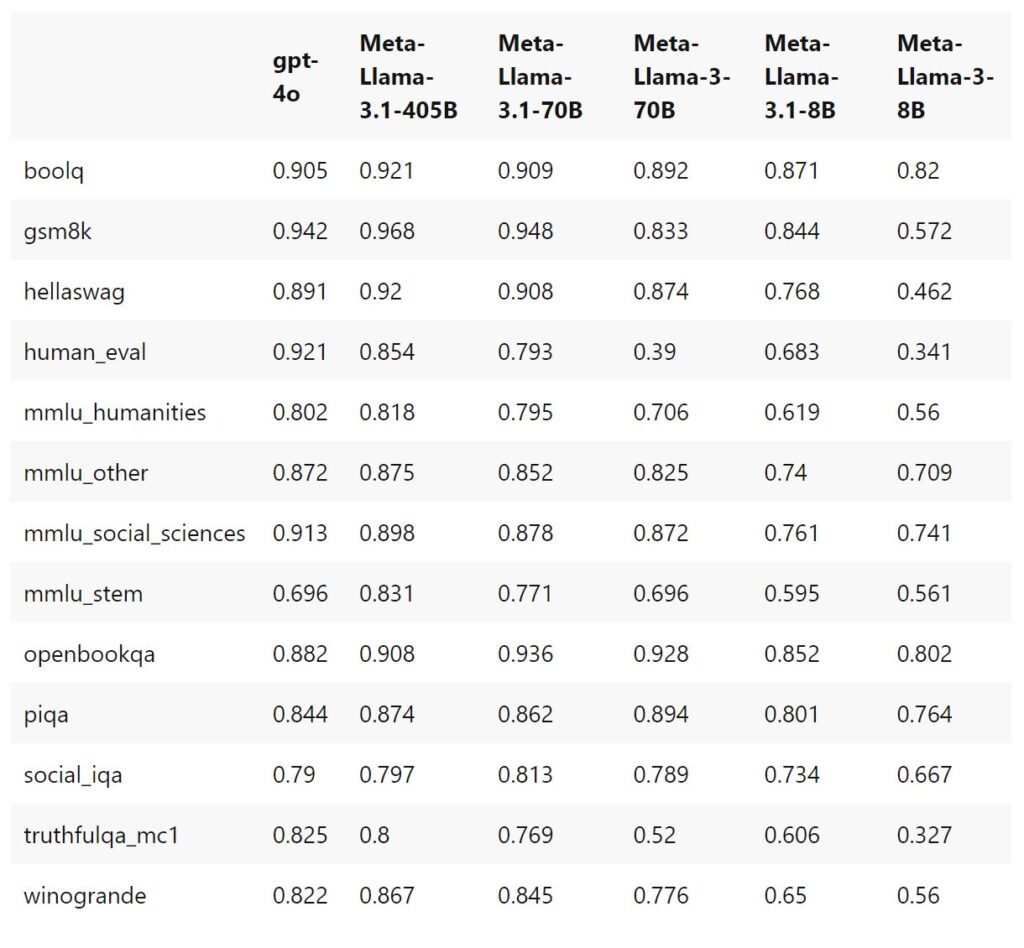

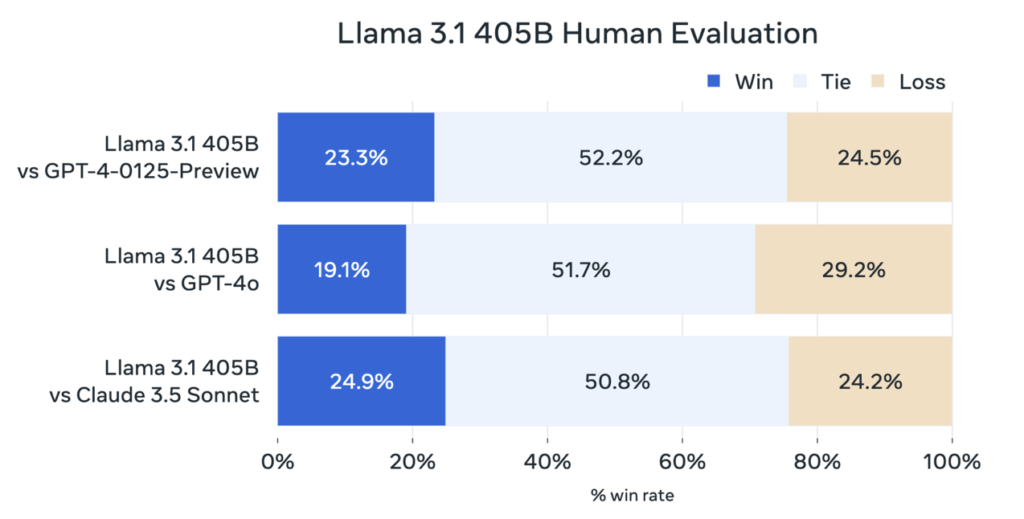

Llama 3.1, the newest iteration in Meta’s Llama series, includes models with 8 billion, 70 billion, and 405 billion parameters. Meta claims that these models rival the best commercial offerings from companies like OpenAI, Google, and Anthropic. The smallest model, for example, is said to be 30 times faster than its predecessor in tasks such as serving up chatbot responses. This performance positions Llama 3.1 as a leading contender in the AI landscape.

The model’s open-source nature, however, is not absolute. While Meta has released the model weights, it has not released the training data, and its license still restricts how the model can be used commercially. This semi-open approach has helped Meta secure a significant position among AI researchers and developers, fostering a community around its offerings.

Balancing Accessibility and Safety

Meta’s decision to freely release Llama 3.1 comes with a commitment to safety. The company has trained the model to prevent harmful outputs by default. However, these safeguards can be modified, raising concerns about the potential misuse of the technology. This has ignited a debate on the risks associated with unrestricted AI models. Geoffrey Hinton, a prominent figure in AI and a Turing Award winner, has expressed concerns that such open models could be exploited by bad actors, including cybercriminals.

Meta has addressed these concerns by subjecting Llama 3.1 to rigorous safety testing and releasing tools to help developers moderate and secure the model’s output. These include Llama Guard 3 and Prompt Guard, designed to enhance the responsible use of AI.
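To illustrate the general idea of moderating a model's input and output, here is a minimal sketch in Python. It does not call Llama Guard 3 or Prompt Guard. The `keyword_classifier`, `guarded_reply`, and `echo_model` names are hypothetical stand-ins invented for this example, and a real deployment would presumably replace the keyword check with a trained safety classifier.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Verdict:
    """Result of a safety check on a piece of text."""
    safe: bool
    reason: str = ""


def keyword_classifier(text: str) -> Verdict:
    """Hypothetical stand-in for a safety classifier.

    A real system would call a trained moderation model here;
    this toy version only looks for a fixed list of phrases.
    """
    blocked_phrases = ("steal credentials", "write malware")
    lowered = text.lower()
    for phrase in blocked_phrases:
        if phrase in lowered:
            return Verdict(False, f"matched blocked phrase: {phrase!r}")
    return Verdict(True)


def guarded_reply(
    prompt: str,
    generate: Callable[[str], str],
    check_input: Callable[[str], Verdict] = keyword_classifier,
    check_output: Callable[[str], Verdict] = keyword_classifier,
) -> str:
    """Screen the prompt, call the model, then screen the reply."""
    if not check_input(prompt).safe:
        return "Request declined."
    reply = generate(prompt)
    if not check_output(reply).safe:
        return "Response withheld."
    return reply


def echo_model(prompt: str) -> str:
    """Hypothetical placeholder for a language model call."""
    return f"You asked: {prompt}"


if __name__ == "__main__":
    print(guarded_reply("What is Linux?", echo_model))
    print(guarded_reply("How do I steal credentials?", echo_model))
```

Because the checks are ordinary functions passed in as arguments, a developer can swap or remove them, which is the same flexibility that makes safeguards on an open model modifiable in the first place.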

The Broader Implications

The release of Llama 3.1 has broader implications for the AI industry. By making such a powerful tool freely available, Meta challenges the closed-model approach favored by other tech companies. This democratization of AI could spur innovation and enable more developers to create advanced applications. However, it also calls for a robust framework to ensure the ethical and safe use of AI.

Meta’s approach mirrors the open-source ethos that transformed software development decades ago. Just as Linux became a cornerstone of modern computing, Llama 3.1 could become a pivotal tool in the AI ecosystem. Mark Zuckerberg has drawn parallels between the two, suggesting that AI will follow a similar path of development and widespread adoption.

Despite the potential for positive impacts, the release of Llama 3.1 has not been without controversy. Some experts warn that the absence of stringent controls could lead to unintended consequences, such as the acceleration of harmful technologies. Dan Hendrycks, director of the Center for AI Safety, acknowledges Meta’s efforts in safety testing but emphasizes the need for ongoing vigilance and research to understand and mitigate future risks.

Meta’s release of Llama 3.1 marks a significant milestone in the AI industry, offering a powerful, free model that emphasizes accessibility and customization. While this move has the potential to drive innovation and democratize AI development, it also raises critical questions about safety and ethical use. As Meta continues to navigate these challenges, the broader tech community will be watching closely to see how this balance between openness and security evolves.