Revolutionizing AI Inference with Speed, Scale, and Cost-Effectiveness

- SambaNova’s breakthrough technology delivers 3X the speed and 5X the efficiency of GPUs, shrinking DeepSeek-R1 671B’s hardware requirements to a single rack.

- By year-end, SambaNova will scale to 100X the current global capacity for DeepSeek-R1, giving developers and enterprises unprecedented access to the model.

- Hosted securely in U.S. data centers, SambaNova’s platform unlocks real-time, cost-effective inference for the full DeepSeek-R1 model, transforming AI adoption.

Artificial intelligence is entering a new era, and SambaNova Systems is leading the charge with its groundbreaking advancements in AI inference. The company has unveiled the fastest and most efficient deployment of DeepSeek-R1 671B, a state-of-the-art reasoning model, on its proprietary platform. By addressing the long-standing challenges of cost and inefficiency in AI inference, SambaNova is setting a new standard for developers and enterprises alike.

Breaking Barriers: The Fastest and Most Efficient DeepSeek-R1

DeepSeek-R1, a 671-billion-parameter model, has been a game-changer in AI, reducing training costs by 10X. However, its adoption has been limited by the high computational demands of inference, which make production deployments costly and inefficient. SambaNova has solved this problem with its SN40L Reconfigurable Dataflow Unit (RDU) chips, which deliver unmatched performance.

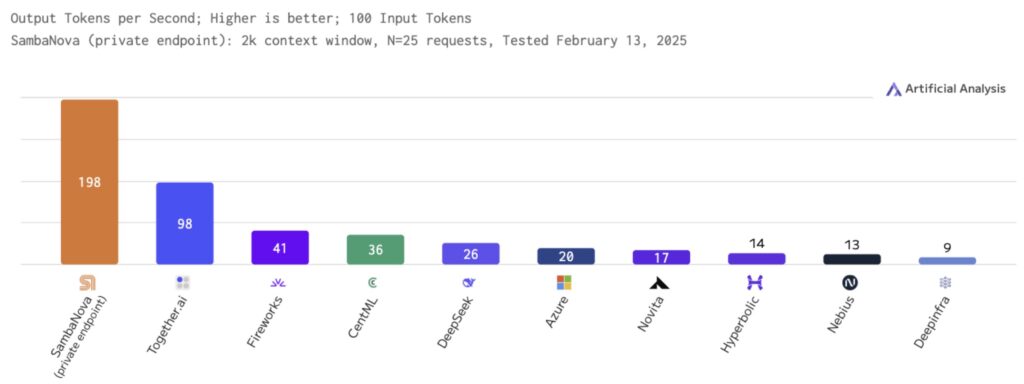

SambaNova reduces the hardware required to serve DeepSeek-R1 from 40 racks of GPUs to a single rack of 16 RDUs, while delivering 3X the speed and 5X the efficiency of the latest GPUs. As a result, the full DeepSeek-R1 model, not a distilled version, runs at 198 tokens per second per user, a speed the company says no other platform can match.

Rodrigo Liang, CEO of SambaNova, emphasized the significance of this breakthrough: “DeepSeek-R1 is one of the most advanced frontier AI models available, but its full potential has been limited by the inefficiency of GPUs. That changes today. We’re bringing the next major breakthrough—collapsing inference costs and reducing hardware requirements from 40 racks to just one—to offer DeepSeek-R1 at the fastest speeds, efficiently.”

AI Inference at Scale

SambaNova’s proprietary dataflow architecture and three-tier memory design are at the heart of this transformation. Unlike GPUs, which are constrained by memory and data communication bottlenecks, SambaNova’s RDUs are purpose-built for large-scale AI models like DeepSeek-R1. This gives the platform unprecedented efficiency, and SambaNova projects that a single rack will reach a total throughput of 20,000 tokens per second in the near future.
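To put the two figures side by side, a rough back-of-the-envelope estimate divides the projected rack throughput by the per-user speed quoted earlier. This is a sketch under a strong simplifying assumption: that aggregate throughput splits evenly across users who each still receive 198 tokens per second. Real serving systems batch requests, and per-user speed usually changes with load, so the result is an illustration rather than a SambaNova figure. The function name `concurrent_streams` is hypothetical.

```python
import math


def concurrent_streams(rack_tokens_per_second: float,
                       per_user_tokens_per_second: float) -> int:
    """Hypothetical helper: how many users a rack could serve at full per-user speed.

    Assumes aggregate throughput divides evenly among users, which real
    batched inference does not guarantee.
    """
    if per_user_tokens_per_second <= 0:
        raise ValueError("per_user_tokens_per_second must be positive")
    # Round down: a partial stream cannot run at full speed.
    return math.floor(rack_tokens_per_second / per_user_tokens_per_second)


# 20,000 tokens/s (projected rack throughput) and 198 tokens/s per user,
# both taken from the figures in this article.
print(concurrent_streams(20_000, 198))
```

Under these assumptions, one rack would sustain roughly 100 simultaneous users at the quoted per-user speed.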

Dr. Andrew Ng, a leading AI expert and founder of DeepLearning.AI, highlighted the importance of SambaNova’s achievement: “Reasoning models like R1 need to generate a lot of reasoning tokens to come up with a superior output, which makes them take longer than traditional LLMs. This makes speeding them up especially important. SambaNova’s high output speeds will support the use of reasoning models in latency-sensitive use cases.”

George Cameron, Co-Founder of Artificial Analysis, echoed this sentiment, stating that SambaNova’s deployment of DeepSeek-R1 is the fastest they have ever measured, making it a game-changer for developers and enterprises.
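Dr. Ng’s point about reasoning tokens can be made concrete with simple arithmetic: the time to stream a response grows linearly with the number of tokens generated. The sketch below uses the 198 tokens per second per user cited earlier; the token counts are hypothetical examples, and the calculation ignores prompt processing, time to first token, and network latency. The function name `generation_seconds` is illustrative only.

```python
def generation_seconds(output_tokens: int, tokens_per_second: float) -> float:
    """Hypothetical helper: decode time for one user's response.

    Ignores prefill, time to first token, and network overhead.
    """
    if tokens_per_second <= 0:
        raise ValueError("tokens_per_second must be positive")
    return output_tokens / tokens_per_second


# Hypothetical response lengths, including long reasoning traces.
for tokens in (500, 2_000, 8_000):
    print(f"{tokens:>5} tokens -> {generation_seconds(tokens, 198):.1f} s")
```

At 198 tokens per second, a 2,000-token reasoning trace takes about 10 seconds; at half that speed the same trace would take twice as long, which is why output speed matters most for latency-sensitive uses of reasoning models.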

Scaling to Meet Global Demand

SambaNova is not stopping at speed and efficiency. The company is rapidly scaling its capacity to meet the growing demand for DeepSeek-R1. By the end of the year, SambaNova will offer 100X the current global capacity for the model, positioning its platform as the most efficient enterprise solution for reasoning models.

Sumti Jairath, Chief Architect at SambaNova, explained, “DeepSeek-R1 is the perfect match for SambaNova’s three-tier memory architecture. With 671 billion parameters, R1 is the largest open-source large language model released to date, which means it needs a lot of memory to run. GPUs are memory constrained, but SambaNova’s unique dataflow architecture means we can run the model efficiently to achieve unprecedented throughput.”

This scalability will enable developers and enterprises to access the full power of DeepSeek-R1 for a wide range of applications, from autonomous coding agents to advanced reasoning tasks.

Transforming AI for Developers and Enterprises

SambaNova’s advancements are already making an impact. Companies like Blackbox AI, which serves over 10 million users, are leveraging SambaNova’s platform to accelerate their workflows. Robert Rizk, CEO of Blackbox AI, praised the partnership: “SambaNova’s chip capabilities are unmatched for serving the full DeepSeek-R1 671B model, which provides much better accuracy than any of the distilled versions. We couldn’t ask for a better partner to work with to serve millions of users.”

By offering real-time, cost-effective inference for the full DeepSeek-R1 model, SambaNova is unlocking new possibilities for developers and enterprises. The platform is hosted securely in U.S. data centers, ensuring privacy and reliability for its users.

Get Early Access to DeepSeek-R1

The full DeepSeek-R1 671B model is now available on SambaNova Cloud, with API access for select users. Developers and enterprises can experience the unmatched speed and efficiency of SambaNova’s platform by visiting cloud.sambanova.ai.

About SambaNova Systems

SambaNova Systems is a leader in generative AI, delivering purpose-built enterprise-scale AI platforms that redefine the boundaries of AI computing. With its innovative technology, SambaNova is empowering the next generation of AI applications, transforming industries, and enabling developers to achieve more than ever before.

SambaNova’s launch of the fastest DeepSeek-R1 671B model marks a pivotal moment in AI development. By solving the challenges of inference at scale, the company is not only making advanced AI accessible but also setting the stage for a future where reasoning models can power transformative applications across industries.