Bridging the Gap Between Artificial Intelligence and Real-World Physics for Dynamic Video Synthesis

- Introduction of MagicTime: A metamorphic time-lapse video generation model that learns physical knowledge from real-world time-lapse footage, making its generated videos more realistic and dynamic.

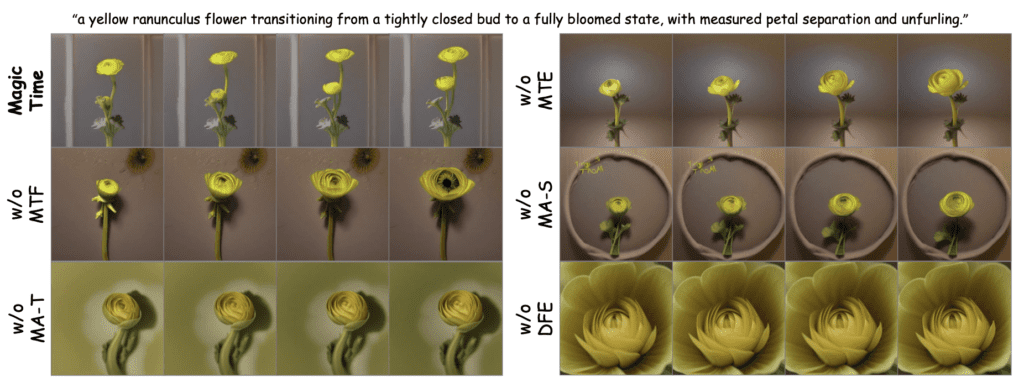

- Innovative Techniques for Enhanced Learning: MagicTime combines a MagicAdapter scheme with a Dynamic Frames Extraction strategy to better learn and simulate physical transformations over time, raising the bar for time-lapse video generation.

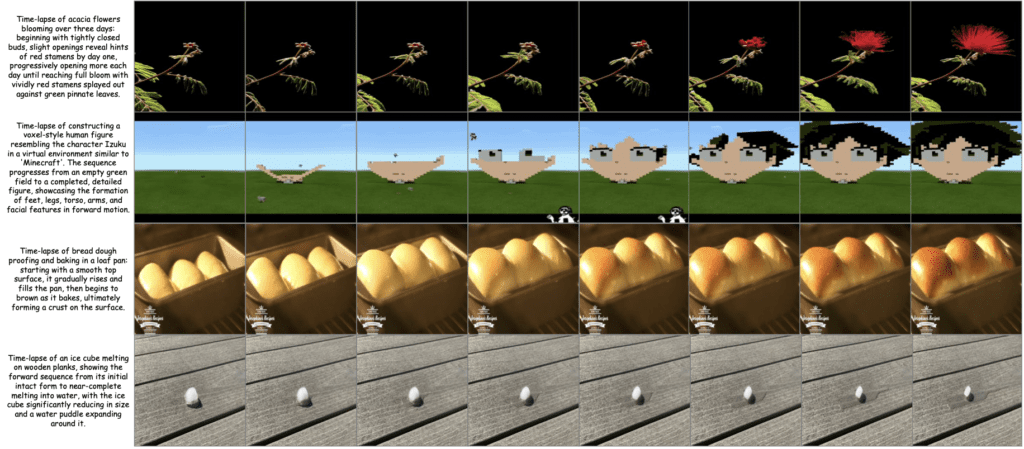

- Creation of ChronoMagic Dataset: A video-text dataset built specifically for metamorphic video generation, intended to support further work in this area.

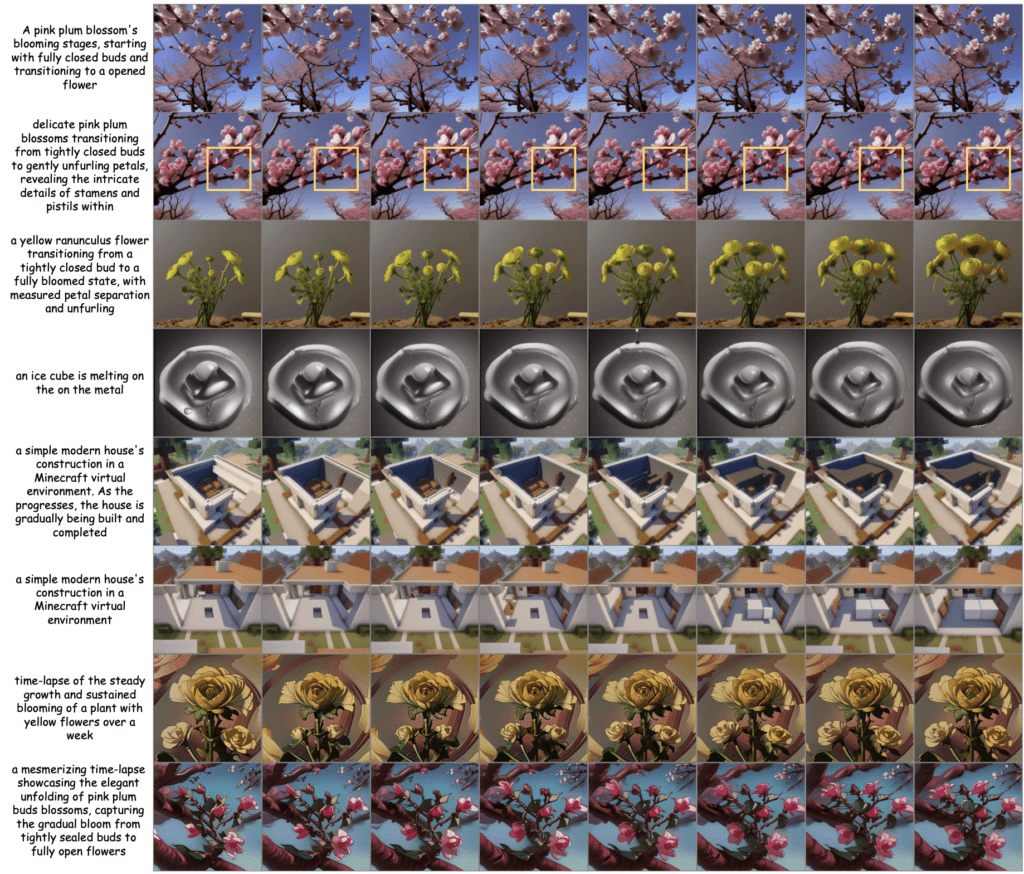

In the evolving landscape of Text-to-Video (T2V) generation, a new model promises to make generated videos noticeably more realistic and physically plausible. Dubbed “MagicTime,” it acts as a metamorphic simulator: it produces time-lapse videos that are not only visually compelling but also track real physical processes, such as growth, melting, or construction, far more faithfully than earlier T2V models.

At the core of MagicTime is its approach to learning physical change. Traditional T2V models struggle to encode complex physical knowledge, so their videos tend to show limited motion and little variation. MagicTime instead learns from real-world time-lapse footage. It does so through a MagicAdapter scheme that decouples spatial and temporal training. Because the two are trained separately, the model can concentrate on absorbing the physical knowledge contained in metamorphic videos, which makes the generated content more realistic and dynamic.
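The article does not describe MagicAdapter's internals. As a minimal sketch of what "decoupling spatial and temporal training" can look like in practice, assume the frozen base model carries separate spatial and temporal adapter modules, and each group is trained in its own stage while every other parameter stays frozen. The `Param` class, the `spatial_adapter`/`temporal_adapter` naming convention, and `configure_stage` are hypothetical illustrations, not MagicTime's actual code.

```python
from dataclasses import dataclass


@dataclass
class Param:
    """Hypothetical stand-in for a model parameter: a name plus a trainable flag."""
    name: str
    trainable: bool = False


def configure_stage(params: list[Param], stage: str) -> list[str]:
    """Freeze everything, then unfreeze only the adapter group for this stage.

    Assumed naming convention (illustrative only): spatial adapter parameters
    contain 'spatial_adapter' in their name, temporal ones 'temporal_adapter'.
    Returns the names of the parameters left trainable.
    """
    if stage not in ("spatial", "temporal"):
        raise ValueError(f"unknown stage: {stage!r}")
    key = f"{stage}_adapter"
    for p in params:
        # Base-model weights never match either key, so they stay frozen.
        p.trainable = key in p.name
    return [p.name for p in params if p.trainable]
```

Calling `configure_stage(params, "spatial")` and later `configure_stage(params, "temporal")` on the same parameter list yields two disjoint training phases, so appearance adaptation and motion learning never update the same weights at the same time.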

A second key innovation is the Dynamic Frames Extraction strategy, which lets MagicTime cope with the wide range of variation found in metamorphic time-lapse videos. By emphasizing frames that capture dramatic object transformations, the model absorbs a richer set of physical knowledge, further narrowing the gap between simulation and real-world phenomena.
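The article does not say exactly how frames are chosen. One reading, consistent with the goal of capturing the whole transformation, is to spread the sampled frames across the entire clip instead of taking a short run of consecutive frames. The sketch below contrasts the two under that assumption; `uniform_frame_indices`, `contiguous_frame_indices`, and `coverage` are hypothetical helper names.

```python
def uniform_frame_indices(total_frames: int, num_samples: int) -> list[int]:
    """Return num_samples frame indices spread evenly from the first to the last frame."""
    if num_samples < 1 or total_frames < num_samples:
        raise ValueError("need 1 <= num_samples <= total_frames")
    if num_samples == 1:
        return [0]
    # Integer arithmetic keeps indices exact and always includes frame 0 and the last frame.
    return [i * (total_frames - 1) // (num_samples - 1) for i in range(num_samples)]


def contiguous_frame_indices(start: int, num_samples: int) -> list[int]:
    """Baseline: a short run of consecutive frames beginning at `start`."""
    return list(range(start, start + num_samples))


def coverage(indices: list[int], total_frames: int) -> float:
    """Fraction of the clip's timeline spanned by the sampled frames."""
    return (max(indices) - min(indices)) / (total_frames - 1)
```

For a 100-frame clip and 5 samples, `uniform_frame_indices(100, 5)` returns `[0, 24, 49, 74, 99]` and spans the whole clip, while five consecutive frames from the start span only 4/99 of it. For a slow metamorphic process such as a flower opening, the consecutive window would show almost no change.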

To better understand metamorphic video prompts, MagicTime adds a Magic Text-Encoder. This component interprets complex descriptions of change so they can be rendered as visually and physically coherent videos. Underpinning all of these components is the ChronoMagic dataset, a carefully curated collection of video-text pairs designed specifically for training metamorphic video generation.

Extensive experiments show MagicTime outperforming existing models, producing high-quality, dynamic metamorphic videos whose content distribution and motion patterns closely resemble those of real-world footage. The technology could open new avenues for scientific visualization, entertainment, and educational content.

However, with great power comes great responsibility. The MagicTime team acknowledges the potential for misuse, as the technology could be harnessed to create deceptive or fraudulent content. It’s a reminder that ethical considerations must keep pace with technological advancements, ensuring that innovations like MagicTime are used to enrich society, rather than undermine it.

MagicTime represents a significant step forward in video generation, pointing toward a future where digital simulations come ever closer to the natural transformations occurring around us. Its development showcases the potential of grounding AI models in physical processes and sets a new benchmark for realism and dynamism in digital content creation.