How Test-Time Scaling and Advanced Computing Are Redefining AI Inference

- DeepSeek-R1 is a groundbreaking reasoning model that uses test-time scaling to deliver highly accurate responses through iterative, multi-pass inference methods like chain-of-thought and consensus.

- Powered by NVIDIA NIM microservices, DeepSeek-R1 achieves unprecedented performance, delivering up to 3,872 tokens per second on a single NVIDIA HGX H200 system, thanks to advanced GPU architecture and software optimizations.

- Enterprise-ready and secure, the DeepSeek-R1 NIM microservice allows developers to experiment, customize, and deploy specialized AI agents while maintaining data privacy and leveraging industry-standard APIs.

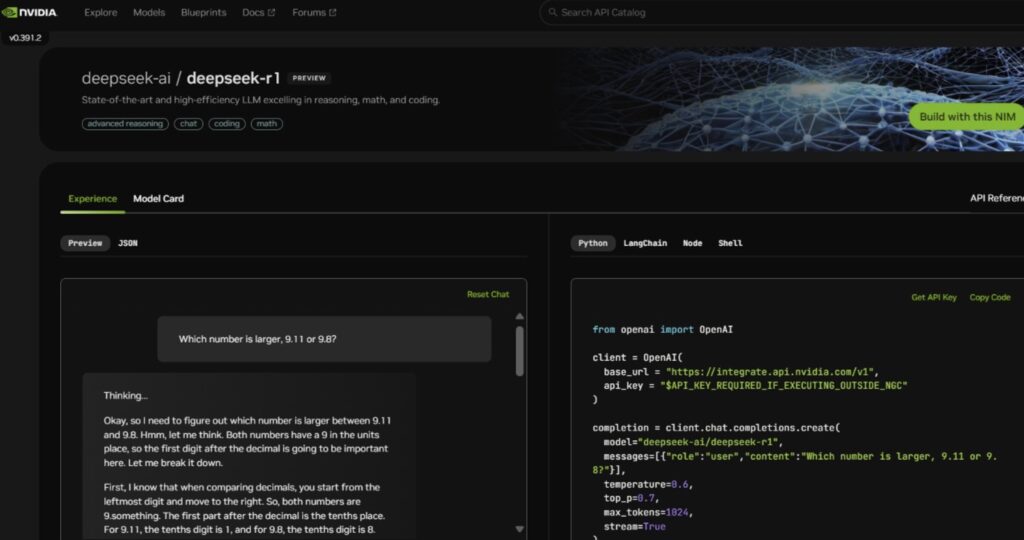

Artificial intelligence is entering a new era, one where reasoning and logical inference are no longer just buzzwords but tangible capabilities. At the forefront of this revolution is DeepSeek-R1, a state-of-the-art reasoning model now live on NVIDIA NIM. This open model is redefining what AI can achieve by leveraging advanced test-time scaling techniques, accelerated computing, and enterprise-grade deployment tools. Let’s dive into how DeepSeek-R1 is setting a new standard for AI reasoning and inference.

The Power of Test-Time Scaling

Traditional AI models often provide direct responses to queries, but DeepSeek-R1 takes a different approach. Instead of delivering immediate answers, it performs multiple inference passes over a query, employing methods like chain-of-thought reasoning, consensus building, and search-based evaluation. This iterative process, known as test-time scaling, allows the model to “think” through problems more thoroughly, resulting in higher-quality, more accurate responses.
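Consensus building is the easiest of these methods to show in isolation. The sketch below samples several independent answers and keeps the most common one, an approach often called self-consistency or majority voting. It assumes a hypothetical `sample_answer` stub that stands in for one sampled reasoning pass. Here the stub simulates a model that is right 60% of the time; a real system would call DeepSeek-R1 with a nonzero temperature and parse the final answer out of its chain of thought.

```python
import random
from collections import Counter


def sample_answer(question: str, rng: random.Random) -> str:
    """Hypothetical stand-in for one sampled reasoning pass.

    Simulates a model that returns the correct answer ("42") 60% of the time
    and a scattered guess in the range 0..99 otherwise.
    """
    if rng.random() < 0.6:
        return "42"
    return str(rng.randint(0, 99))


def majority_vote(answers: list[str]) -> str:
    """Return the most frequent answer.

    Counter.most_common orders equal counts by first occurrence,
    so ties go to the answer that appeared earliest.
    """
    return Counter(answers).most_common(1)[0][0]


def consensus_answer(question: str, n_samples: int, seed: int = 0) -> str:
    """Spend more test-time compute: run n_samples passes, then vote."""
    rng = random.Random(seed)
    answers = [sample_answer(question, rng) for _ in range(n_samples)]
    return majority_vote(answers)
```

With a single sample, the simulated model is wrong about 40% of the time. With dozens of samples, the scattered wrong guesses rarely agree with each other, so the vote almost always lands on "42". The cost is that every extra sample is another full generation.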

As models like DeepSeek-R1 are allowed to iterate on a problem, they generate more output tokens over longer generation cycles, and the quality of their responses continues to improve as more compute is spent at inference time. The cost is a much larger computational load, which makes accelerated computing essential for real-time inference.

NVIDIA NIM: The Engine Behind DeepSeek-R1

To meet the computational demands of DeepSeek-R1, NVIDIA has integrated the model into its NIM microservice, now available on build.nvidia.com. The NIM microservice is designed to simplify deployments while maximizing performance and security.

DeepSeek-R1 is a large mixture-of-experts (MoE) model with an astounding 671 billion parameters, 10 times more than many popular open-source large language models (LLMs). Each MoE layer of the model has 256 experts, and each token is routed to eight of them in parallel for evaluation. Serving this architecture in real time requires many GPUs connected by high-bandwidth, low-latency links, so that every token can reach the experts it is routed to.
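The routing step can be sketched in a few lines. The example below picks the top 8 of 256 experts for a token from its router scores and normalizes their gate weights. It then counts how many token copies must leave their "home" GPU. Two simplifications apply. The softmax over the selected scores is one common gating choice, and DeepSeek's own gating function may differ. The even split of 32 experts per GPU across an 8-GPU node is a hypothetical sharding, not a description of how NIM places experts.

```python
import math

NUM_EXPERTS = 256  # experts per MoE layer, as stated above
TOP_K = 8          # experts each token is routed to
NUM_GPUS = 8       # assumption: experts sharded evenly across one 8-GPU node
EXPERTS_PER_GPU = NUM_EXPERTS // NUM_GPUS  # 32


def route_token(router_logits: list[float], k: int = TOP_K) -> list[tuple[int, float]]:
    """Select the top-k experts for one token and normalize their gate weights.

    Uses a softmax over the selected logits only (a simplification; the
    model's actual gating function may differ).
    """
    top = sorted(range(len(router_logits)),
                 key=lambda i: router_logits[i], reverse=True)[:k]
    m = max(router_logits[i] for i in top)  # subtract max for numerical stability
    exps = [math.exp(router_logits[i] - m) for i in top]
    total = sum(exps)
    return [(i, e / total) for i, e in zip(top, exps)]


def count_cross_gpu_sends(batch_logits: list[list[float]], home_gpu: list[int]) -> int:
    """Count token copies that must leave their home GPU to reach a selected expert."""
    sends = 0
    for logits, home in zip(batch_logits, home_gpu):
        for expert, _weight in route_token(logits):
            if expert // EXPERTS_PER_GPU != home:
                sends += 1
    return sends
```

Suppose routing were uniformly random under this even sharding. Each selected expert would then sit on the token's home GPU with probability 1/8, so on average 7 of every token's 8 copies would cross the interconnect in every MoE layer. That is the traffic the NVLink fabric has to absorb.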

The NVIDIA HGX H200 system, equipped with eight H200 GPUs connected via NVLink and NVLink Switch, is capable of running the full DeepSeek-R1 model at speeds of up to 3,872 tokens per second. Two Hopper features make this throughput possible: the FP8 Transformer Engine, used at every layer, accelerates the math, and 900 GB/s of NVLink bandwidth carries the communication between MoE experts.
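A quick back-of-envelope check shows why FP8 and a single eight-GPU node go together. The sketch counts weight memory only. It assumes every parameter is stored at one byte in FP8 (two in BF16) and ignores KV cache, activations, and runtime buffers. It uses the 141 GB of HBM3e on each H200 GPU.

```python
# Do DeepSeek-R1's weights fit in one HGX H200 node? (weights only)

PARAMS = 671_000_000_000   # total parameters, from the text above
H200_HBM_GB = 141          # HBM3e capacity of one H200 GPU
NUM_GPUS = 8               # GPUs in an HGX H200 system
PEAK_TOKENS_PER_S = 3_872  # system throughput quoted above


def weight_gb(params: int, bytes_per_param: int) -> float:
    """Weight footprint in gigabytes (1 GB = 1e9 bytes)."""
    return params * bytes_per_param / 1e9


node_gb = H200_HBM_GB * NUM_GPUS   # aggregate HBM across the node
fp8_gb = weight_gb(PARAMS, 1)      # FP8: 1 byte per parameter
bf16_gb = weight_gb(PARAMS, 2)     # BF16: 2 bytes per parameter
headroom_gb = node_gb - fp8_gb     # memory left for KV cache etc. with FP8 weights
per_gpu_rate = PEAK_TOKENS_PER_S / NUM_GPUS

print(f"node HBM: {node_gb} GB, FP8 weights: {fp8_gb} GB, BF16 weights: {bf16_gb} GB")
print(f"FP8 fits: {fp8_gb < node_gb}, BF16 fits: {bf16_gb < node_gb}")
print(f"FP8 headroom: {headroom_gb} GB, throughput per GPU: {per_gpu_rate} tokens/s")
```

By this arithmetic, FP8 weights (671 GB) fit in the node's 1,128 GB with about 457 GB to spare, while BF16 weights (1,342 GB) would not. The quoted system throughput works out to roughly 484 tokens per second per GPU.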

Enterprise-Grade Deployment and Customization

One of the standout features of the DeepSeek-R1 NIM microservice is its enterprise-ready design. Developers can securely experiment with the model’s capabilities, build specialized AI agents, and deploy them on their preferred accelerated computing infrastructure. The microservice supports industry-standard APIs, ensuring seamless integration into existing workflows.
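As an illustration of what "industry-standard APIs" can look like in practice, the sketch below builds a chat-completions request using only the Python standard library. It assumes the hosted endpoint follows the OpenAI-compatible chat-completions schema. The base URL, the model identifier, and the temperature of 0.6 are assumptions to verify against the model card on build.nvidia.com, and `build_chat_request` and `send` are hypothetical helper names.

```python
import json
import urllib.request

# Assumptions: confirm both values on the model's page at build.nvidia.com.
BASE_URL = "https://integrate.api.nvidia.com/v1"
MODEL = "deepseek-ai/deepseek-r1"


def build_chat_request(prompt: str, api_key: str,
                       max_tokens: int = 4096) -> urllib.request.Request:
    """Build (but do not send) an OpenAI-style chat-completions POST request."""
    payload = {
        "model": MODEL,
        "messages": [{"role": "user", "content": prompt}],
        "temperature": 0.6,       # assumption: a commonly suggested setting for R1
        "max_tokens": max_tokens,  # leave room for the model's reasoning tokens
    }
    return urllib.request.Request(
        url=f"{BASE_URL}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def send(req: urllib.request.Request) -> str:
    """Send the request and return the assistant's reply (needs network and a valid key)."""
    with urllib.request.urlopen(req) as resp:
        body = json.load(resp)
    return body["choices"][0]["message"]["content"]
```

Keeping request construction separate from sending makes the payload easy to inspect and test offline. Pointing `BASE_URL` at a self-hosted NIM deployment should, under the same compatibility assumption, leave the rest of the code unchanged.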

For enterprises looking to customize DeepSeek-R1, the NVIDIA AI Foundry and NVIDIA NeMo software provide the tools needed to create tailored NIM microservices. This flexibility allows organizations to address specific use cases while maintaining data privacy and security.

The Future of Reasoning Models

The advancements embodied by DeepSeek-R1 are just the beginning. The upcoming NVIDIA Blackwell architecture promises to take test-time scaling to new heights. With fifth-generation Tensor Cores capable of delivering up to 20 petaflops of peak FP4 compute performance and a 72-GPU NVLink domain optimized for inference, the future of reasoning models looks incredibly promising.

Get Started Today

Whether you’re looking to experiment with the API, build specialized agents, or deploy AI solutions at scale, DeepSeek-R1 and NVIDIA NIM provide the tools and performance needed to push the boundaries of what’s possible with AI.

DeepSeek-R1 is more than just a model—it’s a testament to the power of combining advanced reasoning techniques with cutting-edge computing infrastructure. By enabling test-time scaling and delivering enterprise-grade performance, DeepSeek-R1 and NVIDIA NIM are paving the way for a new generation of AI applications that can think, reason, and solve problems like never before.