How a New Self-Learning Framework Combats Hallucinations and Supercharges Problem-Solving in LLMs

- START integrates external tools like code execution to tackle hallucinations and inefficiencies in Large Reasoning Models (LRMs), enabling complex computations, self-checking, and debugging.

- Self-learning techniques—Hint-infer and Hint-RFT—allow START to autonomously master tool usage without demonstration data, setting a new standard for scalable AI training.

- Strong results on PhD-level science, competition math, and coding benchmarks position START as a leading open-source rival to proprietary models like OpenAI’s o1-Preview.

The journey of AI reasoning began with Chain-of-Thought (CoT), where models like GPT-3 broke problems into intermediate steps. This evolved into long CoT, exemplified by models such as OpenAI’s o1 and DeepSeek-R1, which mimic human-like strategies like self-reflection and multi-method exploration. Yet, even these advanced models falter in high-stakes scenarios—hallucinating answers during complex calculations or simulations due to their reliance on internal reasoning alone.

The Achilles’ Heel of Long CoT: Hallucinations and Inefficiency

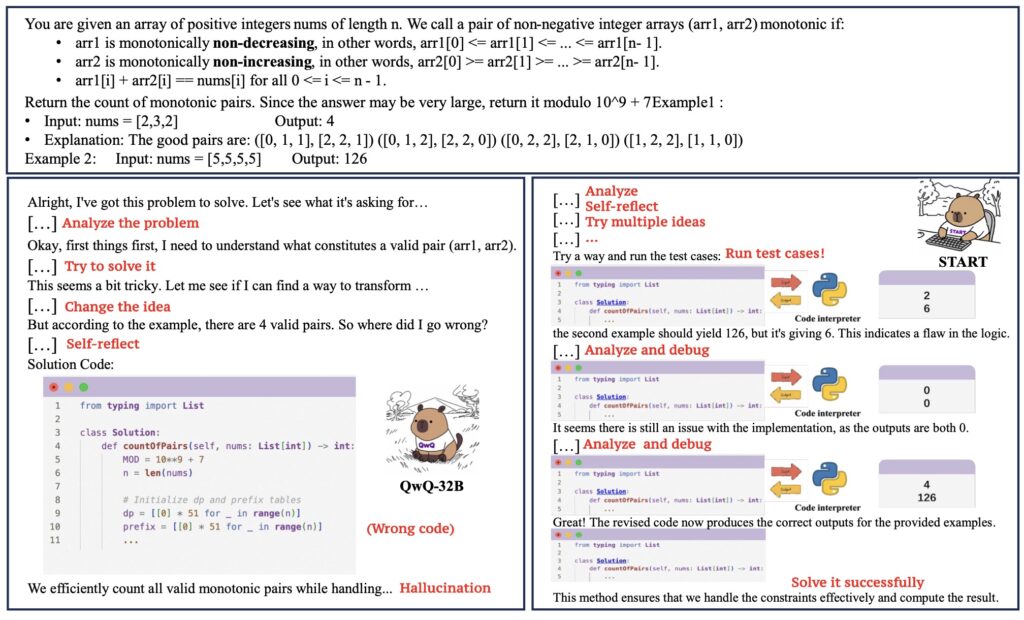

Long CoT models excel at structured thinking but lack the precision of external tools. For instance, solving a physics problem might require computing integrals or simulating systems, and these tasks are prone to errors when carried out purely inside the model’s own reasoning. The limitation becomes glaring in domains like competitive mathematics or scientific research, where accuracy is non-negotiable. Worse, proprietary models like o1 reportedly support tool use (e.g., code execution) but disclose little about how that ability is trained, leaving open-source efforts in the dark.
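To make the integral example concrete, here is a minimal sketch of the kind of short script a reasoning model could hand off to a code interpreter instead of approximating in its head. The `simpson` helper and the choice of integrand are illustrative assumptions, not something taken from START itself.

```python
import math

def simpson(f, a, b, n=1000):
    """Composite Simpson's rule on [a, b] using n subintervals (n is made even)."""
    if n % 2:
        n += 1
    h = (b - a) / n
    total = f(a) + f(b)
    for i in range(1, n):
        # Odd interior points get weight 4, even interior points weight 2.
        total += (4 if i % 2 else 2) * f(a + i * h)
    return total * h / 3

# Integral of sin(x) over [0, pi]; the exact value is 2.
area = simpson(math.sin, 0.0, math.pi)
print(f"{area:.6f}")
```

A few lines of executed code give a result that can be checked against the known exact value, which is the kind of verification long CoT alone cannot provide.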

START’s Secret Sauce: Self-Learning Meets Tool Integration

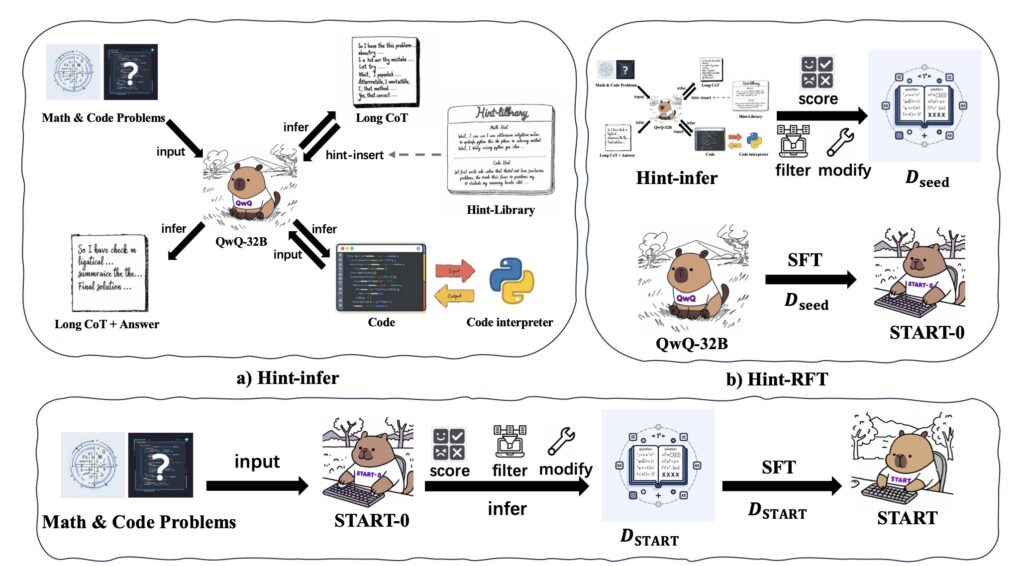

START (Self-Taught Reasoner with Tools) bridges this gap by synergizing long CoT reasoning with external tool usage. Its innovation lies in a two-pronged self-learning framework:

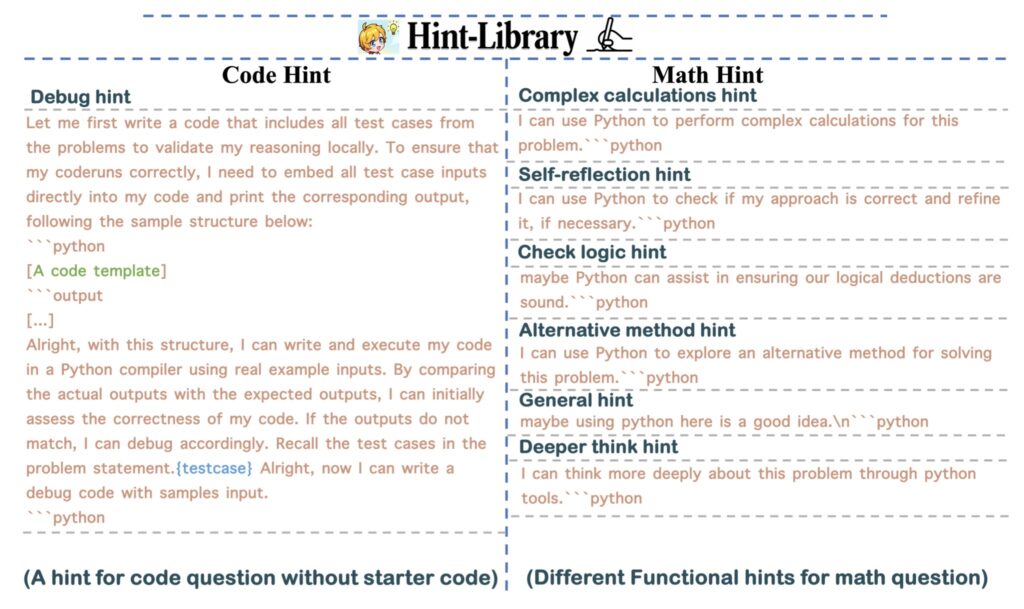

Hint-infer: The Nudge That Sparks Tool Usage

Imagine a student stuck on a problem until a teacher whispers, “Try using a calculator.” Similarly, Hint-infer inserts short hints such as “Wait, maybe using Python here is a good idea” into the model’s reasoning at inference time. These hints act as cognitive triggers that prompt the model to invoke tools like a code interpreter, without requiring any demonstration data. Remarkably, the same mechanism also works as a sequential test-time scaling method: inserting further hints gives the model more chances to think and check its work on the same problem, which can improve accuracy.
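The control flow behind Hint-infer can be sketched in a few lines. This is a toy illustration under stated assumptions, not START’s actual implementation: `toy_model` is a hypothetical stand-in for a real LRM, the `<python>` tags and `[output]` marker are invented delimiters, the hint is simply appended after the first draft, and `run_python` executes code without any sandboxing.

```python
import contextlib
import io
import re

HINT = "Wait, maybe using Python here is a good idea."

def run_python(code: str) -> str:
    """Execute a code string and return what it printed (no sandbox; demo only)."""
    buffer = io.StringIO()
    try:
        with contextlib.redirect_stdout(buffer):
            exec(code, {})
    except Exception as exc:
        return f"Error: {exc!r}"
    return buffer.getvalue().strip()

def toy_model(context: str) -> str:
    """Hypothetical stand-in for an LRM: returns the next chunk of reasoning."""
    if "[output]" in context:
        return "The interpreter printed 5050, so the answer is 5050."
    if HINT in context:
        return "\n<python>print(sum(range(1, 101)))</python>"
    return "Adding these in my head, the total is roughly 5000."

def hint_infer(question: str, generate=toy_model) -> str:
    context = question + "\n"
    context += generate(context)   # first pass: reasoning without tools
    context += "\n" + HINT         # insert the hint instead of stopping
    context += generate(context)   # the model continues and writes code
    match = re.search(r"<python>(.*?)</python>", context, re.DOTALL)
    if match:
        # Run the generated code and feed the result back into the context.
        context += f"\n[output] {run_python(match.group(1))}\n"
        context += generate(context)
    return context

print(hint_infer("What is 1 + 2 + ... + 100?"))
```

With a real model, `generate` would be a decoding call that stops at an end-of-reasoning or end-of-code marker, and the hint step could be repeated to spend more inference-time compute on the same problem.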

Hint Rejection Sampling Fine-Tuning (Hint-RFT): Learning From Mistakes

START doesn’t stop at hints. Hint-RFT combines Hint-infer with rejection sampling fine-tuning: the model generates multiple tool-using reasoning trajectories via Hint-infer, those trajectories are scored, filtered, and modified, and the model is then fine-tuned on the ones that survive. Think of it as a self-editing process that discards flawed attempts and learns from the best outcomes. This trial-and-error loop mimics human learning, enabling START to master tasks ranging from debugging code to exploring alternative mathematical proofs.
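The score, filter, and modify steps can be sketched as a small data-selection routine. Everything here is a simplified assumption for illustration: the `Trajectory` fields, the exact-match `score`, the blank-line-stripping `modify`, and the `per_question` cap are hypothetical choices, and the fine-tuning step itself is not shown.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Trajectory:
    question: str
    text: str        # full reasoning trace, including any tool calls
    answer: str      # final answer extracted from the trace
    used_tool: bool  # whether the trace invoked the code interpreter

def score(traj: Trajectory, reference: str) -> int:
    """Toy scorer: 1 if the final answer matches the reference, else 0."""
    return int(traj.answer.strip() == reference.strip())

def modify(traj: Trajectory) -> str:
    """Placeholder clean-up step: drop blank lines from the trace."""
    return "\n".join(line for line in traj.text.splitlines() if line.strip())

def build_finetune_set(samples, references, per_question=1):
    """Keep correct, tool-using traces, at most `per_question` per question."""
    kept = {}
    for traj in samples:
        if not traj.used_tool or score(traj, references[traj.question]) == 0:
            continue  # rejected: no tool call or wrong final answer
        bucket = kept.setdefault(traj.question, [])
        if len(bucket) < per_question:
            bucket.append({"prompt": traj.question, "completion": modify(traj)})
    return [example for bucket in kept.values() for example in bucket]

samples = [
    Trajectory("2**10?", "I guess 1000.", "1000", False),
    Trajectory("2**10?", "Run code.\n\nprint(2**10) -> 1024", "1024", True),
    Trajectory("2**10?", "Run code.\nprint(2*10) -> 20", "20", True),
]
dataset = build_finetune_set(samples, {"2**10?": "1024"})
print(dataset)
```

Only the second trajectory survives: the first never calls a tool and the third calls one but reaches the wrong answer. The surviving prompt and completion pairs are what a supervised fine-tuning run would consume.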

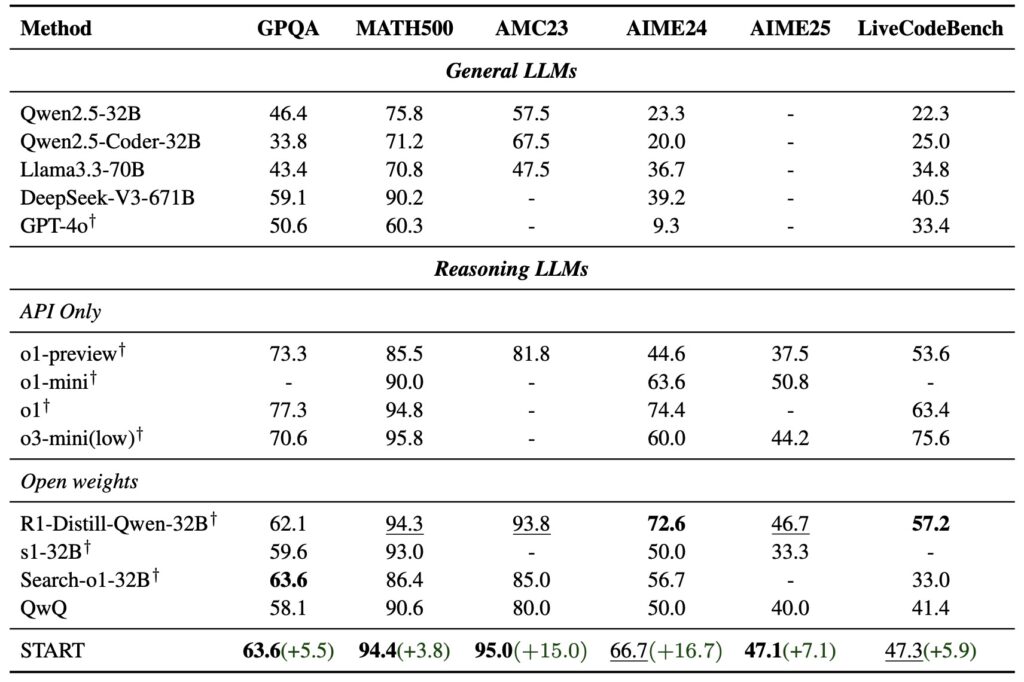

Benchmark Dominance: From PhD Science to Coding Challenges

START’s prowess is validated across notoriously tough benchmarks:

- GPQA (PhD-level science QA): 63.6% accuracy, outperforming its base model, QwQ-32B.

- Competition Math (AMC23, AIME24, AIME25): 95.0%, 66.7%, and 47.1% accuracy, respectively, surpassing most open-source models and rivaling proprietary ones.

- LiveCodeBench (coding): 47.3% accuracy, showcasing robust problem-solving in real-world programming tasks.

These results not only clearly beat the base model, QwQ-32B, but also narrow the gap with closed models like o1-Preview, proving that open-source innovation can thrive.

Open, Collaborative, and Tool-Driven

START’s breakthroughs signal a paradigm shift. By democratizing tool integration and self-learning, it offers a blueprint for developing reliable, transparent AI systems. Future models could leverage specialized tools for medicine, engineering, or climate modeling—domains where precision is critical. Moreover, START’s open-source nature fosters collaboration, accelerating progress beyond walled gardens like OpenAI.

As AI tackles higher-stakes tasks, the fusion of autonomous reasoning and external tools will be indispensable. START isn’t just a model; it’s a manifesto for the next era of AI—one where machines think smarter, not harder.