InstantStyle Emerges as a Game-Changer, Masterfully Navigating the Complex Terrain of Style-Consistent Imagery

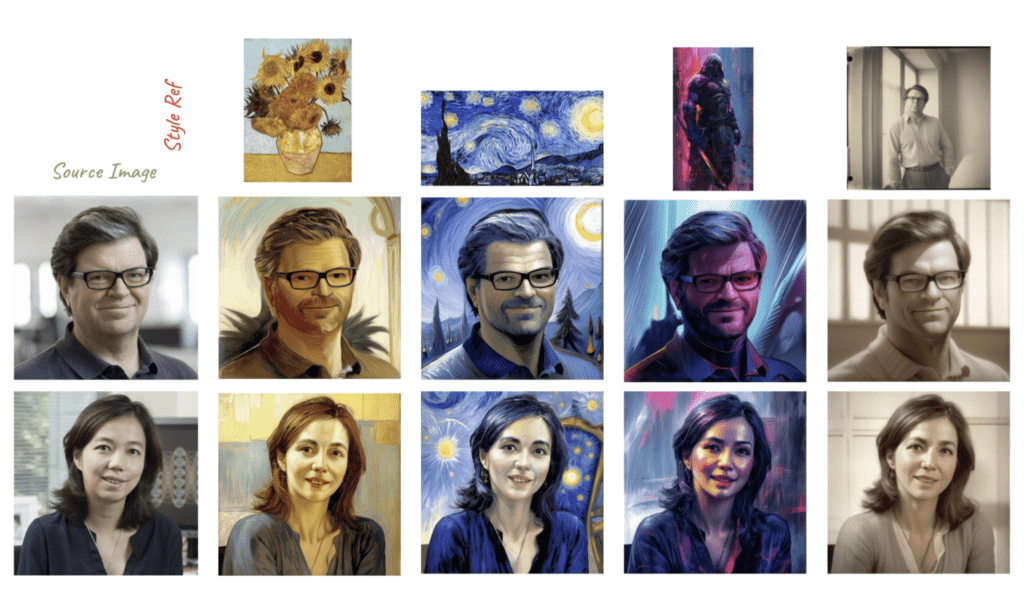

- Innovative Style and Content Disentanglement: InstantStyle separates style from content in the reference image by working within a single feature space, where features can be added to or subtracted from one another. Subtracting the content component leaves a cleaner style signal for generation.

- Selective Style Feature Injection: By integrating reference image features solely into designated style-specific blocks, InstantStyle circumvents the common pitfalls of style leaks and obviates the need for intricate weight adjustments, streamlining the image personalization process.

- Broad Applicability and Future Potential: The framework’s principles are not only effective for text-to-image generation but also hold promising implications for other consistent generation tasks, including video, hinting at a broader impact on the future of digital media creation.

In the fast-moving field of digital image personalization, InstantStyle, a framework built on diffusion models, marks a significant step forward. It addresses a long-standing difficulty in image generation: keeping style consistent without sacrificing text controllability.

Challenging the Status Quo

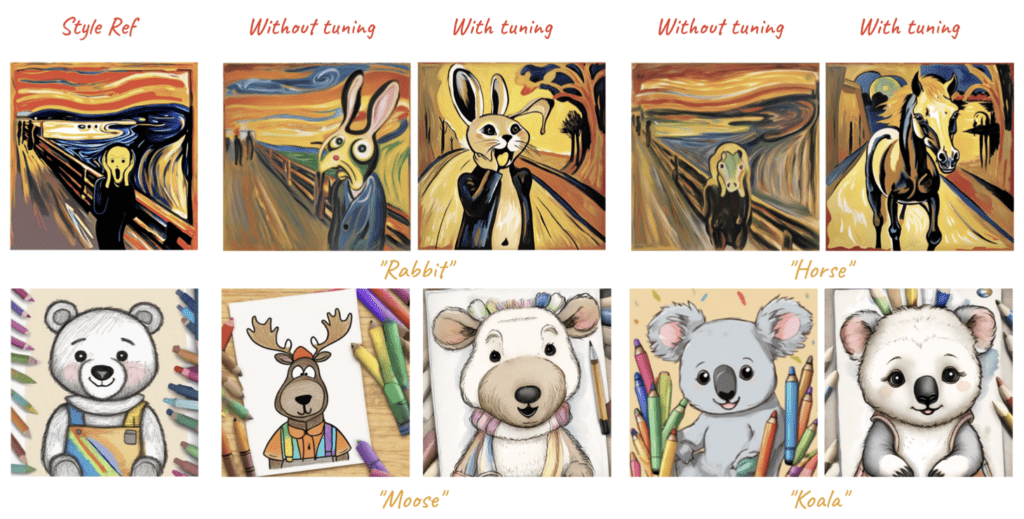

Traditional methods often struggle because ‘style’ is loosely defined: the term covers a broad spectrum of elements, from color and material to atmosphere and structure. Inversion-based techniques, while promising, tend to degrade the style and lose the fine details that give an image its character. Adapter-based methods, meanwhile, demand painstaking weight tuning for each reference image, a process that is both time-consuming and error-prone.

Enter InstantStyle

InstantStyle sidesteps these issues with a two-pronged strategy. The first tactic is a simple mechanism for decoupling style and content within the feature space of the reference image. It rests on the premise that features in the same space can be added to or subtracted from one another, so removing the content features from the image features leaves the style. The second tactic injects the reference features only into designated style-specific blocks, which keeps unrelated content elements from leaking into the result. Together, these tactics preserve the stylistic integrity of the generated images and remove the need for per-image weight tuning.
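To make the two tactics concrete, here is a minimal sketch in plain Python. It is an illustration under stated assumptions, not InstantStyle's actual implementation: features are toy lists of floats, the block names (`down_0`, `up_0`, and so on) are hypothetical, and the additive fusion in `inject` is an assumed stand-in for however an adapter combines text and reference features inside a block.

```python
from typing import Dict, List, Sequence


def subtract_content(image_feat: Sequence[float],
                     content_feat: Sequence[float]) -> List[float]:
    """Tactic 1: remove content by element-wise subtraction.

    Only meaningful if both vectors live in the same feature space,
    which we approximate here by requiring equal dimensionality.
    """
    if len(image_feat) != len(content_feat):
        raise ValueError("features must have the same dimensionality")
    return [i - c for i, c in zip(image_feat, content_feat)]


def block_scales(all_blocks: Sequence[str],
                 style_blocks: Sequence[str],
                 scale: float = 1.0) -> Dict[str, float]:
    """Tactic 2: reference features reach only the style blocks.

    Every block not listed in style_blocks gets scale 0.0, so nothing
    from the reference image is injected there.
    """
    unknown = set(style_blocks) - set(all_blocks)
    if unknown:
        raise ValueError(f"unknown blocks: {sorted(unknown)}")
    return {name: (scale if name in style_blocks else 0.0)
            for name in all_blocks}


def inject(text_out: Sequence[float],
           ref_out: Sequence[float],
           scale: float) -> List[float]:
    """Assumed additive fusion: block output = text path + scale * reference path."""
    if len(text_out) != len(ref_out):
        raise ValueError("outputs must have the same dimensionality")
    return [t + scale * r for t, r in zip(text_out, ref_out)]


# Toy values chosen as exact binary fractions so the arithmetic is exact.
image_feat = [1.0, 0.5, 0.75]     # features of the reference image
content_feat = [0.5, 0.5, 0.25]   # features describing its content
style_feat = subtract_content(image_feat, content_feat)
print(style_feat)                 # [0.5, 0.0, 0.5]

# Hypothetical block names; only "up_0" is treated as a style block here.
blocks = ["down_0", "down_1", "mid", "up_0", "up_1"]
scales = block_scales(blocks, style_blocks=["up_0"])
print(scales["up_0"], scales["mid"])  # 1.0 0.0
```

With a scale of 0.0, `inject` returns the text path unchanged, which is the point of the second tactic: blocks that are not designated as style blocks never see the reference image, so no per-image weight needs to be balanced for them.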

Implications and Future Directions

The implications of InstantStyle extend beyond image customization. Its underlying principles shed light on the specific roles played by different attention layers in neural networks, suggesting that layers do not contribute equally to separating style from content. This finding could inform how future models are trained, promoting efficiency and reducing the risk of overfitting.

Moreover, InstantStyle’s methods may carry over to other consistent generation tasks across various media forms, including video, which broadens the range of potential applications as digital media continues to evolve.

InstantStyle points toward a new era in digital media creation, one in which style and content coexist without the trade-offs imposed by earlier techniques. As research progresses, its approach has the potential to reshape both image and video generation.