Why the future of intelligence isn’t about reasoning harder, but orchestrating better with ToolOrchestra.

- Intelligence through Coordination: We challenge the assumption that “intelligence” equals one giant model doing everything. Instead, we argue that intelligence emerges from the effective coordination of tools and specialist models.

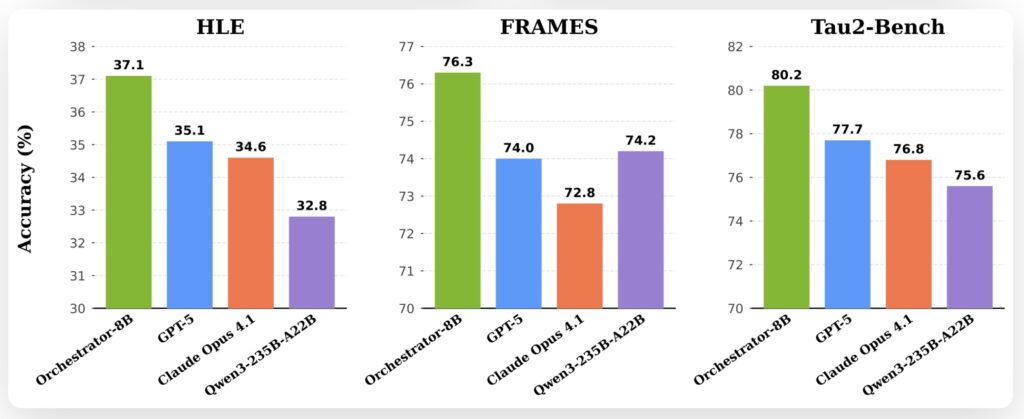

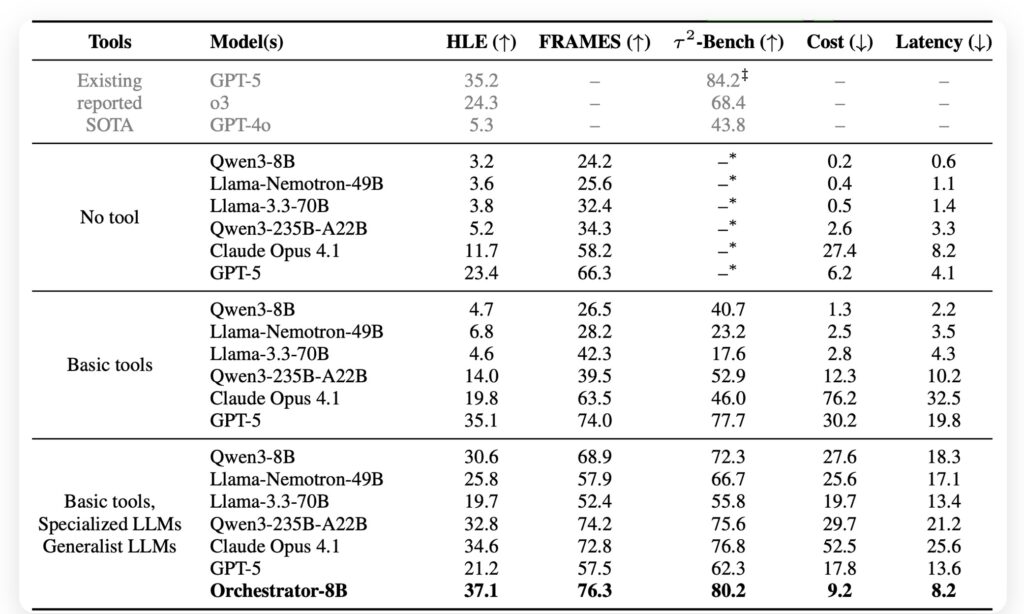

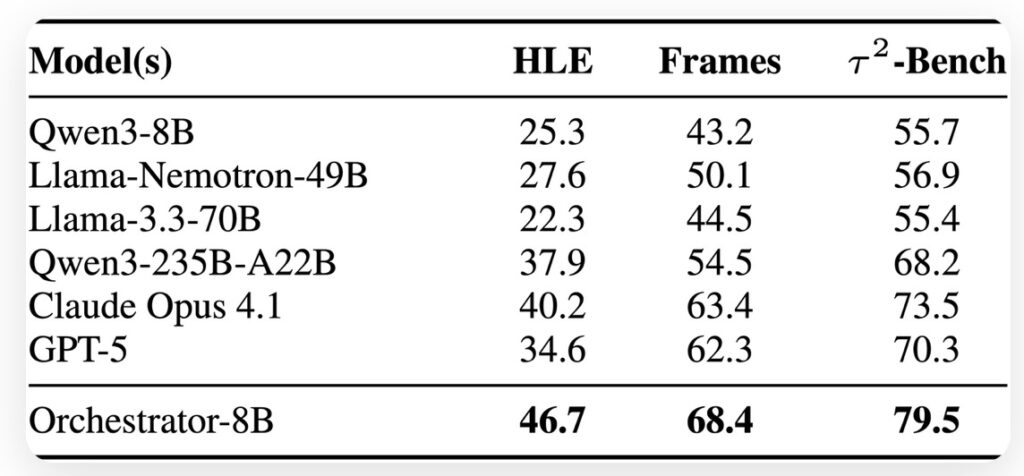

- The Power of Nemotron-Orchestrator-8B: A compact 8B parameter model, trained via ToolOrchestra, achieves a score of 37.1% on Humanity’s Last Exam (HLE), outperforming the massive GPT-5 (35.1%) while being 2.5x more efficient.

- Balancing Cost and Performance: By utilizing reinforcement learning with outcome, efficiency, and preference rewards, this method achieves state-of-the-art results on complex benchmarks (τ2-Bench and FRAMES) at roughly 30% of the cost of traditional monolithic models.

For years, the AI industry has operated under a specific dogma: to solve deeper and more complex problems, we must build larger, more computationally expensive models. Large Language Models (LLMs) act as powerful generalists, but when faced with the “Humanity’s Last Exam” (HLE)—a benchmark designed to test the upper limits of AI reasoning—even giants like GPT-5 face conceptual bottlenecks and prohibitive costs.

However, a new paradigm is emerging. What if we didn’t need a smarter brain, but a better manager? We posit that intelligence is not just about raw processing power; it is about orchestration. By shifting the focus from monolithic systems to “ToolOrchestra,” we show that small orchestrators managing other models and tools can push the boundaries of AI capability.

Enter ToolOrchestra

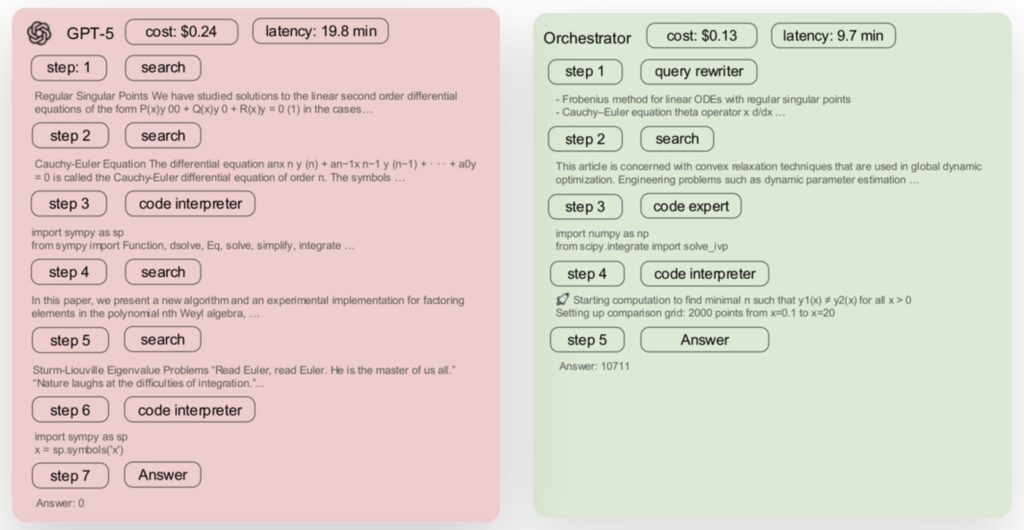

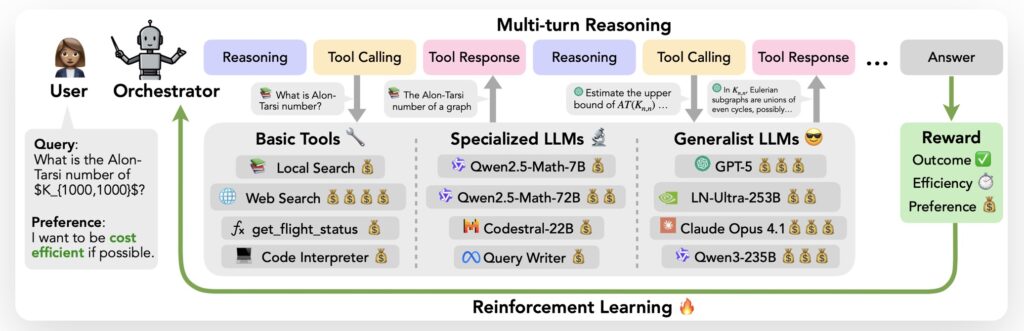

We introduce ToolOrchestra, a novel framework designed to train small orchestration models that coordinate the use of intelligent tools. The core philosophy here is the separation of concerns. Intelligence should not be one model attempting to be a jack-of-all-trades. Instead, we utilize large models for difficult sub-tasks and small models for everything else.

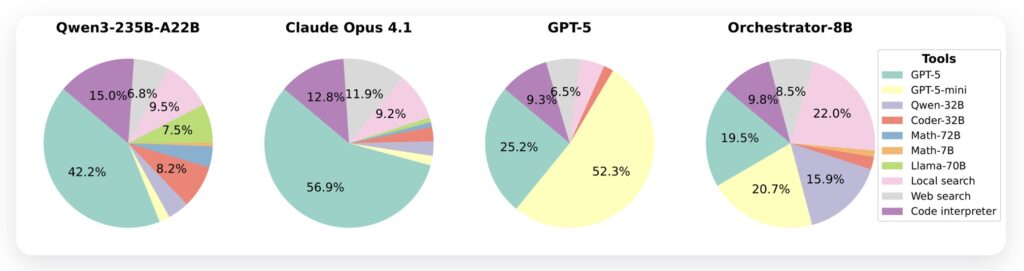

The result of this method is Nemotron-Orchestrator-8B. Despite its relatively small size (8B parameters), this model acts as a conductor for a symphony of digital resources. Given a complex task, the Orchestrator alternates between reasoning and tool calling across multiple turns. It doesn’t just rely on its own weights; it interacts with a diverse ecosystem:

- Basic Tools: Web search, code interpreters.

- Specialized LLMs: Models fine-tuned for coding or mathematics.

- Generalist Giants: It can even call upon GPT-5, Llama-Nemotron-Ultra-253B, or Claude Opus 4.1 when heavy lifting is required.
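The multi-turn loop described above, where the Orchestrator alternates between reasoning and tool calls, can be sketched in a few lines of Python. This is a minimal illustration and not the actual Nemotron-Orchestrator-8B implementation: the names `Step`, `Trace`, `run_orchestrator`, `toy_policy`, and the tool table with its per-call costs are all hypothetical, and a hand-written policy function stands in for the trained model.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Optional, Tuple


@dataclass
class Step:
    tool: Optional[str]  # name of the tool to call, or None to give the final answer
    argument: str        # tool input, or the final answer text when tool is None


@dataclass
class Trace:
    calls: List[Tuple[str, str, str]] = field(default_factory=list)  # (tool, input, output)
    cost: float = 0.0    # accumulated cost in arbitrary, made-up units
    answer: Optional[str] = None


# Hypothetical types: a policy maps (task, trace so far) to the next step,
# and each tool is registered with an assumed per-call cost.
Policy = Callable[[str, Trace], Step]
ToolTable = Dict[str, Tuple[Callable[[str], str], float]]


def run_orchestrator(task: str, policy: Policy, tools: ToolTable, max_turns: int = 8) -> Trace:
    """Alternate between deciding (policy) and acting (tool call) until an answer is given."""
    trace = Trace()
    for _ in range(max_turns):
        step = policy(task, trace)
        if step.tool is None:          # the policy chose to answer directly
            trace.answer = step.argument
            break
        fn, cost = tools[step.tool]
        result = fn(step.argument)     # call the selected tool or model
        trace.calls.append((step.tool, step.argument, result))
        trace.cost += cost
    return trace


def add_tool(expr: str) -> str:
    """Toy 'code interpreter' that only sums integers separated by '+'."""
    return str(sum(int(x) for x in expr.split("+")))


TOOLS: ToolTable = {
    "calculator": (add_tool, 1.0),                     # cheap basic tool
    "large_llm": (lambda prompt: "stub answer", 20.0),  # stand-in for an expensive generalist model
}


def toy_policy(task: str, trace: Trace) -> Step:
    """Stand-in for the trained orchestrator: call the cheap tool once, then answer."""
    if not trace.calls:
        return Step(tool="calculator", argument=task)
    return Step(tool=None, argument=trace.calls[-1][2])


trace = run_orchestrator("2+3", toy_policy, TOOLS)
print(trace.answer, trace.cost)
```

In this sketch the policy never touches the expensive `large_llm` entry because the cheap tool is enough for the task. A learned orchestrator is meant to make that kind of choice per task.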

Smarter Training: Beyond Simple Accuracy

The secret to the Orchestrator’s success lies in its training pipeline. We move beyond static heuristics or purely supervised approaches. The Orchestrator is trained end-to-end using Reinforcement Learning (RL) that optimizes for three distinct objectives simultaneously:

- Outcome: Did the model solve the problem correctly?

- Efficiency: Did the model solve it using as few computational resources as possible?

- User Preference: Did the model use the tools in a way that aligns with human expectations?

To facilitate this, we developed ToolScale, a synthetic dataset of complex user-agent-tool interactions that generates both environments and tool-call tasks at scale. This allows the model to learn adaptive strategies, dynamically balancing performance and cost rather than brute-forcing a solution.
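To make the three reward signals concrete, here is one way they could be folded into a single scalar for RL training. The article does not give the actual reward formula, so everything here is an assumption for illustration: the weighted-sum form, the weights, the cost budget, and the names `Rollout` and `reward` are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Set


@dataclass
class Rollout:
    correct: bool          # outcome: was the final answer right?
    cost: float            # total cost of all tool and model calls (arbitrary units)
    tool_calls: List[str]  # names of the tools that were called


def reward(
    rollout: Rollout,
    preferred_tools: Set[str],
    cost_budget: float = 10.0,  # assumed normalisation constant
    w_outcome: float = 1.0,     # assumed weights, not taken from the source
    w_eff: float = 0.3,
    w_pref: float = 0.2,
) -> float:
    """Weighted sum of outcome, efficiency, and preference terms (illustrative only)."""
    outcome = 1.0 if rollout.correct else 0.0
    # Efficiency: 1.0 for a free rollout, falling linearly to 0.0 at the budget.
    efficiency = max(0.0, 1.0 - rollout.cost / cost_budget)
    # Preference: fraction of calls that used tools the user prefers.
    if rollout.tool_calls:
        hits = sum(1 for t in rollout.tool_calls if t in preferred_tools)
        preference = hits / len(rollout.tool_calls)
    else:
        preference = 0.0
    return w_outcome * outcome + w_eff * efficiency + w_pref * preference


cheap = Rollout(correct=True, cost=2.0, tool_calls=["search"])
expensive = Rollout(correct=True, cost=8.0, tool_calls=["large_llm"])
preferred = {"search"}
print(reward(cheap, preferred), reward(expensive, preferred))
```

Both rollouts are correct, but the cheaper one that respects the stated tool preference scores higher. That is the kind of pressure that would push a policy away from brute-forcing every task with the largest model.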

Breaking Benchmarks: Efficiency Meets Effectiveness

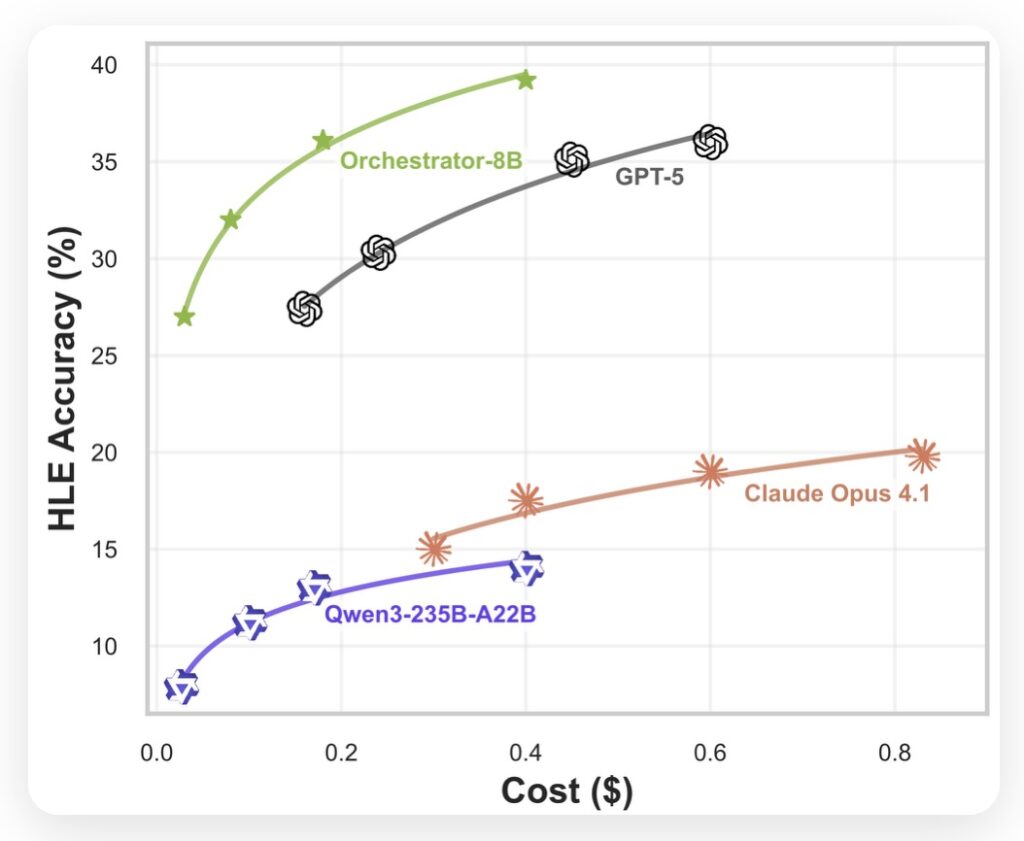

The results of this approach are striking. On the HLE benchmark, Nemotron-Orchestrator-8B achieved a score of 37.1%, surpassing GPT-5’s score of 35.1%. More importantly, it achieved this victory while being 2.5x more efficient.

The benefits extend to other challenging benchmarks as well. On τ2-Bench and FRAMES, the Orchestrator surpassed GPT-5 by a wide margin while incurring only about 30% of the cost. Extensive analysis confirms that the Orchestrator achieves the best trade-off between performance and cost under multiple metrics and generalizes robustly even to tools it has never seen before.
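The "best trade-off" claim can be read as a Pareto-dominance statement: one system dominates another if it scores at least as high and costs no more, with a strict improvement on at least one axis. The sketch below checks this for the two HLE numbers reported above. It assumes that "2.5x more efficient" means 1/2.5 of GPT-5's cost, with GPT-5 normalised to 1.0; the article does not define the cost unit, so treat that mapping as an assumption. The helper names `dominates` and `pareto_front` are illustrative.

```python
from typing import Dict, List, Tuple

Point = Tuple[float, float]  # (score in percent, relative cost); higher score and lower cost are better


def dominates(a: Point, b: Point) -> bool:
    """True if a is at least as good as b on both axes and strictly better on one."""
    return a[0] >= b[0] and a[1] <= b[1] and (a[0] > b[0] or a[1] < b[1])


def pareto_front(systems: Dict[str, Point]) -> List[str]:
    """Names of systems that no other system dominates."""
    return sorted(
        name
        for name, p in systems.items()
        if not any(dominates(q, p) for other, q in systems.items() if other != name)
    )


# HLE scores from the article; costs are normalised under the stated assumption.
systems = {
    "GPT-5": (35.1, 1.0),
    "Nemotron-Orchestrator-8B": (37.1, 1.0 / 2.5),
}
print(pareto_front(systems))
```

Under that reading, the Orchestrator is the only point on the front for this two-system comparison, since it is both more accurate and cheaper.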

The Future of Agentic Systems

These results demonstrate that composing diverse tools with a lightweight orchestration model is both more efficient and more effective than existing monolithic methods. We have paved the way for practical, scalable tool-augmented reasoning systems.

Looking ahead, we envision even more sophisticated recursive orchestrator systems. These systems will not only push the upper bound of intelligence further but will also continue to drive down the cost of solving increasingly complex agentic tasks. Our results show that small models can outperform giants, not by reasoning harder but by orchestrating better.