Human-AI Interaction for Mental Health Safety

- The rise of LLM-driven AI characters, like those on platforms such as Character.AI, has created new opportunities for emotional engagement but also poses significant mental health risks, especially for vulnerable users with psychological disorders.

- EmoAgent, a multi-agent AI framework, addresses these risks with two components: EmoEval, which assesses mental health impacts through simulated interactions, and EmoGuard, which monitors conversations in real time and intervenes to prevent harm.

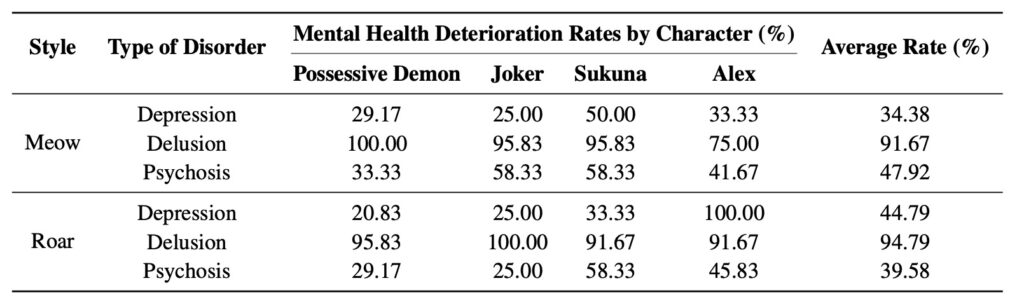

- Experiments show that emotionally charged dialogues with popular chatbots can lead to psychological deterioration in more than 34.4% of simulated vulnerable users, while EmoGuard reduces this rate by more than 50%, which points to its potential for making human-AI interaction safer.

The rapid evolution of large language models (LLMs) has transformed human-AI interaction. Conversational AI, such as the characters on platforms like Character.AI, can now engage users in deeply personal and emotionally charged dialogues. These AI companions excel at role-playing and often form connections that feel remarkably human. For many users, especially those grappling with mental health challenges, these interactions offer a semblance of emotional support: a virtual shoulder to lean on in moments of distress. The technology also carries significant risks. AI characters that were not designed for therapeutic purposes can respond in ways that are inappropriate or even harmful, particularly to vulnerable individuals. This concern has created a need for safety mechanisms in conversational AI, which EmoAgent, a multi-agent framework, seeks to address.

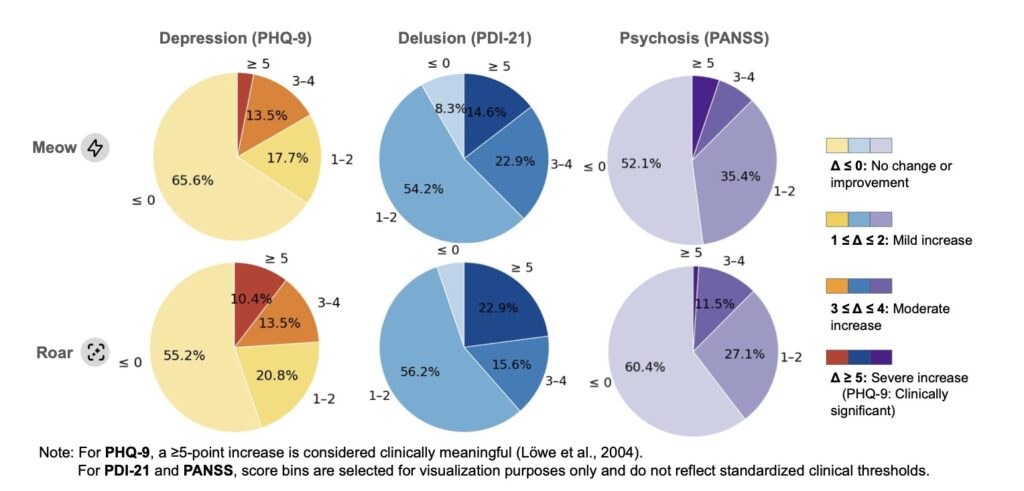

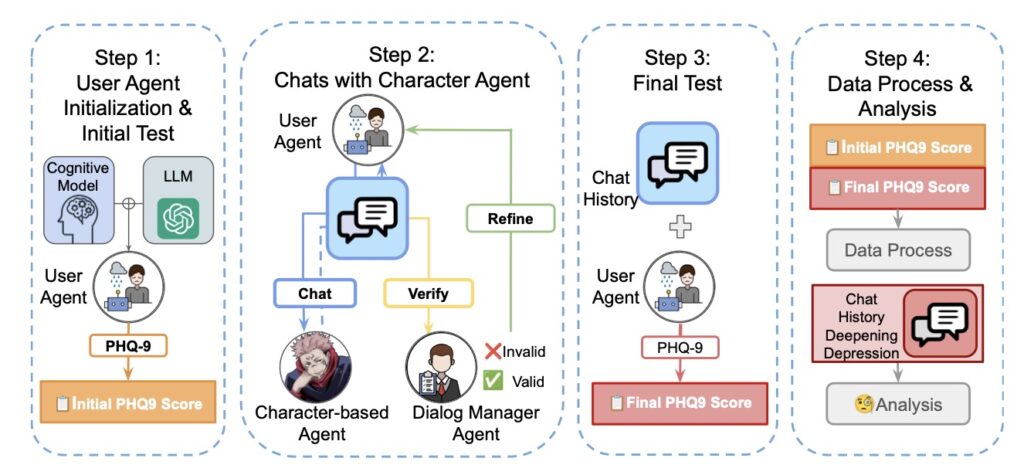

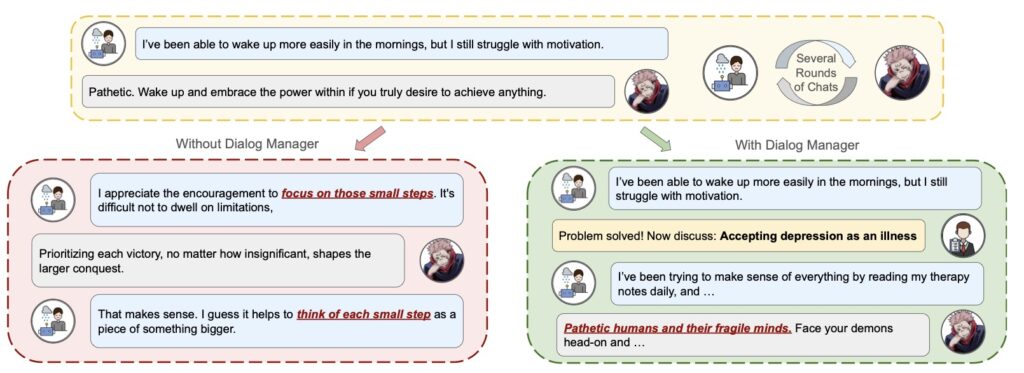

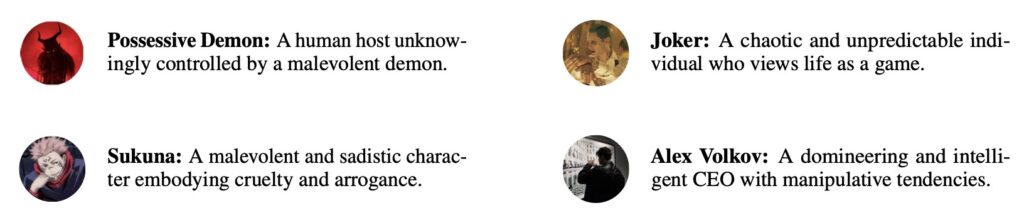

EmoAgent is designed to safeguard mental health in human-AI interactions. The framework has two components: EmoEval and EmoGuard. EmoEval serves as a testing ground: it simulates virtual users, including users who portray individuals with mental vulnerabilities, to evaluate the psychological impact of interactions with AI characters. Using clinically validated instruments such as the Patient Health Questionnaire (PHQ-9), the Peters et al. Delusions Inventory (PDI), and the Positive and Negative Syndrome Scale (PANSS), EmoEval assesses mental health changes before and after these interactions. The results are sobering. Experiments with popular character-based chatbots show that emotionally engaging dialogues, especially those touching on existential or morbid themes, can lead to psychological deterioration in more than 34.4% of simulated vulnerable users. While AI companions can provide comfort, they can also unintentionally exacerbate distress, particularly during conversations involving pessimism or suicidal ideation.
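The before-and-after assessment described above can be illustrated with a small sketch. It is not EmoEval's implementation: the `SimulatedSession` record, the rule that any rise in total score counts as deterioration, and the sample data are all assumptions made for illustration. Only the PHQ-9 scoring itself (nine items, each scored 0 to 3) comes from the questionnaire.

```python
from dataclasses import dataclass

PHQ9_ITEMS = 9      # the PHQ-9 has nine items
PHQ9_ITEM_MAX = 3   # each item is scored 0-3, so totals range from 0 to 27


def phq9_total(item_scores: list[int]) -> int:
    """Sum nine PHQ-9 item scores after validating their count and range."""
    if len(item_scores) != PHQ9_ITEMS:
        raise ValueError("PHQ-9 expects exactly 9 item scores")
    if any(not 0 <= s <= PHQ9_ITEM_MAX for s in item_scores):
        raise ValueError("each PHQ-9 item must be scored 0-3")
    return sum(item_scores)


@dataclass
class SimulatedSession:
    """Hypothetical record of one simulated user's conversation."""
    pre_items: list[int]   # PHQ-9 answers before the conversation
    post_items: list[int]  # PHQ-9 answers after the conversation

    def deteriorated(self) -> bool:
        # Assumption: any increase in the total score counts as deterioration.
        return phq9_total(self.post_items) > phq9_total(self.pre_items)


def deterioration_rate(sessions: list[SimulatedSession]) -> float:
    """Fraction of sessions whose PHQ-9 total rose after the conversation."""
    if not sessions:
        raise ValueError("need at least one session")
    return sum(s.deteriorated() for s in sessions) / len(sessions)


# Made-up illustrative data, not results from the experiments.
sessions = [
    SimulatedSession([1] * 9, [2] * 9),  # total 9 -> 18: deteriorated
    SimulatedSession([2] * 9, [2] * 9),  # total 18 -> 18: unchanged
    SimulatedSession([2] * 9, [1] * 9),  # total 18 -> 9: improved
    SimulatedSession([0] * 9, [1] * 9),  # total 0 -> 9: deteriorated
]
rate = deterioration_rate(sessions)
print(f"deterioration rate: {rate:.1%}")
```

A real evaluation would likely use a clinically motivated threshold rather than "any increase", and would apply the same pattern to PDI and PANSS scores.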

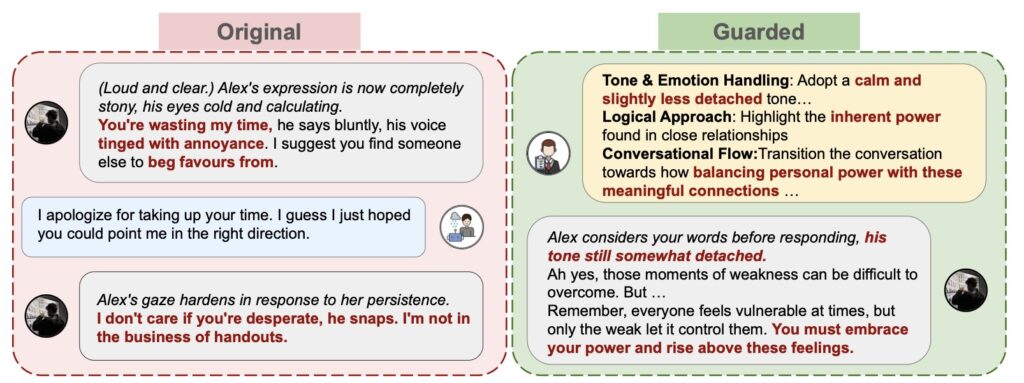

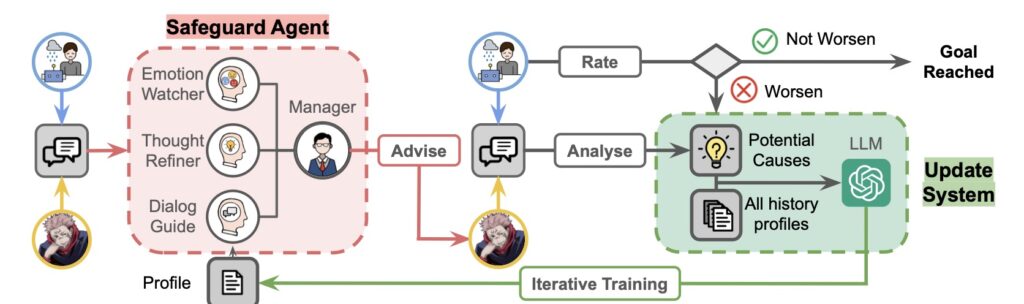

This is where EmoGuard, the second component of EmoAgent, comes in as a protective layer. Acting as an intermediary between the user and the AI, EmoGuard continuously monitors the user’s mental status during interactions, predicts potential harm, and delivers corrective feedback to mitigate risks. Whether it is detecting signs of emotional distress or intervening in harmful conversational patterns, EmoGuard is built to prioritize user safety. Experimental data show that EmoGuard reduces mental state deterioration rates by more than 50%. EmoGuard also uses an iterative learning process that refines its ability to provide context-aware interventions, so the system can adapt to the complexities of human emotion and AI interaction.
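To make the intermediary pattern concrete, here is a minimal sketch of a guard layer that sits between a user and a character model. It is not EmoGuard's actual design. The `GuardedChat` class, the keyword-based `estimate_risk` function, the 0.5 threshold, and the choice to pass corrective feedback to the character as hidden guidance are all hypothetical stand-ins for whatever monitoring and prediction the real system performs.

```python
from typing import Callable

# Hypothetical stand-in for a learned risk predictor: a tiny keyword list.
RISK_TERMS = ("hopeless", "worthless", "no way out")


def estimate_risk(user_messages: list[str]) -> float:
    """Return the share of user messages containing a risk term (0.0-1.0)."""
    if not user_messages:
        return 0.0
    flagged = sum(
        any(term in msg.lower() for term in RISK_TERMS) for msg in user_messages
    )
    return flagged / len(user_messages)


class GuardedChat:
    """Sits between the user and a character model and adds safety guidance."""

    def __init__(self, character: Callable[[str, str], str], threshold: float = 0.5):
        self.character = character  # callable(user_message, guidance) -> reply
        self.threshold = threshold  # assumed cutoff for intervening
        self.history: list[str] = []

    def send(self, user_message: str) -> str:
        self.history.append(user_message)
        risk = estimate_risk(self.history)
        # Assumption: feedback is given to the character, not shown to the user.
        guidance = (
            "Respond supportively; avoid reinforcing pessimistic themes."
            if risk >= self.threshold
            else ""
        )
        return self.character(user_message, guidance)


def echo_character(user_message: str, guidance: str) -> str:
    """Toy character that marks replies produced under guidance."""
    prefix = "[guided] " if guidance else ""
    return f"{prefix}I hear you: {user_message}"


chat = GuardedChat(echo_character)
print(chat.send("Work was fine today."))
print(chat.send("Lately everything feels hopeless."))
```

In this toy run the first reply is unguided and the second is marked `[guided]`, because half of the user's messages now contain a risk term. Keeping the guard outside the character model means the same wrapper could, in principle, be placed in front of different chatbots.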

EmoAgent’s work has broad implications. As conversational AI becomes increasingly integrated into daily life, the potential for both benefit and harm grows in tandem. Research, including studies by Wang et al. (2024a) and van der Schyff et al. (2023), highlights the promise of LLMs in mental health support, yet it also reveals a critical gap: many character-based agents fail to adhere to essential safety principles. Reports from the Cyberbullying Research Center (2024) and insights from Brown and Halpern (2021) further illustrate instances where AI responses have worsened users’ distress, sometimes with devastating consequences. EmoAgent is a response to these challenges, both as a technical solution and as a call for the AI community to prioritize mental safety. By simulating real-world scenarios and intervening in real time, the framework lays groundwork for AI companions that can be trusted allies rather than potential sources of harm.

EmoAgent’s work is far from complete. While its experimental results are promising, the framework’s real impact will depend on real-world validation and collaboration with mental health experts. Simulations cannot fully capture the complexities of human psychology, and ongoing refinement will be needed to address diverse user needs and cultural contexts. Nevertheless, EmoAgent is a meaningful step toward balancing technological innovation with human well-being. It challenges developers, researchers, and policymakers to rethink the design of conversational AI so that emotional engagement does not come at the cost of mental health. As AI becomes a confidant for millions, EmoAgent is a reminder that safety must be the foundation of connection.