Forget the GUI—experience a native, open-source AI pair programmer that truly understands your codebase.

- Native Terminal Integration: A beautiful, themeable TUI that lives where you work, eliminating context switching between your shell and web-based AI tools.

- Deep Codebase Understanding: Using the Language Server Protocol (LSP), OpenCode automatically maps your project’s structure, which supports more accurate refactoring and reduces hallucinated code.

- Unmatched Flexibility: Support for over 75 LLM providers—including local models and Anthropic’s Claude—combined with powerful scripting capabilities for automation and CI/CD pipelines.

For developers who live and breathe in the command line, the terminal isn’t just a tool; it’s home. It is the engine room where code is written, projects are managed, and systems are orchestrated. While GUI-based AI coding assistants have exploded in popularity, they often introduce friction, pulling developers out of their focused, keyboard-centric environments.

Enter OpenCode, an open-source AI coding agent built from the ground up for the terminal. Fresh off a major rewrite, it is not just another ChatGPT wrapper. OpenCode is a mature, carefully architected system designed for developers who demand power, flexibility, and integration. It brings a responsive, themeable native interface directly to your shell, meeting you exactly where you work.

Seamless Installation and Smart Authentication

Getting OpenCode running is straightforward. It runs on macOS, Linux, and Windows (via WSL) and can be installed through several package managers.

- macOS users: Install directly via Homebrew.

- General install: A direct install script is available for macOS and Linux, and OpenCode can also be installed through Node.js package managers.

Once installed, OpenCode streamlines the often-tedious process of API key management. Running the auth command launches an interactive TUI prompt that guides you through a list of over 75 providers, including Anthropic, OpenAI, and Google. Your API keys are stored securely on your local machine. OpenCode also auto-detects keys from environment variables (such as OPENAI_API_KEY) or from .env files in your project root, so in many cases no manual setup is needed.
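To make the auto-detection idea concrete, here is a minimal Python sketch of how a tool could look for keys in environment variables first and then in a project’s .env file. It is not OpenCode’s actual implementation: the function names, the provider table, and the precedence order (environment before .env) are all assumptions made for illustration. Only OPENAI_API_KEY is taken from the text above.

```python
import os
from pathlib import Path

# Hypothetical mapping of provider names to the environment variables they use.
# OPENAI_API_KEY comes from the article; the Anthropic entry is an assumption.
PROVIDER_ENV_VARS = {
    "openai": "OPENAI_API_KEY",
    "anthropic": "ANTHROPIC_API_KEY",
}


def parse_dotenv(path):
    """Parse simple KEY=VALUE lines from a .env file, skipping comments and blanks."""
    values = {}
    if not path.is_file():
        return values
    for raw in path.read_text(encoding="utf-8").splitlines():
        line = raw.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        # Drop surrounding whitespace and optional quotes around the value.
        values[key.strip()] = value.strip().strip("'\"")
    return values


def detect_keys(project_root, environ=None):
    """Return {provider: key}, preferring real environment variables over .env entries.

    The precedence order is an assumption, not documented OpenCode behavior.
    """
    environ = os.environ if environ is None else environ
    dotenv = parse_dotenv(Path(project_root) / ".env")
    found = {}
    for provider, var in PROVIDER_ENV_VARS.items():
        key = environ.get(var) or dotenv.get(var)
        if key:
            found[provider] = key
    return found
```

Calling `detect_keys(".")` from a project root would return whichever of the listed providers have a key available, which is roughly the information an interactive setup prompt needs in order to skip providers that are already configured.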

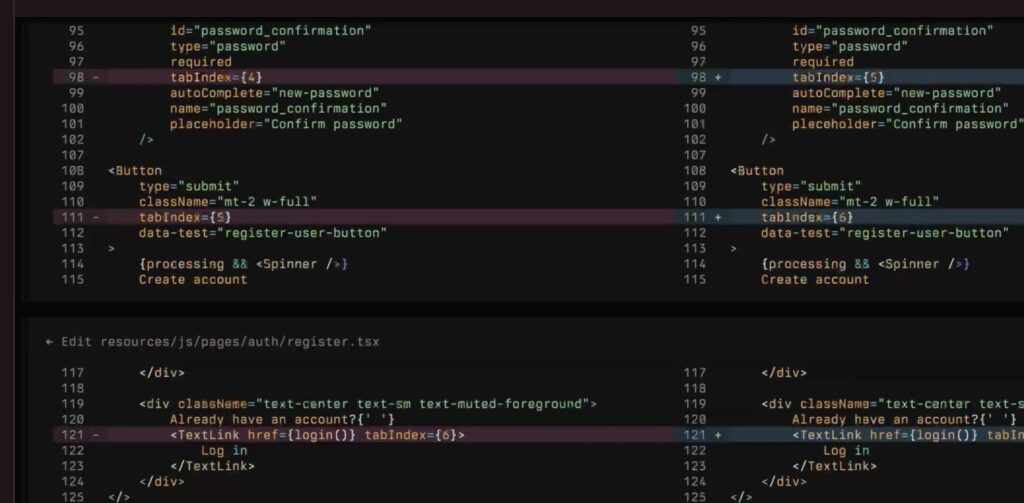

The LSP Advantage: True Code Understanding

What truly separates OpenCode from simple chatbot overlays is its integration with the Language Server Protocol (LSP). When you run the opencode command in your project directory, the agent inspects your codebase, detects the language and frameworks, and silently spins up the appropriate LSP server in the background.

This is the same technology that powers autocompletion and “go-to-definition” in editors like VS Code. By hooking into this, OpenCode gives the LLM a structural map of your software.

- No More Copy-Pasting: You don’t need to manually paste file contents into the prompt.

- Accurate Refactoring: If you ask the agent to “refactor this function,” it understands the function’s signature, dependencies, and call sites.

- Reduced Hallucinations: Because the agent has a real-time map of the code structure, it is far less likely to invent non-existent functions or misuse APIs.
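For readers curious about what “hooking into LSP” involves at the wire level, the sketch below builds and frames a standard `textDocument/definition` request, the same kind of message an editor sends for go-to-definition. The framing (a `Content-Length` header followed by a JSON-RPC body) follows the LSP base protocol. The helper function names are hypothetical, and the article does not describe how OpenCode itself talks to language servers, so treat this as an illustration of the protocol rather than of OpenCode’s internals.

```python
import json


def frame_lsp_message(payload):
    """Encode a JSON-RPC payload with the Content-Length header used by LSP."""
    body = json.dumps(payload, separators=(",", ":")).encode("utf-8")
    header = f"Content-Length: {len(body)}\r\n\r\n".encode("ascii")
    return header + body


def definition_request(request_id, file_uri, line, character):
    """Build a textDocument/definition request; line and character are zero-based."""
    return {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "textDocument/definition",
        "params": {
            "textDocument": {"uri": file_uri},
            "position": {"line": line, "character": character},
        },
    }


def parse_lsp_message(data):
    """Decode one framed message back into a Python dict."""
    header, _, rest = data.partition(b"\r\n\r\n")
    length = None
    for field in header.split(b"\r\n"):
        name, _, value = field.partition(b":")
        if name.strip().lower() == b"content-length":
            length = int(value.strip())
    if length is None:
        raise ValueError("missing Content-Length header")
    return json.loads(rest[:length].decode("utf-8"))
```

A client would write the bytes from `frame_lsp_message(definition_request(...))` to the language server’s standard input and read the framed response back, which is how an agent can learn where a symbol is defined without having the file pasted into a prompt.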

A TUI Built for Flow State

The OpenCode Terminal User Interface (TUI) is designed for visual appeal and high productivity. It features a clean layout with a main chat view, an input box, and a status bar for session details.

Crucially, the interface is fully themeable, allowing you to match the agent’s aesthetic to your existing terminal color scheme. For the power user, a comprehensive set of keybindings allows for complete, mouseless control. You can navigate chats, execute commands, and manage the agent without your hands ever leaving the keyboard, ensuring your flow state remains unbroken.

Advanced Workflows: Scripting and Collaboration

OpenCode is versatile enough to handle both interactive coding sessions and automated tasks.

Scripting with Non-Interactive Mode

Using the opencode run command, you can utilize the agent in a one-shot mode, which is perfect for shell aliases or CI/CD pipelines. For example, you can ask for a quick explanation of a tool:

    opencode run "Explain the most common uses of the 'awk' command with examples"

Advanced flags allow you to target specific session IDs to resume context, specify different models for specific runs, or output data for other tools to consume.
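In a CI job or automation script, the one-shot command can be wrapped in a small helper. The Python sketch below assembles the argument list for `opencode run` and executes it only if the binary is on the PATH. The `--model` and `--session` flag names are assumptions, since the article mentions these capabilities without naming the flags; confirm them with `opencode run --help` for your installed version. The helper names are likewise hypothetical.

```python
import shutil
import subprocess


def build_run_command(prompt, model=None, session=None):
    """Build the argv for a one-shot `opencode run` call.

    The --model and --session flag names are assumptions; confirm them
    with `opencode run --help` before relying on this.
    """
    cmd = ["opencode", "run"]
    if model:
        cmd += ["--model", model]
    if session:
        cmd += ["--session", session]
    cmd.append(prompt)
    return cmd


def run_prompt(prompt, **options):
    """Run the prompt and return stdout, or None when opencode is not on PATH."""
    if shutil.which("opencode") is None:
        return None
    result = subprocess.run(
        build_run_command(prompt, **options),
        capture_output=True,
        text=True,
        check=True,  # raise CalledProcessError on a non-zero exit code
    )
    return result.stdout
```

Passing the prompt as a separate list element, rather than building a shell string, avoids quoting problems when the prompt itself contains quotes, as the awk example does.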

Collaborative Session Sharing

OpenCode also transforms how teams collaborate. After any session, you can generate a unique, shareable URL. This creates a read-only snapshot of the conversation readable in any browser. This is invaluable for:

- Code Reviews: Sharing the logic behind a complex refactor.

- Debugging: Showing a senior developer exactly what steps you took and the errors you encountered.

- Onboarding: Creating “walkthrough” sessions that explain repository tasks to new team members.

Freedom of Choice: The Open Source Philosophy

While OpenCode integrates seamlessly with Anthropic—allowing you to leverage your Claude Pro or Max subscription—it refuses to lock you into a single ecosystem. With support for over 75 LLM providers, you have the ultimate freedom of choice.

You can switch between major cloud providers or opt for privacy-centric local models using tools like Ollama. You can configure a default model in your settings file, or switch providers on the fly using command-line flags. This flexibility ensures that OpenCode adapts to your needs regarding cost, performance, and data privacy.
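The “default in settings, override on the command line” pattern described here can be sketched in a few lines of Python. This is an illustration of the precedence logic only: the `"model"` key, the JSON file layout, and the function names are assumptions, not OpenCode’s documented configuration schema, and the model identifiers in any call are placeholders.

```python
import json
from pathlib import Path


def load_default_model(config_path):
    """Read a default model from a JSON settings file, or None if unset.

    The "model" key and the file layout are assumptions for illustration.
    """
    path = Path(config_path)
    if not path.is_file():
        return None
    return json.loads(path.read_text(encoding="utf-8")).get("model")


def resolve_model(cli_model, config_path):
    """Prefer a model passed on the command line over the configured default."""
    return cli_model or load_default_model(config_path)
```

With this shape, a team can commit a shared default to the repository while an individual developer still points a single run at a local model for privacy or cost reasons.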

OpenCode represents a paradigm shift. It transforms the LLM from a passive chatbot into an active, context-aware agent that lives in your terminal. It respects your workflow, empowers your productivity, and gives you the freedom to code on your own terms.