Groundbreaking advancements in generative AI, neural graphics, and realistic simulations

- NVIDIA Research presents around 20 papers on generative AI and neural graphics, in collaboration with over a dozen universities worldwide.

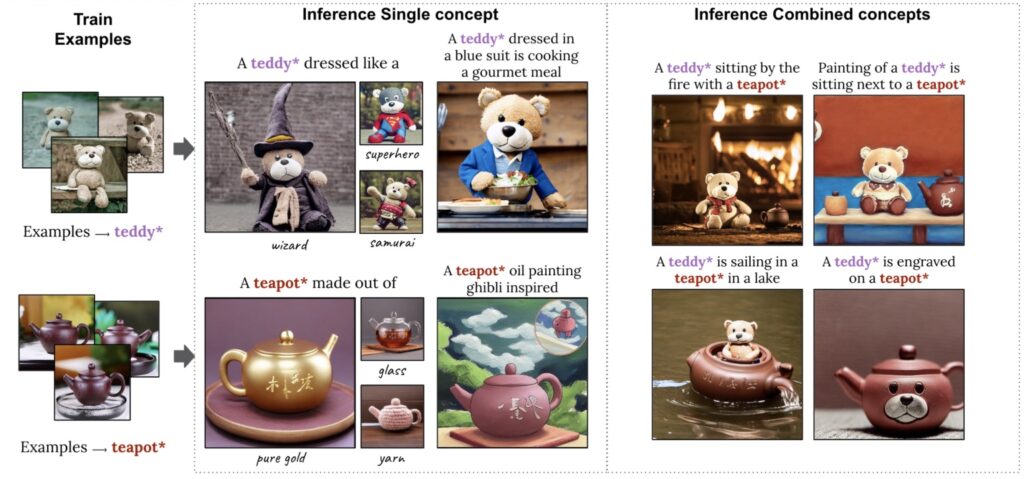

- Innovative research includes generative AI models for text-to-image generation, inverse rendering tools, neural physics models, and neural rendering models.

- The research advancements will aid developers, enterprises, and creators in various industries, including robotics, autonomous vehicles, art, architecture, graphic design, game development, and film.

NVIDIA is presenting a broad set of AI research, focused on generative AI and neural graphics, at the computer graphics conference SIGGRAPH 2023. The company will present around 20 research papers, developed in collaboration with over a dozen universities in the U.S., Europe, and Israel. The papers cover generative AI models that turn text into personalized images, inverse rendering tools that transform still images into 3D objects, neural physics models that simulate complex 3D elements realistically, and neural rendering models that produce real-time, AI-powered visual details.

NVIDIA’s research innovations are regularly shared with developers on GitHub and incorporated into products like the NVIDIA Omniverse platform for metaverse applications and NVIDIA Picasso, a foundry for custom generative AI models for visual design. Years of NVIDIA graphics research have contributed to film-style rendering in games, exemplified by the recently released Cyberpunk 2077 Ray Tracing: Overdrive Mode.

The research advancements showcased at SIGGRAPH will help developers and enterprises rapidly generate synthetic data to populate virtual worlds for robotics and autonomous vehicle training. They will also enable creators in art, architecture, graphic design, game development, and film to produce high-quality visuals more quickly for storyboarding, previsualization, and production.

Highlighted research includes customized text-to-image models, advances in inverse rendering and character creation, and neural physics for realistic simulations, such as simulating tens of thousands of hairs at high resolution in real time. The new hair simulation method, optimized for modern GPUs, significantly reduces simulation times while improving simulation quality, enabling accurate, interactive, physically based hair grooming. In addition, neural rendering research brings film-quality detail to real-time graphics.