Moving beyond handcrafted graphics to real-time, text-guided world building.

- Bridging the AR Gap: EgoEdit addresses the unique challenges of first-person (egocentric) footage—such as rapid motion and hand obstructions—that cause traditional AI video editors to fail.

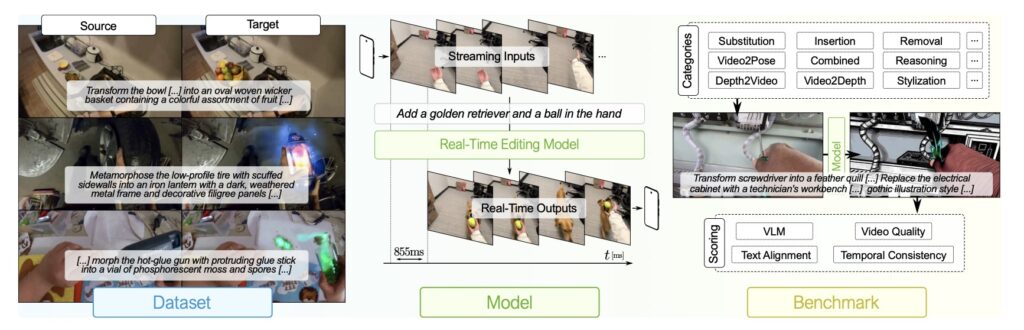

- A Complete Ecosystem: The project introduces a comprehensive suite: EgoEditData (a massive curated dataset), EgoEdit (a real-time streaming model), and EgoEditBench (a rigorous evaluation framework).

- Real-Time Capabilities: By enabling instruction-guided editing on a single GPU with interactive latency, EgoEdit paves the way for AR applications where users can modify their environment simply by speaking.

Altering the perceived world is the “Holy Grail” of Augmented Reality (AR). The ultimate goal is to empower users to immerse themselves in new worlds, transform their surrounding environments, and interact with virtual characters seamlessly. However, for years, this technology has been bottlenecked. Traditional AR experiences rely heavily on complex graphics pipelines and significant expert labor to handcraft every interaction.

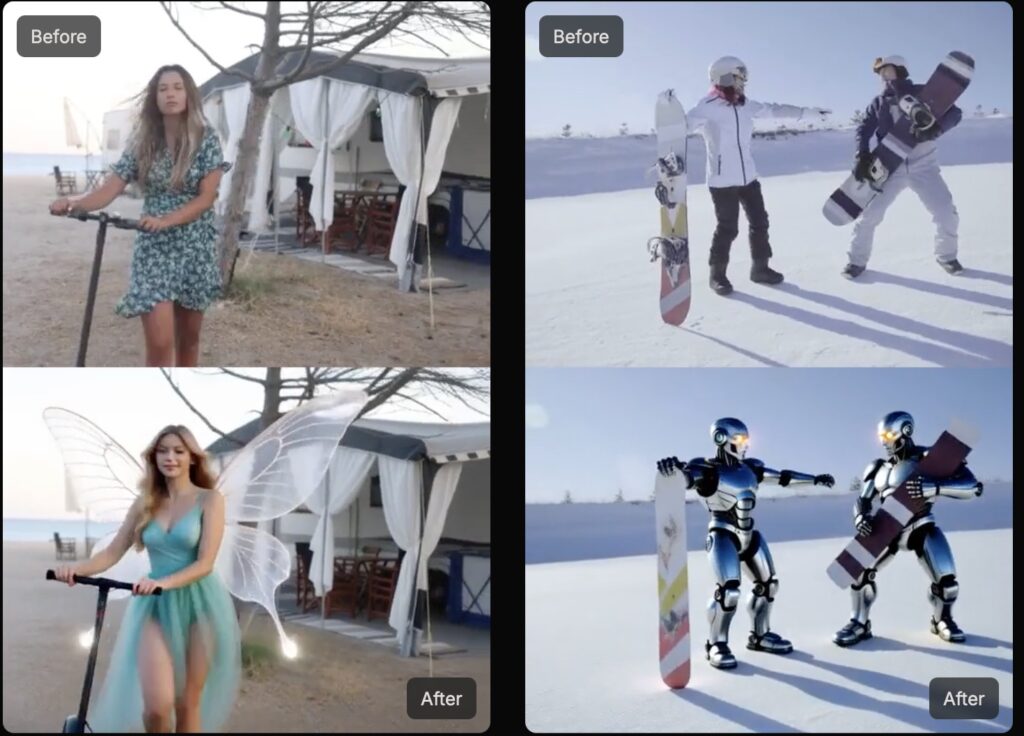

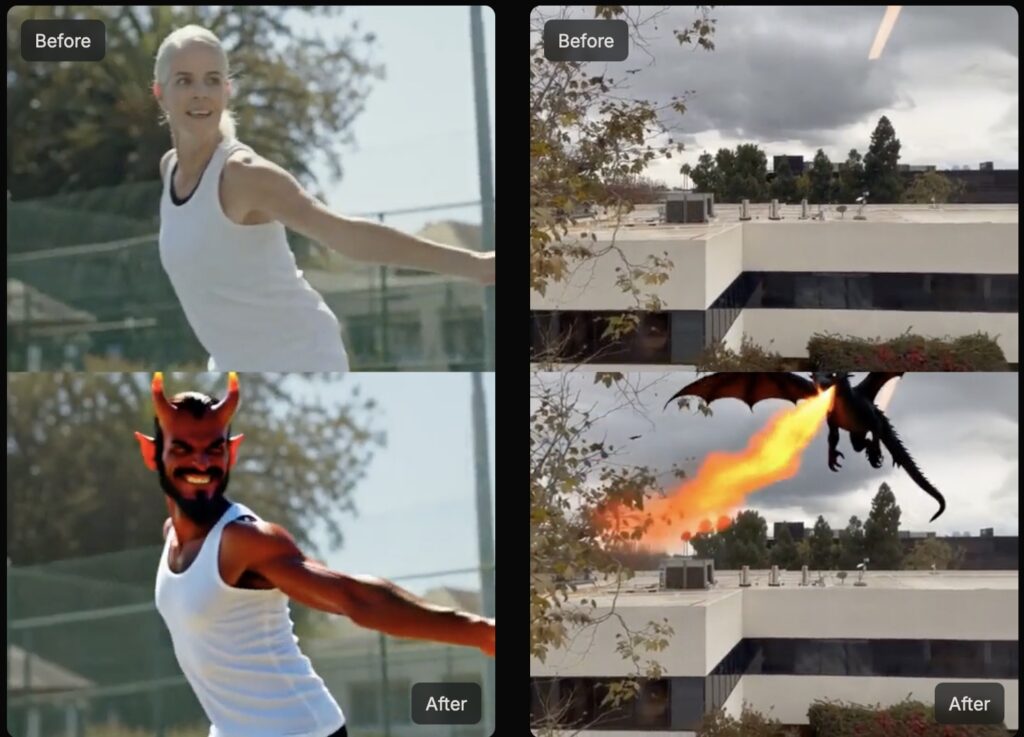

The rapid rise of text-conditioned generative AI raises a provocative question: Can we bypass the manual coding? Can simple language instructions serve as a direct engine for AR, allowing users to add, remove, or modify scene elements while they interact with the world? A new framework, EgoEdit, suggests the answer is yes.

The Egocentric Challenge: Why First-Person is Hard

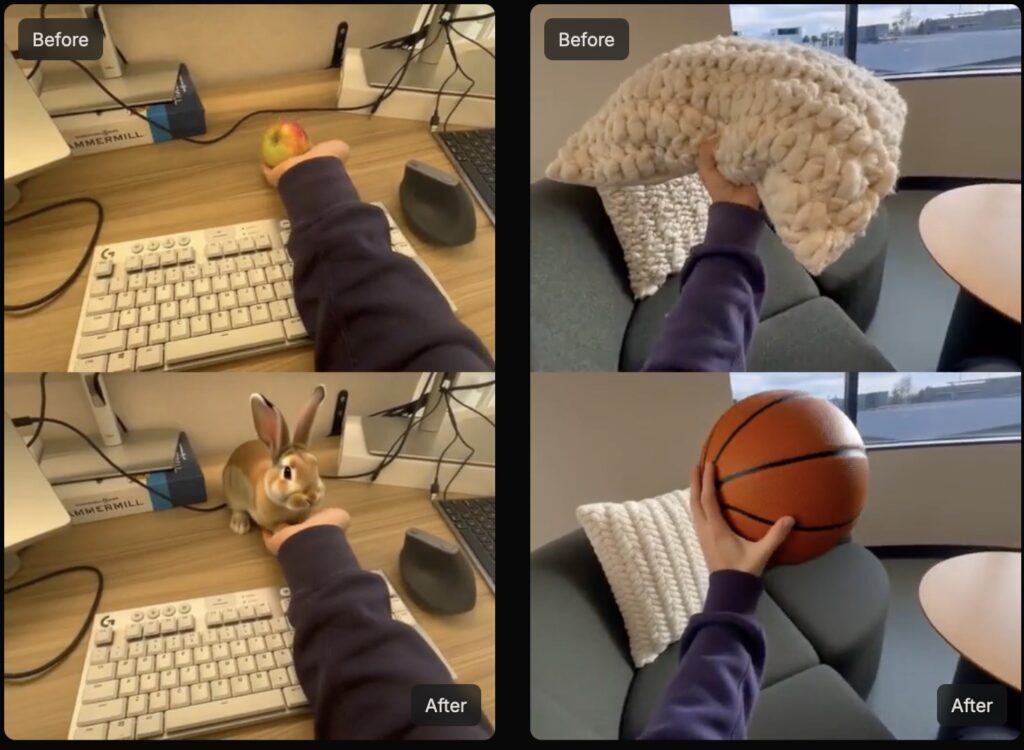

While recent AI video editors have shown impressive results on “third-person” footage (like movie scenes or stable landscape shots), they crumble when applied to egocentric views. First-person video, such as footage from smart glasses or a GoPro, presents unique chaos: rapid “egomotion” (camera motion caused by the wearer’s own head and body movement), motion blur, and frequent hand-object interactions.

This creates a significant “domain gap.” Standard AI models struggle to keep their output temporally stable when the camera is whipping around, and they often erase or distort the user’s hands when editing the object being held. Furthermore, existing pipelines are mostly “offline,” meaning they process video too slowly to be useful for real-time interaction.

The Solution: The EgoEdit Ecosystem

To bridge this gap, researchers have introduced a complete ecosystem designed specifically for instruction-guided egocentric video editing. This ecosystem is built on three pillars:

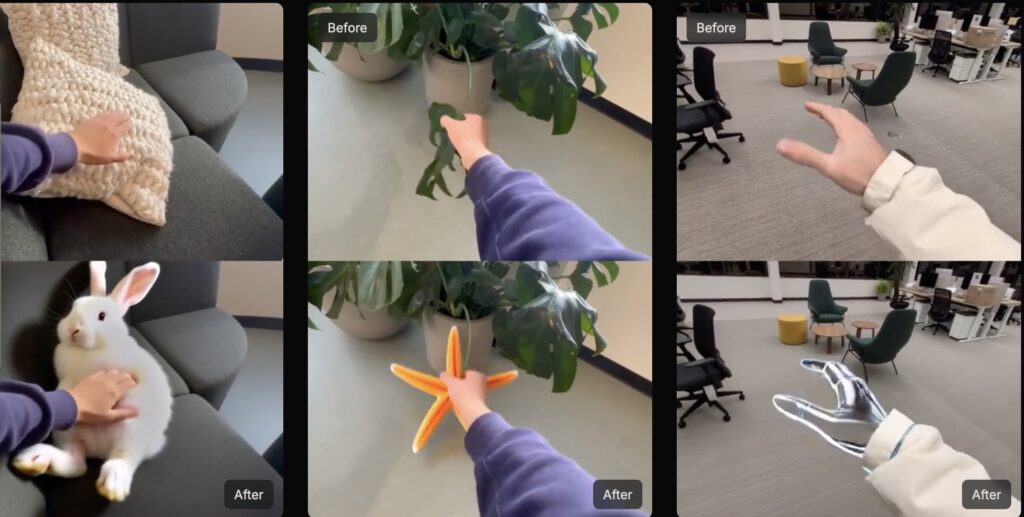

1. EgoEditData: The Foundation

Data is the fuel of AI, and egocentric editing lacked a high-quality fuel source. The team constructed EgoEditData, the first manually curated dataset specifically designed for these scenarios. It features 99.7k editing pairs across 49.7k unique videos. Crucially, the dataset explicitly focuses on preserving hands during edits, so that when a user interacts with a virtual object, their hands remain distinct and realistic.

2. EgoEdit: The Real-Time Editor

The core of the project is the EgoEdit model itself. Unlike predecessors that require heavy processing time, EgoEdit is an instruction-following editor that supports real-time streaming inference on a single GPU. It is trained with a method called “Self Forcing” and processes video in chunks rather than as one complete clip. This allows the model to produce temporally stable results that faithfully follow text instructions without the jarring lag of offline editors.

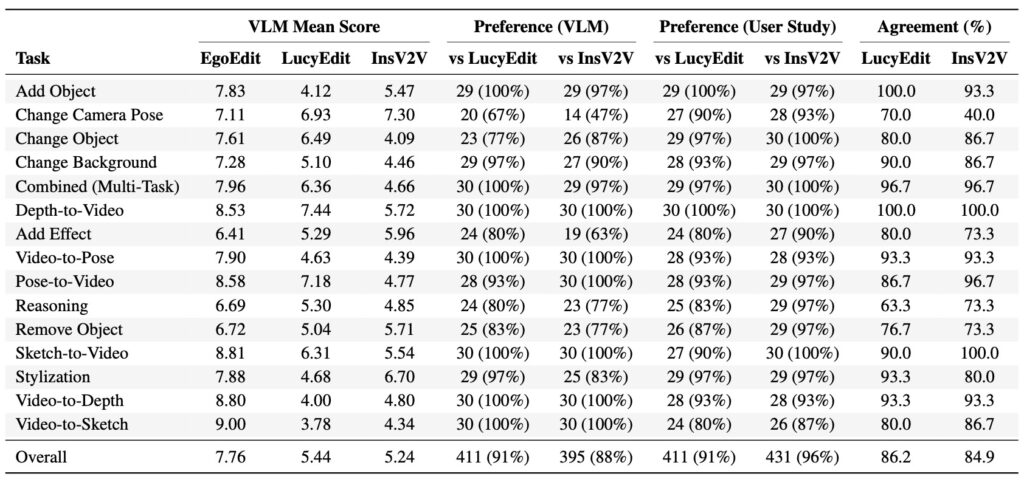

3. EgoEditBench: The Standard of Truth

To prove efficacy, the team introduced EgoEditBench, an evaluation suite targeting instruction faithfulness, hand preservation, and stability under motion. Across these benchmarks, EgoEdit achieved clear gains where existing methods struggled, while maintaining performance comparable to the strongest baselines on general editing tasks.
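To make the idea of chunked, streaming inference more concrete, here is a minimal Python sketch of the data flow only. It is not EgoEdit's implementation: `stream_edit`, `fake_editor`, and the `Frame` type are hypothetical names invented for illustration, and applying the 9-frame chunk size to every chunk (the article only states it for the first one) is an assumption. A real streaming model would likely also carry state from one chunk to the next, which this sketch leaves out.

```python
from typing import Callable, Iterable, Iterator, List

Frame = bytes  # placeholder frame type; a real system would use image arrays

CHUNK_FRAMES = 9  # first-chunk size from the article; assumed here for all chunks


def stream_edit(
    frames: Iterable[Frame],
    edit_chunk: Callable[[List[Frame], str], List[Frame]],
    instruction: str,
    chunk_frames: int = CHUNK_FRAMES,
) -> Iterator[Frame]:
    """Yield edited frames chunk by chunk instead of waiting for the whole video."""
    buffer: List[Frame] = []
    for frame in frames:
        buffer.append(frame)
        if len(buffer) == chunk_frames:
            # A full chunk is ready: pass it to the (hypothetical) editor
            # and emit the edited frames right away.
            yield from edit_chunk(buffer, instruction)
            buffer = []
    if buffer:
        # Flush a trailing partial chunk when the stream ends.
        yield from edit_chunk(buffer, instruction)


def fake_editor(chunk: List[Frame], instruction: str) -> List[Frame]:
    # Stand-in for the model: tags each frame so the data flow is visible.
    return [frame + b"|edited" for frame in chunk]


if __name__ == "__main__":
    source = [f"frame{i}".encode() for i in range(20)]
    edited = list(stream_edit(source, fake_editor, "replace the mug with a teapot"))
    print(len(edited), edited[0])
```

The point of the generator is that the first edited frames become available as soon as the first chunk has been captured and processed, which is what separates a streaming editor from an offline one that needs the full clip up front.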

Performance and the Reality of Real-Time

The results of EgoEdit are promising for the future of interactive AR. The model demonstrates strong generalization to “in-the-wild” videos, handling the messy reality of everyday footage better than its competitors.

However, the transition to real-time is not without its hurdles. The researchers are transparent about the current technical constraints:

- Latency: The model currently has a first-frame latency of 855 ms, which is sufficient for basic interaction. Most of that delay comes from recording the first chunk of video (9 RGB frames) before editing can begin.

- Resolution: To maintain speed, EgoEdit operates at a resolution of 512×384 px at 16 fps, which is lower than the standard 480p resolution users might expect.

- Qualitative Gaps: While the real-time model (EgoEdit-RT) performs well, there are occasional dips in quality compared to slower models, specifically regarding out-of-distribution instructions or when objects become temporarily occluded.
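The latency and resolution figures above can be checked with a little arithmetic. The sketch below rests on two assumptions that the article does not state: that the first chunk is captured at the same 16 fps the model runs at, and that “480p” means 640×480, the 4:3 format matching EgoEdit's aspect ratio.

```python
CHUNK_FRAMES = 9              # frames in the first chunk (from the article)
FPS = 16                      # assumed capture rate, equal to the model's 16 fps
FIRST_FRAME_LATENCY_MS = 855  # reported first-frame latency

# Time spent just recording the first chunk before any editing can start.
capture_ms = CHUNK_FRAMES / FPS * 1000
# Whatever is left covers everything else (model inference, overhead).
remaining_ms = FIRST_FRAME_LATENCY_MS - capture_ms
capture_share = capture_ms / FIRST_FRAME_LATENCY_MS

# Pixel counts: EgoEdit's 512x384 versus an assumed 640x480 for "480p".
model_pixels = 512 * 384
vga_pixels = 640 * 480
pixel_ratio = model_pixels / vga_pixels

print(f"capture: {capture_ms} ms, remaining: {remaining_ms} ms")
print(f"capture share of latency: {capture_share:.1%}")
print(f"pixels relative to 640x480: {pixel_ratio:.0%}")
```

Under these assumptions, recording accounts for 562.5 ms of the 855 ms (about two thirds), leaving roughly 292.5 ms for everything else, and each frame carries 64% of the pixels of a 640×480 frame.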

EgoEdit represents a pivotal shift in how we approach Augmented Reality. By moving away from rigid, handcrafted graphics and toward flexible, instruction-guided generation, we are entering an era where the world can be rewritten with words.

With the public release of EgoEditData and EgoEditBench, the research community now has the foundation to refine these systems further. While challenges regarding resolution and latency remain, EgoEdit has successfully demonstrated that stable, faithful, and interactive first-person video editing is no longer science fiction—it is a solvable engineering challenge.