With a generational leap in hardware and architecture, the new Mistral 3 family on Blackwell systems delivers 10x faster inference, shattering previous performance bottlenecks.

- A 10x Performance Leap: The convergence of NVIDIA’s GB200 NVL72 system and Mistral’s new model architecture delivers a tenfold increase in inference speed and massive energy efficiency gains compared to previous H200 systems.

- Versatility from Cloud to Edge: The new family ranges from the massive Mistral Large 3 (675B parameters) for data centers to the compact Ministral 3 series, optimized for local execution on RTX GPUs and Jetson modules.

- Deep Engineering Integration: Success is driven by an “extreme co-design” approach, utilizing advanced features like TensorRT-LLM Wide Expert Parallelism, native NVFP4 quantization, and disaggregated serving to maximize throughput.

The landscape of artificial intelligence is shifting rapidly. As enterprise demands evolve from simple chatbots to complex, high-reasoning agents capable of long-context understanding, the pressure on hardware infrastructure has intensified. Addressing this challenge, NVIDIA has announced a massive expansion of its strategic collaboration with Mistral AI.

This partnership marks the arrival of the Mistral 3 frontier open model family, a release that signifies a pivotal moment where hardware acceleration and open-source architecture converge. The headline achievement is undeniable: the new models run up to 10x faster on NVIDIA GB200 NVL72 systems compared to the previous generation H200s, promising to solve the latency and cost bottlenecks that have historically plagued large-scale AI deployment.

A Generational Leap: The Blackwell Advantage

In the world of production AI, raw speed is useless without efficiency. The collaboration addresses this by optimizing the Mistral 3 family specifically for the NVIDIA Blackwell architecture.

The NVIDIA GB200 NVL72 doesn’t just offer higher performance; it fundamentally alters the economics of inference. The system exceeds 5,000,000 tokens per second per megawatt (MW) at user interactivity rates of 40 tokens per second. For data centers struggling with power constraints, this efficiency gain is just as critical as the speed boost, ensuring a lower per-token cost while maintaining the high throughput required for real-time applications.

Introducing the Mistral 3 Family

The engine driving this performance is a new suite of models designed to cover every spectrum of AI utility, from massive cloud workloads to local devices.

Mistral Large 3: The Flagship Powerhouse

At the top of the hierarchy sits Mistral Large 3, a state-of-the-art sparse Multimodal and Multilingual Mixture-of-Experts (MoE) model. Trained on NVIDIA Hopper GPUs, it offers parity with top-tier closed models while retaining the flexibility of open weights.

- Total Parameters: 675 Billion

- Active Parameters: 41 Billion

- Context Window: 256K tokens

Ministral 3: Dense Power at the Edge

Complementing the flagship is the Ministral 3 series, designed for speed and versatility. Available in 3B, 8B, and 14B parameter sizes (with Base, Instruct, and Reasoning variants for each), these models utilize the same massive 256K context window. Notably, the Ministral 3 series excels at the GPQA Diamond Accuracy benchmark by utilizing 100 fewer tokens while delivering higher accuracy, making it highly efficient for “on-device” reasoning.

The Engineering Behind the Speed

The “10x” performance claim is not marketing hyperbole; it is the result of a comprehensive optimization stack co-developed by Mistral and NVIDIA engineers through an “extreme co-design” approach.

Wide Expert Parallelism (Wide-EP) To exploit the massive scale of the GB200 NVL72, NVIDIA employed Wide-EP within TensorRT-LLM. While Mistral Large 3 utilizes roughly 128 experts per layer (fewer than some competitors), Wide-EP allows the model to fully utilize the NVLink fabric. This ensures that the model’s massive size does not result in communication bottlenecks, providing high-bandwidth, low-latency performance.

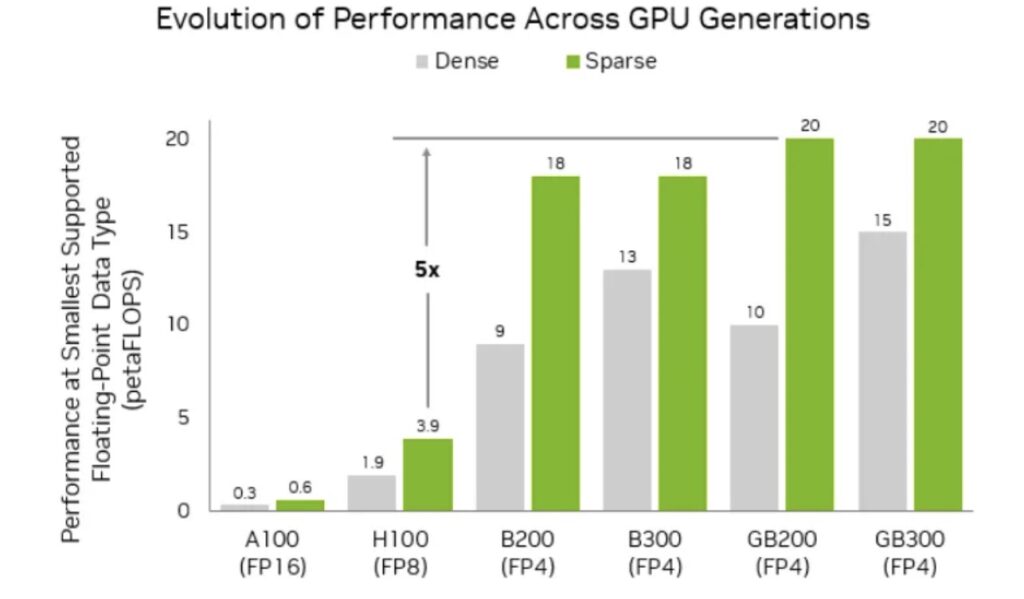

Native NVFP4 Quantization A significant technical advancement is the support for NVFP4, a quantization format native to the Blackwell architecture. Developers can deploy Mistral Large 3 using compute-optimized NVFP4 checkpoints. This reduces memory costs while leveraging higher-precision FP8 scaling factors to strictly maintain accuracy, specifically targeting MoE weights.

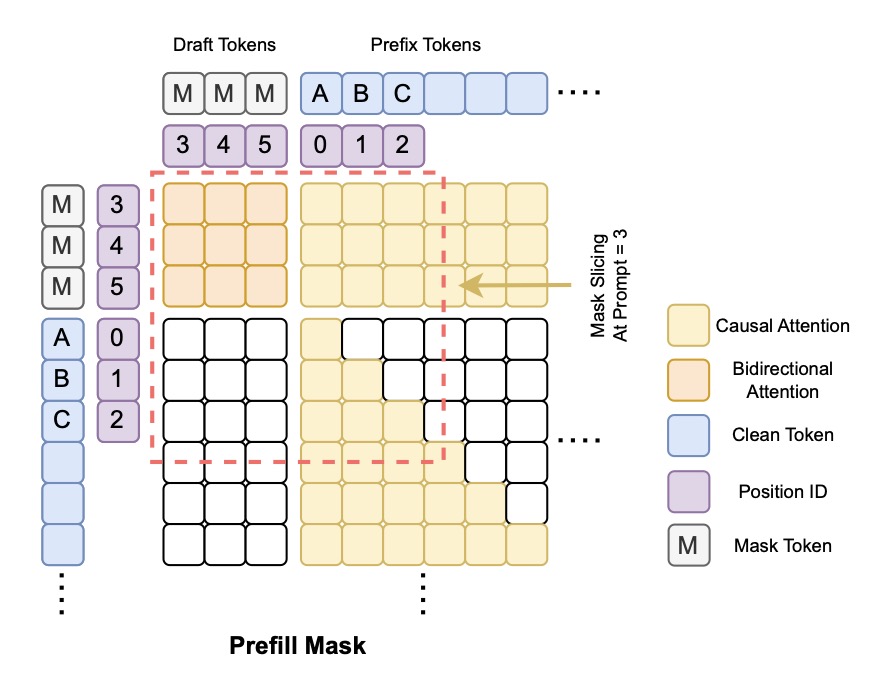

Disaggregated Serving with Dynamo Mistral Large 3 utilizes NVIDIA Dynamo to separate the prefill (processing input) and decode (generating output) phases of inference. By rate-matching these phases, Dynamo boosts performance for long-context workloads (e.g., 8K input/1K output), ensuring high throughput even when the full 256K context window is in play.

From the Cloud to Your Desk

The optimization efforts extend far beyond the data center. Recognizing the need for data privacy and local iteration, the Ministral 3 series has been engineered for edge deployment.

- RTX 5090 Power: On the NVIDIA RTX 5090 GPU, Ministral-3B variants reach blistering speeds of 385 tokens per second, bringing workstation-class AI performance to local PCs.

- Robotics & Edge: For developers using NVIDIA Jetson Thor, the Ministral-3-3B-Instruct model achieves up to 273 tokens per second (at a concurrency of 8), empowering the next generation of robotics.

NVIDIA has also collaborated with the open-source community—including Llama.cpp, Ollama, SGLang, and vLLM—to ensure these models are accessible regardless of the framework used.

A New Standard for Open Intelligence

The release of the NVIDIA-accelerated Mistral 3 family represents a major leap for the open-source community. By offering frontier-level performance backed by a robust hardware optimization stack, Mistral and NVIDIA are meeting developers where they are.

Whether utilizing the massive scale of the GB200 NVL72 or the edge-friendly density of an RTX 5090, this partnership delivers a scalable path for AI. With future optimizations like speculative decoding with multitoken prediction (MTP) on the horizon, the Mistral 3 family is poised to become the foundation for the next generation of intelligent applications.