With “TorchTPU,” Google isn’t just building a better chip; they are dismantling the software prison that keeps the AI world locked inside Nvidia’s ecosystem.

- The Secret Weapon: Google is developing “TorchTPU,” a project designed to make PyTorch run natively on Google’s hardware, bypassing the need for Nvidia’s proprietary CUDA software.

- The Strategy: By partnering with Meta and potentially open-sourcing the technology, Google aims to eliminate the massive “switching costs” that currently force companies to pay Nvidia’s premium prices.

- The Stakes: If successful, this move turns AI chips into a commodity, threatening the foundational software lock-in that justifies Nvidia’s massive market valuation.

A recent leak has revealed that Google is launching a direct, strategic attack on Nvidia’s most valuable asset. The target isn’t the H100 chip or the next generation of Blackwell GPUs. The target is Nvidia’s software.

For years, Nvidia has maintained a legendary lock-in on the AI industry, building a $4 trillion empire protected by a formidable defensive wall. Google’s secret project, internally dubbed TorchTPU, is designed to breach that wall. If it works, the “Nvidia is unstoppable” narrative dies, and the economics of the AI revolution change overnight.

The “Software Prison” of CUDA

To understand the magnitude of this leak, one must understand why Nvidia dominates. Wall Street analysts often refer to CUDA (Compute Unified Device Architecture) as Nvidia’s “strongest defensive wall.”

PyTorch is among the most widely used AI frameworks on Earth; for many AI developers it is the default tool. However, PyTorch’s GPU support grew up around CUDA. This created a scenario where buying Nvidia chips wasn’t just a hardware purchase: it was a subscription to an entire ecosystem.

Even when Nvidia raises prices or faces supply shortages, companies like Oracle, Microsoft, and OpenAI stay locked in. Why? Because the “switching costs” are astronomical. Moving away from Nvidia historically required:

- Millions of dollars in engineering work.

- Months of code rewrites.

- Significant performance drops.

This isn’t just a hardware moat; it is a software prison. Until now, companies had no escape route.


Enter TorchTPU: The Escape Route

The leak reveals that Google has identified the specific mechanism to break this lock-in.

The irony of the current market is that Google’s TPU (Tensor Processing Unit) chips are actually highly competitive. They offer good performance, along with better availability and lower costs than Nvidia’s GPUs. However, they have sat largely unused by the broader developer community. The reason? Language barriers.

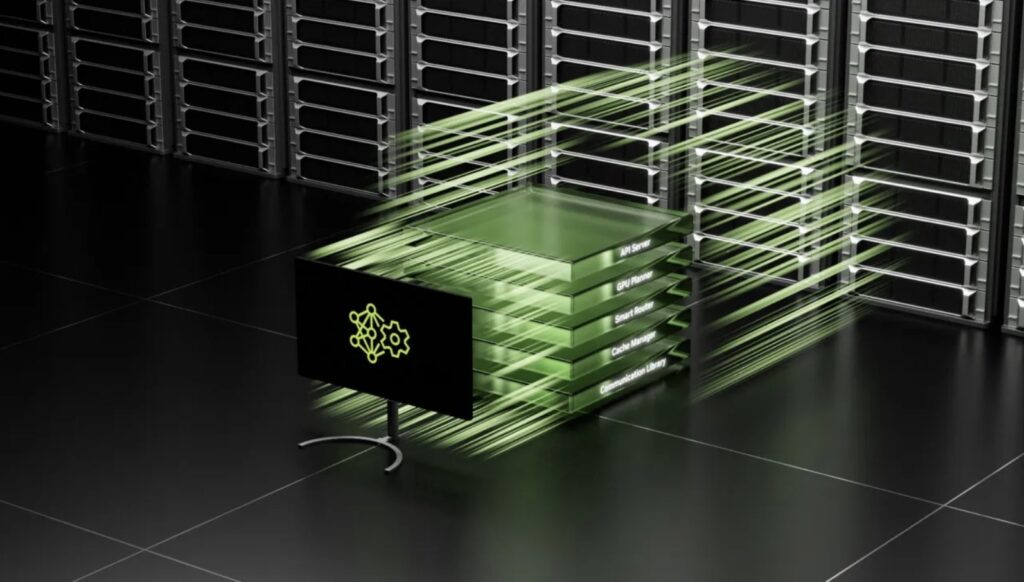

Google’s chips were optimized for JAX (Google’s own open-source framework), not PyTorch. For a developer to use a Google TPU, they would have to rewrite large parts of their codebase. Nobody wanted to do that, so they continued fighting for expensive Nvidia allocations.

TorchTPU changes the equation entirely.

- It allows PyTorch to run natively on Google hardware.

- It promises no rewrites and no performance loss.

- It reduces the engineering time to switch from months to minutes.
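To make “no rewrites” concrete, here is a toy sketch of backend dispatch: user code is written once against a framework-level operation, and hardware-specific kernels are registered underneath it. Every name below (`KERNELS`, `register`, `add`, `model`, the `"gpu"` and `"tpu"` labels) is hypothetical and chosen for illustration; this is not PyTorch’s or TorchTPU’s actual API, whose details have not been published.

```python
# Toy model of backend dispatch. All names are hypothetical; this is
# NOT the real PyTorch or TorchTPU API, only an illustration of the idea.
from typing import Callable, Dict, List

Vector = List[float]
Kernel = Callable[[Vector, Vector], Vector]

# op name -> backend name -> kernel implementation
KERNELS: Dict[str, Dict[str, Kernel]] = {}


def register(op: str, backend: str) -> Callable[[Kernel], Kernel]:
    """Decorator that registers a kernel for an (op, backend) pair."""
    def wrap(fn: Kernel) -> Kernel:
        KERNELS.setdefault(op, {})[backend] = fn
        return fn
    return wrap


@register("add", "gpu")
def _add_gpu(a: Vector, b: Vector) -> Vector:
    # Stand-in for a vendor-specific GPU kernel.
    return [x + y for x, y in zip(a, b)]


@register("add", "tpu")
def _add_tpu(a: Vector, b: Vector) -> Vector:
    # Stand-in for a TPU kernel: same contract, separate implementation.
    return [y + x for x, y in zip(a, b)]


def add(a: Vector, b: Vector, device: str) -> Vector:
    """Framework-level op: routes the call to the kernel for `device`."""
    try:
        kernel = KERNELS["add"][device]
    except KeyError:
        raise NotImplementedError(f"no 'add' kernel for device {device!r}")
    return kernel(a, b)


def model(x: Vector, device: str) -> Vector:
    """User code: written against the framework op, not a backend."""
    return add(add(x, x, device), x, device)  # elementwise 3 * x


if __name__ == "__main__":
    print(model([1.0, 2.0], "gpu"))
    print(model([1.0, 2.0], "tpu"))
```

In this toy, `model` never changes when the device string does. The switching cost lives entirely in whether someone has registered a complete, fast set of kernels for the new backend, which is the gap a project like TorchTPU would have to close.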

Google is reportedly partnering with Meta, the company that created PyTorch and remains one of its main contributors, to make this integration seamless. By aligning with the tools developers already use, rather than forcing them to adapt to Google’s own tools, Google is removing the friction that protected Nvidia’s monopoly.

Commoditizing the Hardware

The implications of this project are staggering for the industry’s financial landscape. Google is reportedly considering open-sourcing parts of the TorchTPU stack to speed up adoption. This suggests Google is willing to give the software away for free just to break Nvidia’s grip.

If TorchTPU succeeds, the “switching cost” evaporates. Cloud providers like Amazon (building Trainium) and Microsoft (building Maia) are also eager to escape Nvidia’s monopoly pricing. An open-sourced TorchTPU would show them how such a software bridge can be built for their own chips.

When software lock-in disappears, hardware becomes a commodity. Commodity chip makers compete on price, not ecosystem exclusivity. Currently, Nvidia trades at roughly 50x earnings because the market views its software moat as untouchable. Competitors like AMD trade at roughly 25x. If Google removes the software advantage, Nvidia risks being repriced as “just another chip company.”
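The repricing risk can be put in rough numbers. The sketch below takes the article’s own figures (a roughly $4 trillion valuation, roughly 50x earnings today, roughly 25x for a peer like AMD) and assumes earnings stay constant while only the multiple changes. That constant-earnings assumption and the function name `repriced_market_cap` are illustrative choices, not a forecast.

```python
def repriced_market_cap(market_cap: float, current_pe: float, new_pe: float) -> float:
    """Market cap implied by a new P/E multiple, holding earnings constant."""
    if current_pe <= 0 or new_pe <= 0:
        raise ValueError("P/E multiples must be positive")
    implied_earnings = market_cap / current_pe  # annual earnings implied by today's multiple
    return implied_earnings * new_pe


# Approximate figures quoted in the article (assumptions, not live market data).
nvidia_cap = 4.0e12   # ~$4 trillion
current_pe = 50       # ~50x earnings
peer_pe = 25          # ~25x earnings

implied = repriced_market_cap(nvidia_cap, current_pe, peer_pe)
print(f"Implied market cap at {peer_pe}x: ${implied / 1e12:.1f} trillion")
# prints "Implied market cap at 25x: $2.0 trillion"
```

Under these assumptions, halving the multiple halves the valuation: about $2 trillion of market value rests on the premium the market assigns to the software moat.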

The Race to 2026

Jensen Huang and Nvidia are now in a race against time. Their response options are limited: they cannot buy Google, they cannot kill PyTorch (an open-source project that Meta created and that now sits under the Linux Foundation), and they cannot stop open-source adoption. Their only play is to improve CUDA faster than Google can catch up.

Google needs an estimated 12 to 18 months to make TorchTPU production-ready. While investors react to daily fluctuations—Nvidia and Google were both down roughly 2% following broader market moves—the market has not yet priced in this structural threat.

Investors currently see TorchTPU as just “another competitor.” They fail to realize it is an attack on the foundation of Nvidia’s valuation. As companies realize AI infrastructure costs are unsustainable, the arrival of a plug-and-play alternative in 2026 could mark the end of Nvidia’s absolute dominance.