How URAE is Redefining Text-to-Image Diffusion Models with Data and Parameter Efficiency

- Synthetic Data as a Game-Changer: URAE leverages synthetic data from teacher models to significantly boost training convergence, even with limited datasets.

- Parameter Efficiency Redefined: When synthetic data is unavailable, fine-tuning only the minor components of weight matrices outperforms widely used low-rank adapters while remaining parameter-efficient.

- Breaking Resolution Barriers: URAE matches state-of-the-art closed-source models at 2K using only 3,000 samples and 2,000 iterations, and sets new benchmarks for 4K text-to-image generation.

The field of text-to-image diffusion models has advanced rapidly in recent years, enabling the creation of striking visual content from simple textual prompts. However, generating high-resolution images remains a significant challenge, especially when training data and computational resources are scarce. Enter Ultra-Resolution Adaptation with Ease (URAE), a framework that addresses these limitations by focusing on two critical aspects: data efficiency and parameter efficiency. This article explores how URAE approaches high-resolution image generation, its technical innovations, and its broader implications for research and real-world applications.

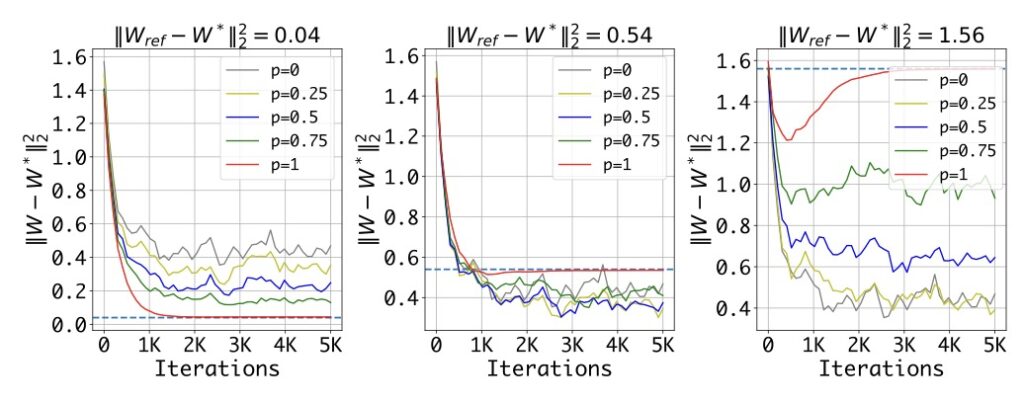

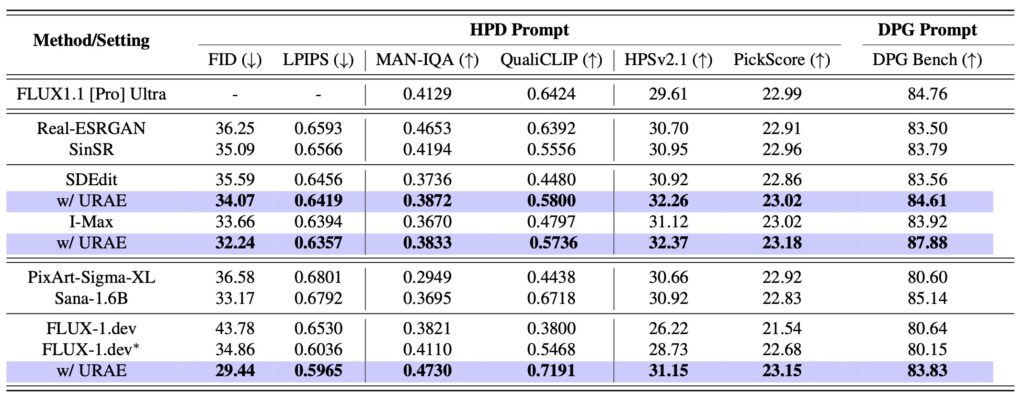

Training text-to-image diffusion models for ultra-resolution settings is no small feat. High-resolution generation demands vast amounts of data and computational power, which are often inaccessible to researchers and developers. Traditional methods, such as low-rank adapters, have provided some relief but fall short when synthetic data is unavailable. URAE tackles these challenges head-on by introducing a set of innovative strategies that optimize both data and parameter usage, enabling high-quality image generation with minimal resources.
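Why does resolution get so expensive? A back-of-the-envelope token count helps. The sketch below assumes a FLUX-style latent pipeline in which an 8x-downsampling VAE is followed by 2x2 latent patches, so each image token covers a 16x16 pixel area. These factors, the helper name `image_tokens`, and treating 2K and 4K as 2048- and 4096-pixel squares are illustrative assumptions, not figures from the article.

```python
def image_tokens(height: int, width: int, vae_factor: int = 8, patch: int = 2) -> int:
    """Image-token count for a FLUX-style pipeline (assumed 8x VAE, 2x2 patches)."""
    step = vae_factor * patch  # pixels covered by one token along each side
    return (height // step) * (width // step)

BASE = image_tokens(1024, 1024)

for side in (1024, 2048, 4096):
    n = image_tokens(side, side)
    # Full self-attention compares every token with every other token: O(n^2).
    rel_attention = n**2 / BASE**2
    print(f"{side}x{side}: {n} tokens, {rel_attention:.0f}x the attention cost of 1024x1024")

# prints:
# 1024x1024: 4096 tokens, 1x the attention cost of 1024x1024
# 2048x2048: 16384 tokens, 16x the attention cost of 1024x1024
# 4096x4096: 65536 tokens, 256x the attention cost of 1024x1024
```

Under these assumptions, going from 1024 to 4096 pixels per side multiplies the token count by 16 and the cost of full self-attention by 256, which is why ultra-resolution training and inference are so demanding.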

A Catalyst for Training Convergence

One of URAE’s key innovations is its use of synthetic data generated by teacher models. Synthetic data serves as a powerful tool to accelerate training convergence, especially in data-scarce scenarios. By training on high-quality synthetic samples, URAE shows that even small datasets can yield strong high-resolution results. This approach not only reduces the dependency on large-scale real-world datasets but also opens new avenues for training models in resource-constrained environments.
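Conceptually, the synthetic training set is just a collection of prompt and image pairs in which the images come from a stronger teacher model rendered at the target resolution. The following is a minimal sketch of that assembly step only; `build_synthetic_set`, `Sample`, and `dummy_teacher` are hypothetical names, and the placeholder teacher returns random pixels so the code runs without any real model.

```python
from dataclasses import dataclass
from typing import Callable, List

import numpy as np

# Hypothetical teacher signature: (prompt, height, width) -> H x W x 3 image.
Teacher = Callable[[str, int, int], np.ndarray]

@dataclass
class Sample:
    prompt: str
    image: np.ndarray  # H x W x 3, float pixel values in [0, 1]

def build_synthetic_set(prompts: List[str], teacher: Teacher,
                        height: int, width: int) -> List[Sample]:
    """Pair every prompt with an image rendered by the teacher at the target resolution."""
    return [Sample(p, teacher(p, height, width)) for p in prompts]

def dummy_teacher(prompt: str, height: int, width: int) -> np.ndarray:
    """Placeholder teacher returning random pixels so the sketch runs without a model."""
    rng = np.random.default_rng(len(prompt))  # deterministic per prompt length
    return rng.random((height, width, 3))

dataset = build_synthetic_set(["a lighthouse at dusk", "a red fox in fresh snow"],
                              dummy_teacher, height=64, width=64)
print(len(dataset), dataset[0].image.shape)  # 2 (64, 64, 3)
```

In a real setup the teacher would be an existing high-resolution generator, and the resulting pairs would feed the same fine-tuning loop as real data.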

Fine-Tuning for Superior Performance

When synthetic data is unavailable, URAE shifts its focus to parameter efficiency. Low-rank adapters such as LoRA learn a small low-rank update on top of each frozen weight matrix, but that update is not tied to any particular part of the matrix’s singular-value spectrum. URAE instead fine-tunes only the minor components of the weight matrices, that is, the directions associated with their smallest singular values, while the dominant components stay frozen. According to the paper, this outperforms widely used low-rank adapters when synthetic data is unavailable, while keeping the number of trainable parameters small and training efficient.
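A minimal NumPy sketch of the idea, assuming "minor components" means the singular directions with the smallest singular values of each weight matrix. The function names, the symmetric square-root split of singular values between the two factors, and the toy shapes are illustrative choices, not URAE’s published code. During fine-tuning, only `B` and `A` would receive gradients.

```python
import numpy as np

def split_minor_components(weight: np.ndarray, rank: int):
    """Split `weight` into a frozen major part and trainable minor factors.

    With W = U diag(S) V^T (singular values in descending order), the last
    `rank` components are the minor ones. They become the trainable factors
    B (out x rank) and A (rank x in); everything else stays frozen, so
    W_major + B @ A reproduces W exactly before any training.
    """
    U, S, Vt = np.linalg.svd(weight, full_matrices=False)
    k = len(S) - rank                       # number of major components kept frozen
    W_major = (U[:, :k] * S[:k]) @ Vt[:k, :]
    sqrt_s = np.sqrt(S[k:])                 # share each minor singular value between B and A
    B = U[:, k:] * sqrt_s                   # (out, rank), trainable
    A = sqrt_s[:, None] * Vt[k:, :]         # (rank, in), trainable
    return W_major, B, A

def adapted_forward(x: np.ndarray, W_major: np.ndarray, B: np.ndarray, A: np.ndarray) -> np.ndarray:
    """Linear layer y = x W^T using the frozen major part plus the trainable minor factors."""
    return x @ (W_major + B @ A).T

rng = np.random.default_rng(0)
W = rng.standard_normal((64, 32))           # toy (out_features, in_features) weight
W_major, B, A = split_minor_components(W, rank=4)

print(np.allclose(W_major + B @ A, W))      # True: lossless at initialization
print(B.size + A.size, "trainable vs", W.size, "total")  # 384 trainable vs 2048 total
```

Standard LoRA also starts from the unchanged weight (its second factor is initialized to zero), but its update is free to point anywhere; the split above instead confines training to the subspace spanned by the smallest singular directions.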

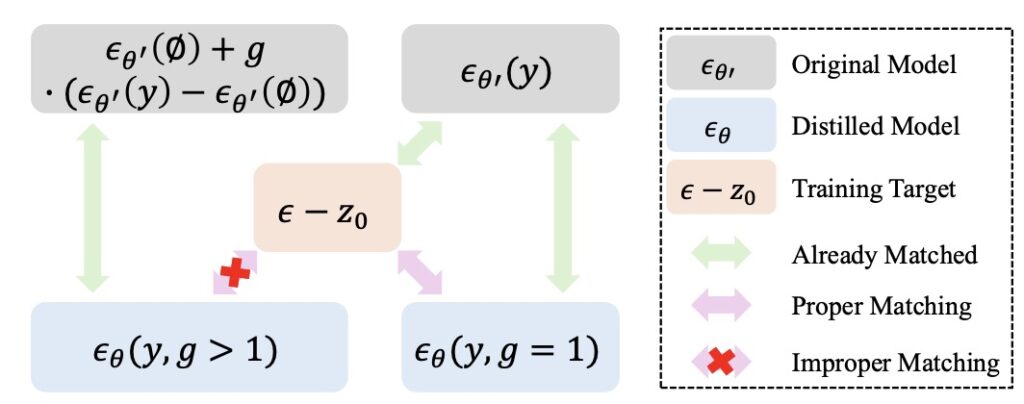

The Role of Classifier-Free Guidance

For models trained with guidance distillation, such as FLUX, URAE shows that disabling classifier-free guidance during adaptation, i.e., setting the guidance scale to 1, is crucial for satisfactory performance. This insight is particularly valuable for developers working with guidance-distilled models, because it is a one-parameter change that simplifies the adaptation process while markedly improving results.
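To see why a scale of 1 amounts to "no guidance", recall the standard classifier-free guidance combination, sketched here in NumPy. In a guidance-distilled model the scale is typically supplied as a conditioning input and the model learns to emulate this combination in a single pass; how a particular model wires that input is an assumption beyond the article, and the function and variable names are hypothetical.

```python
import numpy as np

def cfg_combine(pred_uncond: np.ndarray, pred_cond: np.ndarray, scale: float) -> np.ndarray:
    """Classifier-free guidance: move from the unconditional prediction toward
    (and, for scale > 1, past) the conditional one."""
    return pred_uncond + scale * (pred_cond - pred_uncond)

rng = np.random.default_rng(0)
pred_uncond = rng.standard_normal(4)
pred_cond = rng.standard_normal(4)

# At scale 1 the unconditional term cancels: guidance is effectively off.
print(np.allclose(cfg_combine(pred_uncond, pred_cond, 1.0), pred_cond))  # True
# At a larger scale (3.5 is an arbitrary example) the output overshoots the conditional prediction.
print(np.allclose(cfg_combine(pred_uncond, pred_cond, 3.5), pred_cond))  # False
```

Setting the scale to 1 during adaptation therefore asks the model for its plain conditional prediction rather than an extrapolated, guided one.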

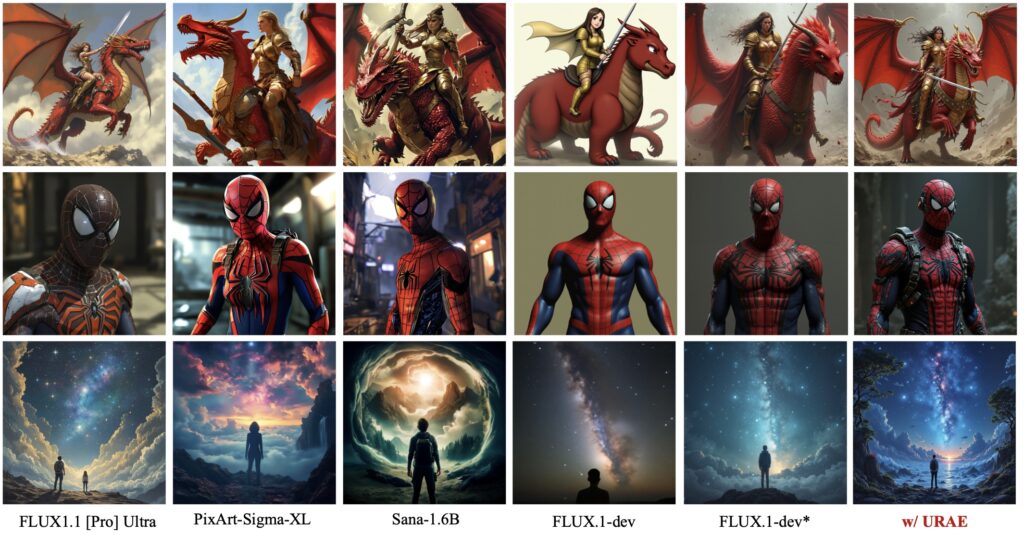

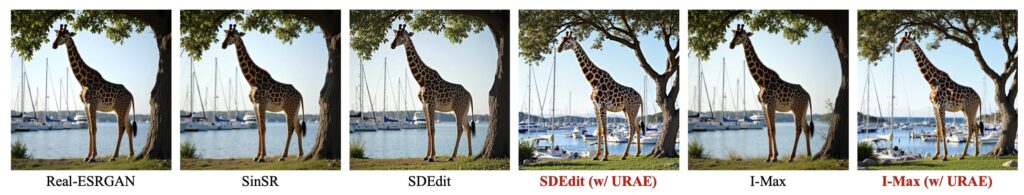

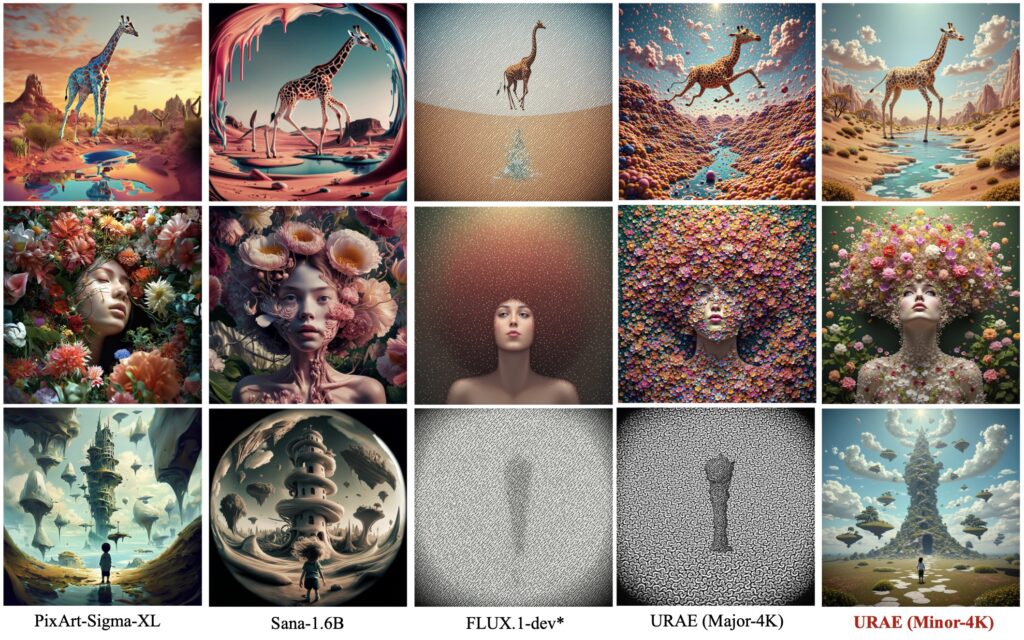

Benchmarking URAE: 2K and 4K Generation

Extensive experiments validate URAE’s effectiveness. With only 3,000 samples and 2,000 iterations, it achieves 2K-generation performance comparable to state-of-the-art closed-source models such as FLUX1.1 [Pro] Ultra. It also sets new benchmarks for 4K-resolution generation. Because these results require so little data and compute, URAE has the potential to democratize high-resolution image generation and make it accessible to a much wider audience.

Future Directions: Balancing Quality and Efficiency

While URAE represents a significant leap forward, there is still room for improvement. Its current models are less efficient at inference time than some recent high-resolution methods. Future research could focus on architectural optimizations that streamline ultra-resolution generation while balancing quality and efficiency. Additionally, integrating URAE into multi-modal large language models could unlock more versatile capabilities for AI-driven content creation.

Broader Implications and Ethical Considerations

URAE’s advancements have far-reaching implications for both research and real-world applications. By enabling richer and more detailed visual content, URAE could benefit domains such as digital art, virtual reality, advertising, and scientific visualization. However, these advancements also raise important ethical considerations. High-fidelity images could be misused to create deceptive content, and the computational demands of large-scale generation can have environmental impacts. To address these challenges, the URAE authors emphasize responsible development practices, including efficient training strategies and transparent model documentation.

A New Era for Text-to-Image Models

URAE offers a practical and efficient answer to one of the field’s most pressing challenges: high-resolution image generation under tight data and compute budgets. By leveraging synthetic data, optimizing parameter efficiency, and rethinking guidance strategies, URAE matches leading closed-source models at 2K and sets new benchmarks at 4K while minimizing resource requirements. As we look to the future, URAE’s innovations promise to unlock new possibilities in AI-driven content creation, empowering researchers, developers, and creators alike to push the boundaries of visual storytelling.

With its blend of technical ingenuity and practical applicability, URAE is more than a tool: it is a catalyst for the next generation of text-to-image diffusion models. Whether you’re a researcher, artist, or developer, URAE offers a glimpse into the future of high-resolution image generation.