Prioritizing Important Tokens to Achieve Over 3x Efficiency Improvement for 4K to 128K Token Lengths

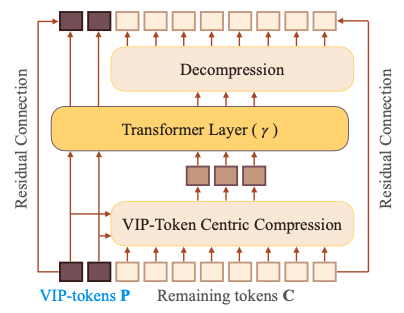

- Vcc (VIP-token centric compression) tackles the challenge of efficiently processing ultra-long sequences in Transformer models by compressing input representations.

- The method selectively compresses input sequences based on their impact on approximating the representation of VIP-tokens, resulting in more than 3x efficiency improvement.

- Vcc can be directly incorporated into existing pretrained models with additional training and achieves competitive or better performance on various tasks.

Transformer models are fundamental to natural language processing (NLP) and computer vision, but handling ultra-long sequences (e.g., more than 16K tokens) remains challenging due to the quadratic cost associated with sequence length. A recent paper proposes a method called VIP-token centric compression (Vcc) to significantly reduce the complexity dependency on sequence length by compressing the input representation at each layer.
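To make the quadratic cost concrete, the short sketch below counts the pairwise query-key scores that one attention head computes in one layer of standard full self-attention. The function name is illustrative, and treating 4K, 16K, and 128K as 4096, 16384, and 131072 tokens is an assumption about rounding rather than something stated in the paper.

```python
def attention_score_count(seq_len: int) -> int:
    """Query-key scores computed by one head in one full self-attention layer."""
    return seq_len * seq_len


# Assumed exact lengths for "4K", "16K", and "128K".
for n in (4096, 16384, 131072):
    print(f"{n:>7} tokens -> {attention_score_count(n):,} scores")

# How much the score count grows between the shortest and longest length.
growth = attention_score_count(131072) // attention_score_count(4096)
```

Going from 4K to 128K multiplies the sequence length by 32 but the number of scores by 1024, which is why compressing the representation before attention matters most at the long end of the range.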

The Vcc method exploits the fact that in many tasks, only a small subset of special tokens (VIP-tokens) is most relevant to the final prediction. Vcc compresses each part of the input sequence according to how much that part affects the approximation of the VIP-tokens’ representation. On 4K and 16K lengths, this yields more than 3x efficiency improvement over baselines while maintaining or improving accuracy.
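The idea of compressing a sequence according to its relevance to VIP-tokens can be illustrated with a toy sketch. This is not the algorithm from the paper: it is a simplified stand-in that scores each token by the average softmax attention weight it receives from the VIP-tokens, keeps the highest-scoring tokens as they are, and mean-pools the remaining tokens in fixed-size runs. The function `compress_around_vip` and its parameters `keep` and `segment` are hypothetical names introduced only for this example.

```python
import math


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def softmax(scores):
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def compress_around_vip(vip, tokens, keep, segment):
    """Toy illustration (not the paper's method).

    Keeps the `keep` tokens that VIP-tokens attend to most and mean-pools
    the remaining tokens in runs of at most `segment` tokens.
    """
    scale = math.sqrt(len(tokens[0]))
    # importance[i] = average attention weight VIP-tokens place on token i
    importance = [0.0] * len(tokens)
    for v in vip:
        weights = softmax([dot(v, t) / scale for t in tokens])
        for i, w in enumerate(weights):
            importance[i] += w / len(vip)

    ranked = sorted(range(len(tokens)), key=lambda i: importance[i], reverse=True)
    kept = set(ranked[:keep])

    compressed, bucket = [], []

    def flush():
        # Replace the pending low-importance tokens with their mean vector.
        if bucket:
            compressed.append([sum(col) / len(bucket) for col in zip(*bucket)])
            bucket.clear()

    for i, t in enumerate(tokens):
        if i in kept:
            flush()
            compressed.append(list(t))  # important token stays uncompressed
        else:
            bucket.append(t)
            if len(bucket) == segment:
                flush()
    flush()
    return compressed


# Usage: one VIP-token, eight input tokens, only token 3 aligns with the VIP-token.
vip = [[1.0, 0.0]]
tokens = [[0.0, 1.0]] * 3 + [[5.0, 0.0]] + [[0.0, 1.0]] * 4
compressed = compress_around_vip(vip, tokens, keep=1, segment=4)
```

In this example the eight input tokens shrink to three vectors: one pooled vector for the tokens before the important one, the important token itself, and one pooled vector for the tokens after it. Attention over the compressed sequence is then cheaper, while the token that matters most to the VIP-token is preserved exactly.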

The researchers focused on 4K to 128K token lengths for two reasons. First, computation for shorter sequences is not considered an efficiency bottleneck, since standard Transformers are already sufficiently fast at those lengths. Second, Vcc’s compression works better when there is more compressible information, so it is less effective on shorter sequences.

Vcc is designed to excel in tasks where a subset of tokens is disproportionately responsible for the model prediction. For the given VIP-tokens, it selectively locates the relevant information in the sequence, which improves performance in many cases. However, the method may not be as effective in settings where an embedding must serve multiple tasks concurrently without prior knowledge of the tasks.

In summary, Vcc is a VIP-token centric sequence compression method that reduces the complexity dependency on sequence length without sacrificing model accuracy. It can be directly incorporated into existing pretrained models with some additional training, and it is often much more efficient than baselines while offering competitive or better accuracy. Future work could extend the method to the decoder of encoder-decoder models to further boost Transformers’ efficiency while maintaining similar performance.