Breaking Barriers in AI-Driven Content Creation with Autoregressive Efficiency and User-Controlled Narratives

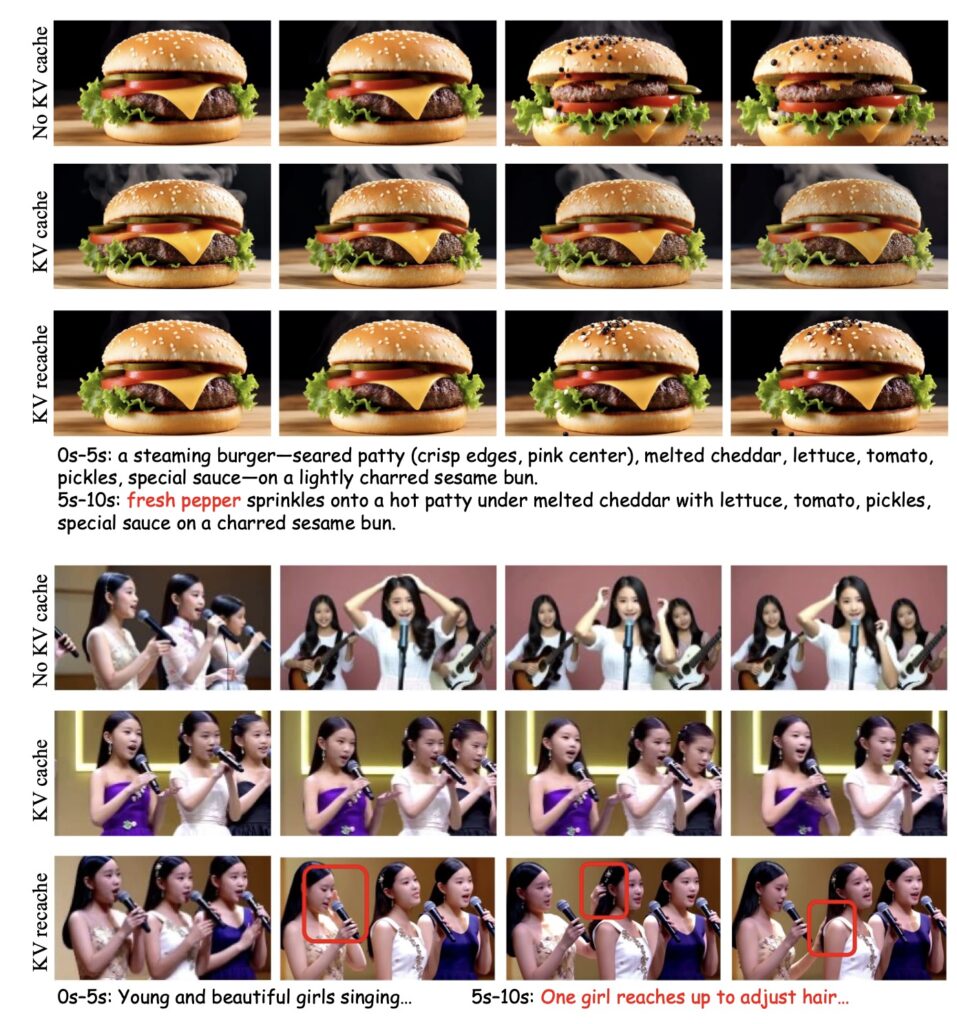

- Overcoming Key Challenges: LONGLIVE addresses the efficiency and quality hurdles of long video generation by combining a frame-level autoregressive framework, a KV-recache mechanism for seamless prompt switches, and streaming long tuning, enabling high-fidelity output even in interactive scenarios.

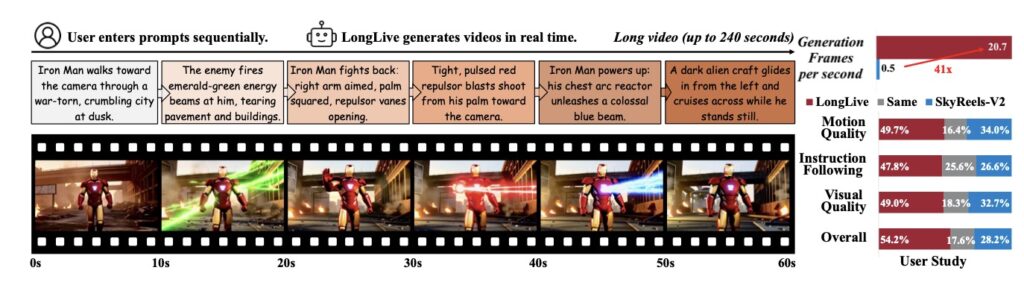

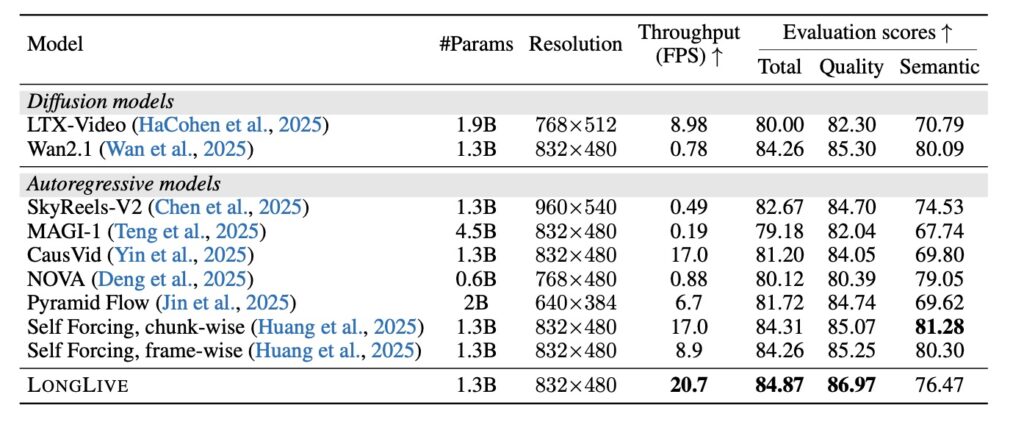

- Impressive Performance and Scalability: Fine-tuned from a 1.3B-parameter short-clip model in just 32 GPU-days, LONGLIVE runs inference at 20.7 FPS on a single NVIDIA H100 GPU, supports videos up to 240 seconds long, and maintains quality under INT8 quantization, which reduces memory usage.

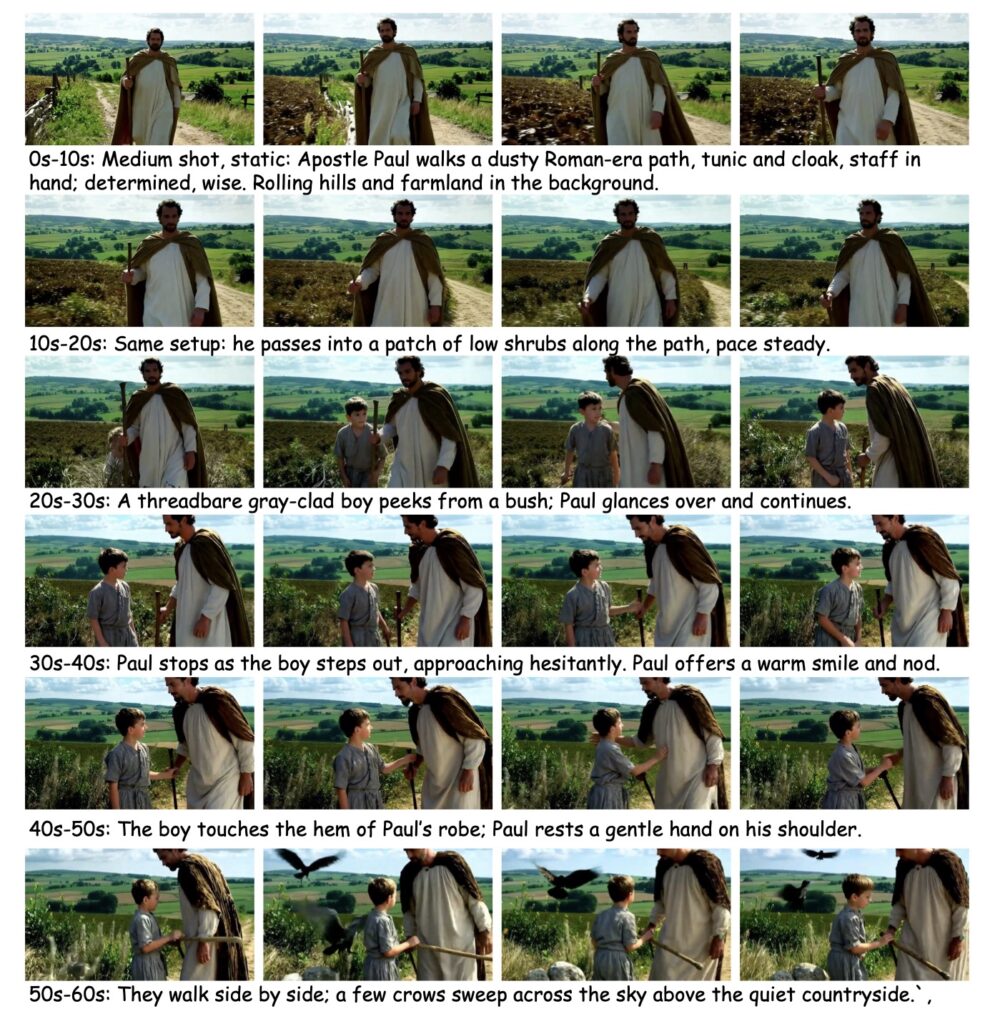

- Transforming Creative Applications: By enabling real-time user interaction through streaming prompts, LONGLIVE supports dynamic storytelling in creative, educational, and cinematic fields, making long-form video generation more adaptable and user-friendly.

In the rapidly evolving world of AI-generated content, creating long, coherent videos has been a holy grail for creators, educators, and filmmakers. Traditional methods such as diffusion and Diffusion-Forcing models produce stunningly high-quality videos but falter on speed: their bidirectional attention rules out the KV caching that causal models rely on, which makes real-time generation impractical. Enter LONGLIVE, a frame-level autoregressive (AR) framework designed specifically for real-time and interactive long video generation. Beyond pushing technical boundaries, it changes how we interact with AI in content creation, letting users guide narratives on the fly while the video stays visually consistent and generation stays efficient.

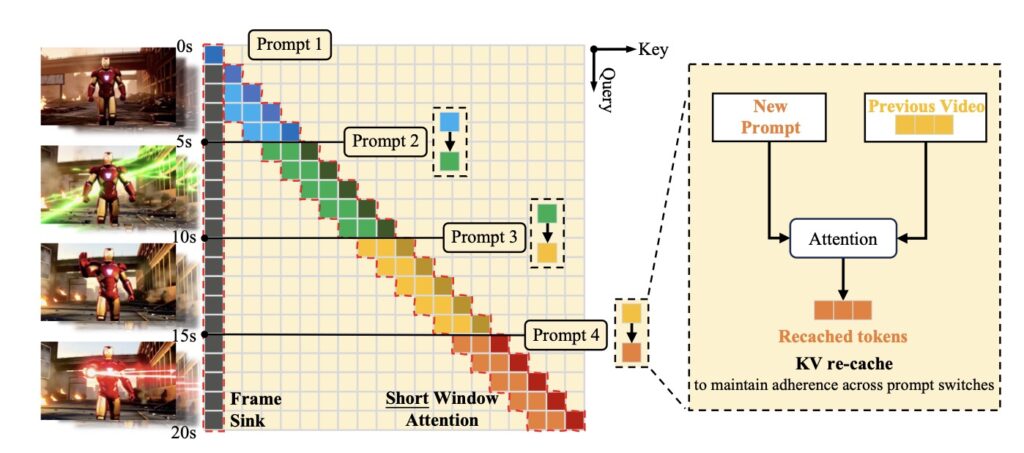

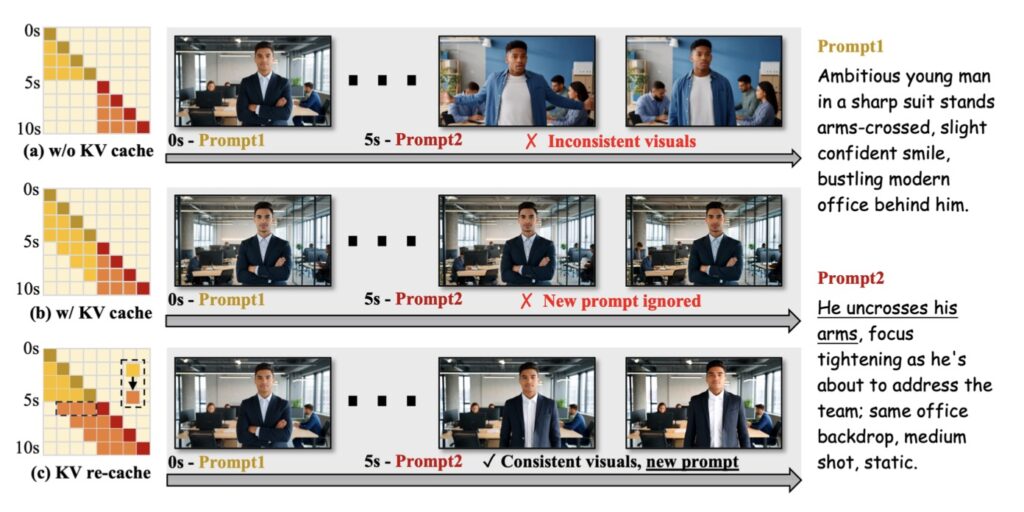

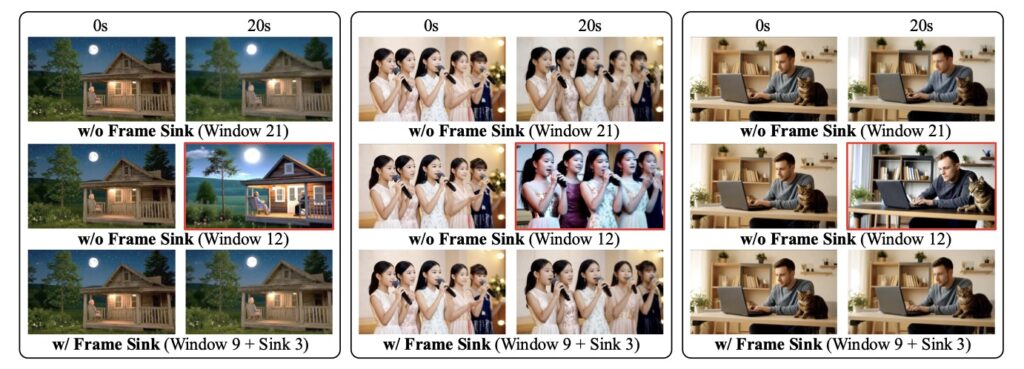

At its core, LONGLIVE tackles the dual challenges of efficiency and quality that have plagued long video generation. Causal-attention AR models support KV caching for faster inference, but their quality often degrades over long sequences because memory constraints make it hard to train them on long videos. LONGLIVE addresses this with three techniques: a KV-recache mechanism that refreshes cached states at prompt transitions, so switches stay smooth and the new prompt is followed; streaming long tuning, which aligns training and inference (train-long–test-long) so the model learns directly on minute-long videos; and short window attention paired with a frame-level attention sink (frame sink for short), which preserves long-range consistency while accelerating generation. Together, these let LONGLIVE turn a 1.3B-parameter short-clip model into a long-video generator with just 32 GPU-days of fine-tuning.
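To make the short-window-plus-frame-sink idea concrete, here is a minimal sketch of the attention pattern, assuming each frame is a single attention unit (the real model attends over tokens, and frames may be grouped into chunks). The function `frame_attention_mask` and its `window`/`sink` parameters are hypothetical names chosen for illustration, not LONGLIVE's API: each query frame sees its `window` most recent frames causally, plus the first `sink` frames, which remain visible for the whole video.

```python
from typing import List


def frame_attention_mask(num_frames: int, window: int, sink: int) -> List[List[bool]]:
    """Return mask[q][k] = True if query frame q may attend to key frame k.

    Illustrative sketch (hypothetical helper): causal short-window attention
    plus a frame sink, at frame granularity.
    """
    mask = []
    for q in range(num_frames):
        row = []
        for k in range(num_frames):
            causal = k <= q              # never attend to future frames
            in_window = q - k < window   # one of the `window` most recent frames (including q)
            is_sink = k < sink           # the first `sink` frames are always visible
            row.append(causal and (in_window or is_sink))
        mask.append(row)
    return mask


if __name__ == "__main__":
    mask = frame_attention_mask(num_frames=6, window=2, sink=1)
    for q, row in enumerate(mask):
        # "x" = attended, "." = masked out
        print(q, "".join("x" if m else "." for m in row))
```

Each row attends to at most `window + sink` frames, so per-frame attention cost and cache size stay bounded however long the video grows, while the sink frames keep an anchor to the opening of the video for global consistency.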

What sets LONGLIVE apart is its emphasis on interactivity, which is crucial for dynamic content creation. Static prompt-based generation, while useful, limits adaptability: users can’t easily adjust a video once it’s underway, and writing a detailed long-form prompt in one go is often overwhelming. LONGLIVE instead accepts streaming prompt inputs, so creators can introduce new elements, shift visual styles, or steer the narrative mid-generation. This turns video creation from a rigid process into a collaborative one, but it brings its own hurdles. Transitions must be smooth: even minor mismatches in motion, scene layout, or semantics between the segments before and after a prompt switch can shatter immersion. LONGLIVE’s KV-recache targets both visual smoothness and semantic adherence to the new prompt, keeping the video coherent and engaging across switches.
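The control flow of KV-recache at a prompt switch can be sketched with a toy streaming generator. Everything here is hypothetical: `encode_kv` stands in for the transformer forward pass that produces a frame's cached keys and values, `ToyStreamingGenerator` is an illustrative class, and frames are strings rather than latents. The sketch keeps the already-generated frames but recomputes their cached states under the new prompt; for simplicity it rebuilds the whole cache, whereas a practical version would presumably only need to recompute the frames the model can still attend to.

```python
from dataclasses import dataclass, field
from typing import List, Tuple

KV = Tuple[str, str]  # toy (frame, prompt) pair standing in for real key/value tensors


def encode_kv(frame: str, prompt: str) -> KV:
    """Hypothetical stand-in for the forward pass that caches one frame's keys/values."""
    return (frame, prompt)


@dataclass
class ToyStreamingGenerator:
    prompt: str
    frames: List[str] = field(default_factory=list)
    kv_cache: List[KV] = field(default_factory=list)

    def generate_frame(self) -> str:
        # Hypothetical denoiser: a real model would attend to self.kv_cache here.
        frame = f"frame{len(self.frames)}<{self.prompt}>"
        self.frames.append(frame)
        self.kv_cache.append(encode_kv(frame, self.prompt))
        return frame

    def switch_prompt(self, new_prompt: str, recache: bool = True) -> None:
        self.prompt = new_prompt
        if recache:
            # KV-recache: keep the generated frames (visual continuity) but
            # rebuild their cached states conditioned on the new prompt.
            self.kv_cache = [encode_kv(f, new_prompt) for f in self.frames]
        # Without recache, the cached states still encode the old prompt.


if __name__ == "__main__":
    gen = ToyStreamingGenerator(prompt="a cat walks on a beach")
    for _ in range(3):
        gen.generate_frame()
    gen.switch_prompt("the cat starts to run")
    print([p for _, p in gen.kv_cache])
```

Compared with the two obvious alternatives, discarding the cache (which loses continuity with the frames already shown) or keeping it unchanged (which leaves the old prompt baked into the context), recaching aims to preserve both the visual flow and adherence to the new instruction.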

From an efficiency standpoint, the demands of long videos are staggering. Generating a 180-second clip with a model like Wan-2.1 requires processing over a million tokens, which is computationally intensive and memory-hungry, and in interactive settings any delay degrades the user experience. LONGLIVE reaches 20.7 frames per second (FPS) of inference on a single NVIDIA H100 GPU and supports videos up to 240 seconds long, without sacrificing fidelity or temporal coherence. It also performs strongly on VBench for both short and long videos. For broader accessibility, LONGLIVE supports INT8-quantized inference, which shrinks the model from 2.7 GB to 1.4 GB with only marginal quality loss, making it practical on a wider range of hardware.
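The "over a million tokens" figure can be checked with back-of-the-envelope arithmetic. The sketch below assumes Wan-2.1-style settings that the article does not state: 832×480 output at 16 fps, a causal video VAE that compresses 4× in time and 8×8 in space, and 2×2 spatial patchification. It also estimates raw weight storage for a 1.3B-parameter model at 2 bytes (BF16) versus 1 byte (INT8) per parameter. Both helper names are hypothetical.

```python
def video_tokens(seconds: int, fps: int = 16, height: int = 480, width: int = 832,
                 t_stride: int = 4, s_stride: int = 8, patch: int = 2):
    """Rough token count for a video under assumed Wan-2.1-style settings."""
    pixel_frames = seconds * fps
    # Assumed causal video VAE: first frame kept, then every t_stride frames -> one latent frame.
    latent_frames = 1 + (pixel_frames - 1) // t_stride
    # Spatial downsampling by the VAE, then patchify into patch x patch tokens.
    tokens_per_frame = (height // (s_stride * patch)) * (width // (s_stride * patch))
    return latent_frames, tokens_per_frame, latent_frames * tokens_per_frame


def weight_gb(num_params: float, bytes_per_param: int) -> float:
    """Raw weight storage in gigabytes (1 GB = 1e9 bytes), ignoring any overhead."""
    return num_params * bytes_per_param / 1e9


if __name__ == "__main__":
    print(video_tokens(180))    # (720, 1560, 1123200)
    print(weight_gb(1.3e9, 2))  # BF16 weights
    print(weight_gb(1.3e9, 1))  # INT8 weights
```

Under these assumptions a 180-second clip comes to 720 latent frames of 1,560 tokens each, about 1.12 million tokens, consistent with the figure above. The weight estimate gives 2.6 GB versus 1.3 GB, in the same range as the reported 2.7 GB and 1.4 GB; the small gap presumably reflects components or overhead not counted here. At the assumed 16 fps playback rate, 20.7 FPS generation would also run slightly faster than real time.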

The broader implications of LONGLIVE extend beyond technical feats into creative, educational, and cinematic applications. Compared with short clips, long videos enable richer storytelling, more complex temporal dynamics, and deeper scene development, fostering more immersive experiences. By adding interactivity, LONGLIVE makes this process controllable and adaptive. Imagine educators adjusting lesson visuals based on student feedback, filmmakers prototyping scenes in real time, or artists experimenting with evolving narratives. This shift from static to interactive generation democratizes high-quality video production, empowering users who might struggle with exhaustive upfront planning.

Experimental results underscore LONGLIVE’s robustness, with qualitative showcases and detailed analyses (available in the appendices) highlighting its edge over predecessors. Tuning on long videos isn’t just about quality; it is also a prerequisite for efficient inference techniques such as short window attention with a frame sink, which substantially boost speed. As AI continues to blur the lines between human creativity and machine assistance, frameworks like LONGLIVE pave the way for long-form video generation that is not only possible but intuitive, efficient, and interactive. Whether you’re a content creator dreaming up epic tales or an educator crafting engaging materials, LONGLIVE brings real-time, user-driven video generation to your fingertips.