Inside the budget-friendly, open-source breakthrough rewriting the AI race—and what it means for U.S. tech supremacy.

- Deepseek’s Game-Changer: A mysterious Chinese AI lab called Deepseek shocked the world by building a model that rivals American giants, using just a fraction of the budget and weaker hardware.

- Open-Source Upsets the Status Quo: Deepseek’s breakthrough highlights a new dynamic where cost-efficient, open-source innovation can catch—or even surpass—closed-source models that require billions in funding.

- The Race Moves to Reasoning: As large language models become commoditized, top players like OpenAI are pivoting toward advanced “reasoning” capabilities, while China’s newest contenders are quickly following suit.

A Surprising Challenger from the East

At the dawn of 2024, industry insiders believed that the United States still held a comfortable lead in artificial intelligence. Then came a new model from a little-known Chinese lab called Deepseek, which blasted into view with the force of a technological shockwave.

Observers were amazed:

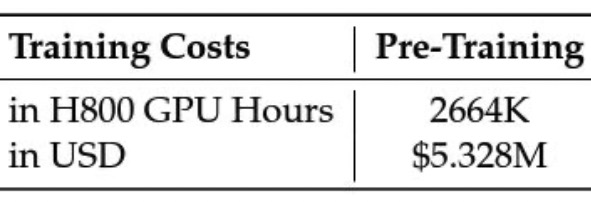

• Minimal Funding: Deepseek claimed it had spent a mere $5.6 million on its latest model, a microscopic sum compared to OpenAI’s estimated $5 billion annual spend or Google’s $50+ billion projected capital expenditures in 2024.

• Ultra-Fast Development: The Chinese lab reportedly needed only two months of training time to reach performance levels that took Google, Microsoft, Meta, and OpenAI years to develop.

• Open-Source Approach: Unlike the proprietary giants, Deepseek opened its model to the developer community, enabling free modification and customization.

In less than 60 days, Deepseek v3 had apparently raced past leading American models in a host of demanding benchmarks—from coding competitions and bug-spotting to a specialized AI math evaluation. This achievement rattled Silicon Valley’s assumptions about Chinese AI, highlighting that necessity truly can be the mother of invention.

The Budget vs. Performance Mystery

One of the most jarring revelations about Deepseek was how it worked around U.S. semiconductor export controls. The United States government, hoping to limit China’s AI progression, had barred the most advanced chips from sale to Chinese labs. Deepseek, however, did not rely on top-tier GPUs like NVIDIA’s H100. Instead, it trained its model on more modest H800 chips, which are less performant by design.

Yet the results spoke for themselves. The new Deepseek model matched or even outdid top-tier American models in recognized evaluations such as:

• Math and Science Benchmarks

• Code Generation and Bug Fixes

• General Knowledge and Reasoning Tasks

This display of hardware workarounds and low-budget mastery has turned conventional wisdom on its head: bigger budgets do not necessarily guarantee better models.

Inside the Mysterious Lab: Deepseek

Despite its shocking success, very little is publicly known about Deepseek. The lab is said to be a spinoff of a Chinese hedge fund called High-Flyer Quant, which manages around $8 billion in assets. Beyond that, only a sparse mission statement can be found on its developer site:

“Unravel the mystery of AGI with curiosity. Answer the essential question with long-termism.”

No detailed charter, no explicit AI safety or responsibility guidelines—nothing like the more transparent manifestos of OpenAI or Anthropic. Deepseek’s founder, Liang Wenfeng, is practically unknown in U.S. tech circles. Attempts by journalists to contact Deepseek have mostly been met with silence.

Speculation abounds on how the lab assembled its talented researchers, found the data to train the model, or even acquired the necessary (albeit lower-tier) GPUs. Some suspect that Deepseek scoured the open internet for the outputs of ChatGPT and other American models, incorporating them into its own training data.

However, that only tells half the story. Deepseek’s approach is also innovative, using cutting-edge techniques such as mixture-of-experts architectures and lower-precision floating-point training to squeeze every ounce of performance out of limited resources. This blend of resourcefulness and secrecy leaves the global AI community wanting to learn more—fast.
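To make the mixture-of-experts idea concrete, here is a minimal sketch of top-k routing in plain Python. It is a generic illustration, not Deepseek's actual architecture: the toy experts, the hard-coded `router_scores`, and the function names are all assumptions made for this example. In a real model the router scores come from a learned layer and each expert is a neural sub-network.

```python
import math

def softmax(scores):
    """Convert raw router scores into probabilities."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def route_top_k(router_scores, k):
    """Pick the k highest-scoring experts and renormalize their weights to sum to 1."""
    probs = softmax(router_scores)
    top = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:k]
    norm = sum(probs[i] for i in top)
    return [(i, probs[i] / norm) for i in top]

def moe_forward(x, experts, router_scores, k=2):
    """Run only the selected experts and blend their outputs by router weight."""
    routes = route_top_k(router_scores, k)
    return sum(weight * experts[i](x) for i, weight in routes)

# Hypothetical toy experts: each one just scales its input.
experts = [lambda x, s=s: s * x for s in (1.0, 2.0, 3.0, 4.0)]

# Hypothetical router scores for one token (normally produced by a learned layer).
router_scores = [0.1, 2.0, 0.3, 1.5]

routes = route_top_k(router_scores, k=2)
output = moe_forward(5.0, experts, router_scores, k=2)
print(routes, output)
```

The efficiency argument is visible in `moe_forward`: only two of the four experts run for this token, so compute per token stays well below what the total parameter count would suggest.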

America’s Reaction

Early in 2024, former Google CEO Eric Schmidt predicted that China lagged the U.S. by two to three years in AI. Only months later, Schmidt had changed his tune, noting that the Deepseek phenomenon showed China had “caught up in the last six months in a way that is remarkable.”

Shifting Tech Tides

• OpenAI: Spends billions each year, with Microsoft alone investing over $13 billion to support its efforts. Once considered unassailable.

• Google: Its upcoming “Gemini” model had many believing it would threaten OpenAI’s leadership, but the Deepseek news suggests unexpected challengers may be even more disruptive.

• Meta: Hugely influential in open-source AI with its Llama family of models but now facing a competitor halfway around the globe that is snapping at its heels.

• Anthropic: Known for its Claude family of models (including Claude Sonnet), heavily funded, but also overshadowed by this new open-source upstart.

Faced with such developments, American tech leaders have begun to question the notion of a permanent “AI moat.” It used to be that only companies with access to billions in capital, proprietary data centers, and specialized chips could produce cutting-edge large language models (LLMs). Deepseek changed all that, showing that you can leap to the frontier with just a few million dollars, good engineering, and a creative approach.

The Great AI Debate: Open Source vs. Closed Source

Deepseek’s decision to make its model open-source may have the greatest long-term impact of all. In the AI community, open-source software often wins out over time because it encourages developers to build on and improve a common foundation.

Yet the scenario poses uncomfortable questions about trust and values:

• Chinese Censorship: By law, models in China must adhere to “core socialist values,” raising concerns that a popular open-source model could whitewash historical events such as Tiananmen Square, or omit other politically sensitive topics.

• U.S. vs. China: As open-source models become more capable, the concern is that the American developer community may increasingly rely on Chinese code and frameworks, ceding “mindshare” and influence to labs like Deepseek.

• Licensing Risks: Even if a model starts out open-source, that license can be changed or revoked, leaving some uncertain about building businesses on top of a foreign foundation.

Still, open-source’s appeal—particularly if it matches or surpasses closed-source performance—can be unstoppable. Deepseek v3’s cost advantages (reportedly down to 10 cents per million tokens in inference, compared to $4.40 for OpenAI’s GPT-4) mean that countless startups and dev teams could adopt it.
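As a rough illustration of what that price gap means for a development team, the sketch below applies the two per-million-token prices quoted above to a hypothetical workload. The monthly token volume and the function name are assumptions chosen for the example, and real pricing varies by model version and by input versus output tokens.

```python
def inference_cost_usd(tokens, price_per_million_usd):
    """Cost of processing `tokens` tokens at a flat per-million-token price."""
    return tokens / 1_000_000 * price_per_million_usd

# Prices as reported in the article (USD per million tokens).
DEEPSEEK_PRICE = 0.10
GPT4_PRICE = 4.40

# Hypothetical workload: 2 billion tokens per month.
monthly_tokens = 2_000_000_000

deepseek_cost = inference_cost_usd(monthly_tokens, DEEPSEEK_PRICE)
gpt4_cost = inference_cost_usd(monthly_tokens, GPT4_PRICE)
price_ratio = GPT4_PRICE / DEEPSEEK_PRICE

print(f"Deepseek v3: ${deepseek_cost:,.2f} per month")
print(f"GPT-4:       ${gpt4_cost:,.2f} per month")
print(f"Price ratio: about {price_ratio:.0f}x")
```

At these quoted prices the gap is about 44x regardless of volume, which is why the difference matters most to teams running high-volume workloads.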

The Impact on U.S. AI Leadership

The U.S. government’s restrictions on advanced chips were designed to slow China’s AI progress. Ironically, they may have nudged Chinese labs to discover more cost-efficient alternatives. As one industry insider puts it:

“Necessity is the mother of invention. Because they had to go figure out workarounds, they built something more efficient.”

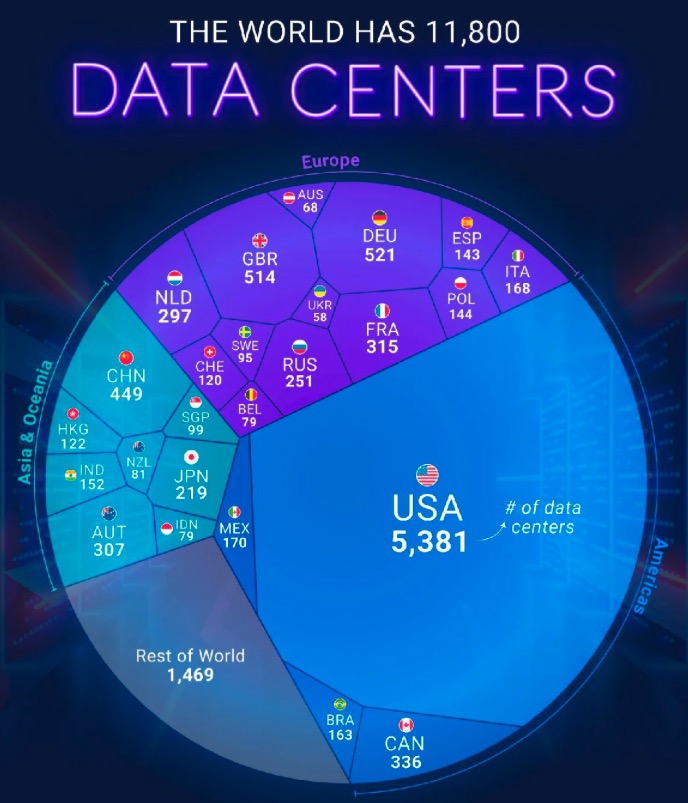

If developers worldwide begin switching to Deepseek’s open model for day-to-day tasks, the “platform” advantage may shift from the West to the East. Key American leaders who once championed restricting China’s access to top technology—hoping to protect U.S. AI leadership—are now acknowledging that China found a path regardless.

The Bigger Picture: “Democratic AI” vs. “Autocratic AI”

Experts warn that if Chinese open-source models dominate, they might eventually embed a China-centric worldview into everyday consumer applications. However, multiple rival open-source efforts (such as Meta’s Llama) could sustain enough competition to keep any single government’s values from monopolizing global discourse.

A Matter of Trust and Censorship

Large language models mirror the data and biases within their training sets. For American users, the thought of relying on a Chinese model triggers concerns about potential censorship. Studies show that models from Alibaba and Tencent systematically filter or deny politically sensitive topics.

If labs like Deepseek eventually release widely adopted models, developers might inadvertently incorporate subtle censorship frameworks, especially if they rely on “pre-trained weights” from a Chinese model. AI safety experts worry about hidden guardrails that could shape global discussions, especially if the model becomes a default solution for enterprise and consumer apps alike.

The Future: Reasoning, Efficiency, and Commoditization

As if open-source efficiency were not enough of a challenge, a new frontier in AI is emerging: reasoning. Traditional large language models often function as “autocomplete” engines, predicting tokens based on patterns in huge datasets. However, advanced reasoning capabilities aim to do more than just predict. They “think” step-by-step, interpret complex problem sets, and even gather additional data to refine their answers.

• OpenAI’s Next Move: CEO Sam Altman has hinted that future breakthroughs rely on “o1” reasoning models that effectively chain together multiple steps of logic.

• New Labs Enter: 01.AI (Zero One), founded by famed researcher Kai-Fu Lee, is exploring cost-effective ways to incorporate reasoning, as is Deepseek (with its rumored “R1”).

• Commoditization Looms: Just as large language model pre-training is getting cheaper, specialized “reasoning” steps may soon follow that same path. Yesterday’s moat could become tomorrow’s commodity.

A Conversation with Perplexity’s CEO

To get a clearer picture of these shifting currents, CNBC’s Deirdre Bosa interviewed Aravind Srinivas, co-founder and CEO of Perplexity, a neutral AI platform that integrates various models, from GPT-4 to the new Chinese contenders.

Key Insights from Their Discussion

China’s Creative Workarounds

• In Aravind’s view, Deepseek had no choice but to innovate on a small budget with weaker GPUs. That environment forced them to adopt advanced mixture-of-experts architectures, 8-bit floating-point (FP8) training, and other engineering feats that surprised Western engineers.
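To give a feel for what 8-bit floating-point precision trades away, here is a simplified sketch that rounds ordinary Python floats to values with a 3-bit stored mantissa, in the style of the common E4M3 FP8 layout. It is an illustration only, not Deepseek's training code: the function name and sample weights are hypothetical, and the sketch ignores subnormals, NaN handling, and the scaling factors that real FP8 training relies on.

```python
import math

E4M3_MAX = 448.0  # largest finite value in the common E4M3 FP8 layout

def quantize_fp8_e4m3(x):
    """Round x to a nearby value with 4 significant bits (1 implicit + 3 stored).

    Simplified: no subnormals, no NaN handling, values beyond the range are clamped.
    """
    if x == 0.0:
        return 0.0
    # frexp gives x = mantissa * 2**exponent with 0.5 <= |mantissa| < 1.
    mantissa, exponent = math.frexp(x)
    mantissa_q = round(mantissa * 16) / 16  # keep 4 significant bits
    value = math.ldexp(mantissa_q, exponent)
    return max(-E4M3_MAX, min(E4M3_MAX, value))

# Hypothetical sample weights.
weights = [0.3, -1.7, 0.052, 3.14159]
quantized = [quantize_fp8_e4m3(w) for w in weights]
max_rel_error = max(abs(q - w) / abs(w) for w, q in zip(weights, quantized))

print(quantized)
print(f"largest relative rounding error: {max_rel_error:.2%}")
```

Each in-range value lands within about 6% of the original, which is a coarse approximation but takes a quarter of the memory and bandwidth of 32-bit floats. That trade is why lower-precision training appeals to a lab working with limited hardware.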

Open-Source Edge

• Aravind emphasized that once open-source catches up to the best of closed-source, developers naturally gravitate toward the more accessible option. Using Deepseek’s open model within Perplexity is already in the works.

Trusting China’s Tech

• While you can host open-source models yourself (ensuring you’re not directly dependent on foreign servers), relying on a foreign codebase poses geopolitical and ethical dilemmas. Licensing can change, or subtle censorship might emerge.

The Commoditization of LLMs

• For Aravind, the rise of powerful open-source LLMs means that it no longer makes sense for startups to burn billions trying to catch up with GPT-4. The focus is shifting to productization: using these commoditized models to build user-friendly tools and businesses.

Advertising in AI Search

• Perplexity itself has begun experimenting with an advertising model, but Srinivas insists it must be done in a transparent way. Users should be able to ignore ads without losing any factual content or search objectivity.

Race to Reasoning

• Aravind believes the next big leap will be in “reasoning.” While OpenAI is spearheading efforts with its “o1” family of models, China’s labs and open-source communities are quickly closing in. Eventually, reasoners too will become commoditized.

A Turning Point in the Global AI Race

Deepseek’s meteoric rise from obscurity to frontier competitor has proven that the AI race is not locked up by Western juggernauts. A new era is unfolding—one where creativity and efficiency can trump vast capital expenditures, and where open-source models might unify global developers while also posing new risks of censorship and influence.

What happens next may depend on how quickly the rest of the world responds. The U.S. and Europe can attempt further restrictions on high-end chips, but as Deepseek’s success demonstrates, they may only force China into more ingenious alternatives. Meanwhile, American companies like Meta are racing to upgrade their own open-source offerings, hoping to maintain mindshare and forestall a future in which a Chinese model becomes the global default.

In the end, this is about far more than bragging rights or corporate valuations. It’s a historic pivot in technological innovation—with profound stakes for global power, economic influence, and the question of whose values will define the AI platforms of tomorrow.