15x Smaller, 500x Faster, Zero Effort—Vector Search Just Got a Supercharged Upgrade

- Instant vector search over millions of documents with no pre-indexing.

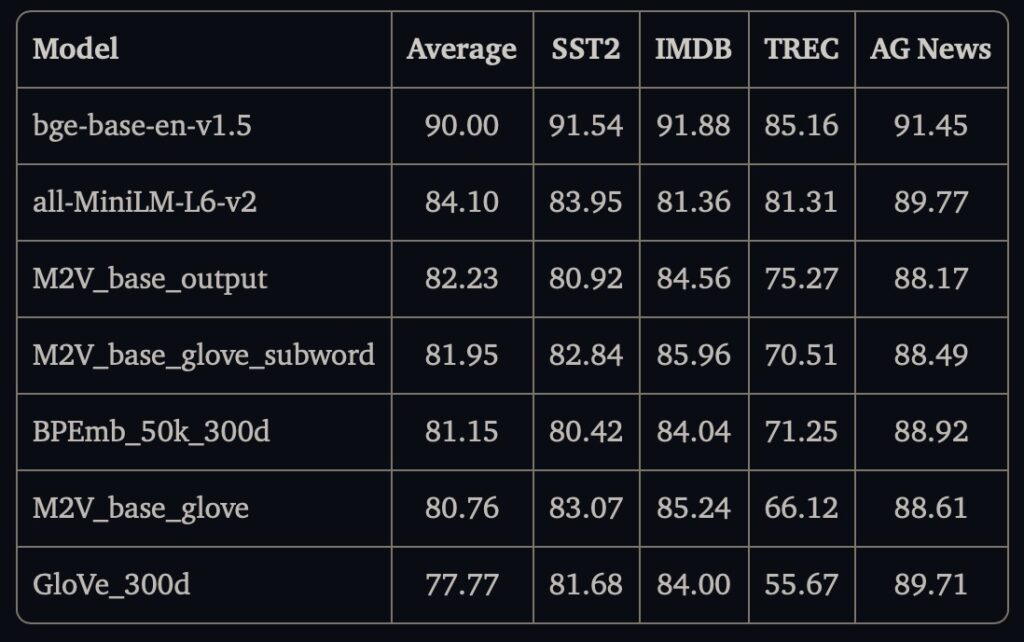

- Shrink models by 15x and make them up to 500x faster with a minimal drop in performance.

- Plug-and-play integration with LangChain, HuggingFace, and other AI frameworks—no coding wizardry needed.

Imagine typing a question into a search bar and getting an answer before you finish blinking. Or sifting through millions of legal documents in seconds to find a single clause. This isn’t science fiction—it’s the promise of Model2Vec, a breakthrough in AI that’s making technology faster, greener, and accessible to everyone.

The Problem: Why AI Feels Slow (and Expensive)

Modern AI tools like ChatGPT or Google Search rely on massive language models that consume enormous computational power. Think of these models as gourmet chefs: they craft perfect answers by analyzing every word in context, but they cook each response from scratch. This makes them slow, energy-hungry, and impractical for real-time tasks—like finding a needle in a digital haystack.

Enter Model2Vec, a game-changing technique that trades “gourmet” for “fast food” without sacrificing quality.

Static Embeddings: The Fast Food of AI

At its core, Model2Vec uses static embeddings—a way to pre-process words into fixed numerical codes (vectors) that machines understand. Unlike today’s AI models, which recalculate meanings on the fly, static embeddings are like pre-chopped ingredients:

- Instant Results: No waiting for complex calculations.

- Tiny Footprint: Models are 15x smaller (think smartphone app, not supercomputer).

- Zero Energy Waste: Runs efficiently on everyday devices, even a decade-old laptop.
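To make the "pre-chopped ingredients" idea concrete, here is a minimal sketch of how a static embedding lookup could work. The tiny table, its values, and the whitespace tokenizer are made up for illustration and are not taken from any real model.

```python
# Toy lookup table: each token maps to one fixed vector (values are made up).
EMBEDDINGS = {
    "machine": [0.9, 0.1, 0.0],
    "learning": [0.7, 0.3, 0.0],
    "pizza": [0.0, 0.2, 0.8],
}
UNKNOWN = [0.0, 0.0, 0.0]  # fallback for tokens missing from the table


def embed(text):
    """Embed text by looking up each token and averaging the vectors."""
    tokens = text.lower().split()  # hypothetical whitespace tokenizer
    if not tokens:
        return list(UNKNOWN)
    vectors = [EMBEDDINGS.get(tok, UNKNOWN) for tok in tokens]
    dim = len(UNKNOWN)
    return [sum(v[i] for v in vectors) / len(vectors) for i in range(dim)]


sentence_vector = embed("machine learning")
```

Nothing here runs a neural network at query time: the work is a dictionary lookup and an average, which is why this kind of model can stay small and fast.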

But static embeddings aren’t new. What makes Model2Vec special?

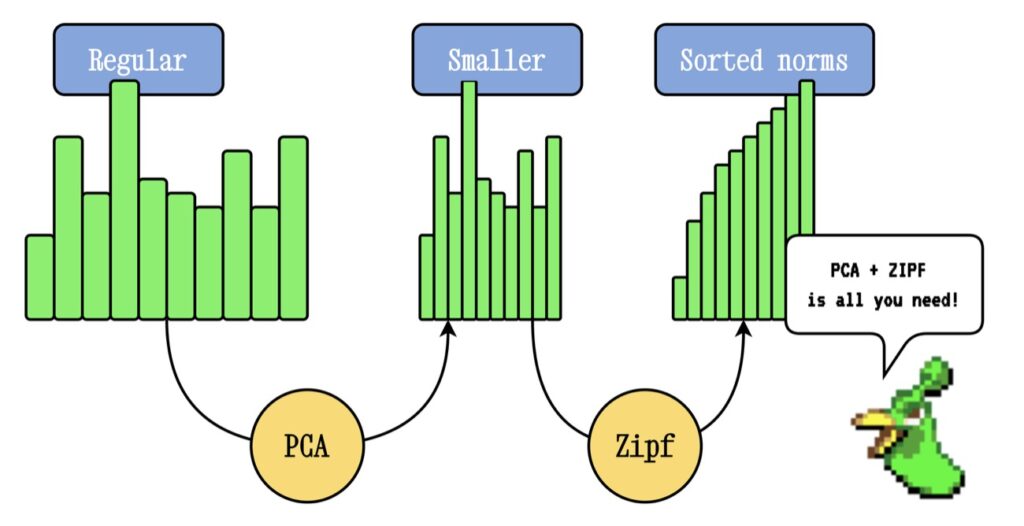

Model2Vec’s Magic: Three Simple Tricks

- Pre-Cooked Ingredients: Model2Vec pre-computes a fixed code for every token in a model’s vocabulary (e.g., the words in “machine learning” each map to a pre-defined code). When you search, it simply looks up and averages these codes, with no heavy lifting required.

- Smarter Compression: Using a math trick called PCA (principal component analysis), it shrinks these codes into compact versions, stripping noise while keeping the essence. Surprisingly, this often improves accuracy.

- Ignoring the Noise: Common words like “the” or “and” are downplayed automatically, thanks to Zipf’s Law, a linguistic rule that predicts how often words appear. Rare terms get priority, making searches sharper.
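The compression and weighting tricks above can be sketched in plain Python. This is an illustration under stated assumptions, not the library's actual code: the PCA uses simple power iteration, the vocabulary is assumed to be sorted from most to least frequent, and the `log(1 + rank)` weight is one Zipf-inspired choice that may differ from what Model2Vec really applies. The toy vectors are made up.

```python
import math
import random


def zipf_weights(vocab_size):
    """Weight tokens by frequency rank (rank 1 = most common).

    Assumption: log(1 + rank), so frequent tokens get small weights.
    """
    return [math.log(1 + rank) for rank in range(1, vocab_size + 1)]


def top_component(rows, iterations=100, seed=0):
    """Find the direction of greatest variance via power iteration."""
    dim = len(rows[0])
    rng = random.Random(seed)
    direction = [rng.gauss(0.0, 1.0) for _ in range(dim)]
    for _ in range(iterations):
        # Multiply by X^T X without building the matrix: X^T (X d).
        scores = [sum(r[i] * direction[i] for i in range(dim)) for r in rows]
        updated = [sum(s * r[i] for s, r in zip(scores, rows)) for i in range(dim)]
        norm = math.sqrt(sum(x * x for x in updated))
        if norm == 0.0:
            break
        direction = [x / norm for x in updated]
    return direction


def pca(rows, k):
    """Project rows onto their top-k principal components."""
    n, dim = len(rows), len(rows[0])
    means = [sum(r[i] for r in rows) / n for i in range(dim)]
    residual = [[r[i] - means[i] for i in range(dim)] for r in rows]
    projected = [[] for _ in rows]
    for _ in range(k):
        d = top_component(residual)
        scores = [sum(r[i] * d[i] for i in range(dim)) for r in residual]
        for out, s in zip(projected, scores):
            out.append(s)
        # Deflate: remove the found component before looking for the next one.
        residual = [[r[i] - s * d[i] for i in range(dim)]
                    for r, s in zip(residual, scores)]
    return projected


# Made-up 3-dimensional vectors for four tokens, most frequent first.
token_vectors = [
    [2.0, 0.0, 1.0],   # "the"
    [4.0, 0.1, 1.0],   # "and"
    [6.0, -0.1, 1.0],  # "contract"
    [8.0, 0.0, 1.0],   # "indemnity"
]

reduced = pca(token_vectors, k=1)            # 3 dimensions -> 1
weights = zipf_weights(len(token_vectors))   # rarer tokens get larger weights
weighted = [[w * x for x in row] for w, row in zip(weights, reduced)]
```

In practice you would reach for a numerical library rather than hand-rolled loops, but the shape of the pipeline is the same: reduce the dimensions, then scale each token's vector by how rare it is.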

Why This Matters to You

- Healthcare: Diagnose rare diseases faster by scanning medical records in real time.

- Retail: Instantly recommend products from a catalog of millions.

- Everyday Apps: Voice assistants that respond without lag, even offline.

A law firm, for example, could use Model2Vec to search 10 million contracts in seconds for a specific clause. A farmer in a remote area could analyze crop data on a smartphone. The possibilities are endless.
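A search like the contract example comes down to comparing one query vector against every document vector. The sketch below shows that brute-force comparison with cosine similarity and no index at all. The document IDs and vectors are hypothetical; in a real system they would come from the embedding model.

```python
import math


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def search(query_vec, doc_vecs, top_k=2):
    """Return (doc_id, score) pairs for the top_k most similar documents."""
    scored = [(doc_id, cosine(query_vec, vec)) for doc_id, vec in doc_vecs.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:top_k]


# Hypothetical document vectors (made-up values).
DOCS = {
    "contract_a": [0.9, 0.1, 0.0],
    "contract_b": [0.1, 0.9, 0.0],
    "contract_c": [0.7, 0.3, 0.1],
}

results = search([1.0, 0.0, 0.0], DOCS)
```

Because embedding each document is so cheap, scanning every vector this way can stay practical for collections where a heavier model would need a pre-built index.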

When to Stick With Traditional AI

Model2Vec isn’t perfect. Tasks requiring nuance, like detecting sarcasm (“Sure, another meeting!”) or wordplay, still need bulkier models, because a static embedding gives a word the same code regardless of its context. But for many practical uses (search, recommendations, basic chatbots), it’s a revelation.

The Bigger Picture: AI for Everyone

Model2Vec isn’t just about speed; it’s about democratization. By slashing costs and hardware needs, it puts powerful AI tools within reach of startups, schools, and nonprofits. It’s also greener: if a query runs up to 500x faster on the same hardware, the energy spent per query drops sharply, which could reduce tech’s carbon footprint.

Try It Yourself (No PhD Needed)

Pre-trained Model2Vec models are free on HuggingFace, a popular AI platform. With a few lines of code, anyone can integrate it into apps:

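```python
from model2vec import StaticModel  # pip install model2vec

# "minishlab/potion-base-8M" is one published model; any Model2Vec model ID works here.
model = StaticModel.from_pretrained("minishlab/potion-base-8M")

embeddings = model.encode(["Hello, world!"])  # one vector per input string
```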

Or use it seamlessly with tools like LangChain to supercharge chatbots.

Fast, Frugal, and Sustainable

As AI grows, so does its appetite for resources. Model2Vec offers a blueprint for a leaner future—where technology serves us instantly, without draining the planet. It’s not just a better algorithm; it’s a step toward AI that works for everyone, everywhere.