Unveiled at CES 2026, the new open-source ecosystem targets the “long tail” of driving hazards with chain-of-thought AI.

- Human-Like Reasoning: Unlike traditional systems that follow rigid rules, Alpamayo uses “chain-of-thought” processing to analyze complex, unpredictable road scenarios step-by-step.

- The Open Ecosystem: The launch includes the Alpamayo 1 model, the AlpaSim simulation framework, and massive open datasets, allowing developers to train and validate systems at scale.

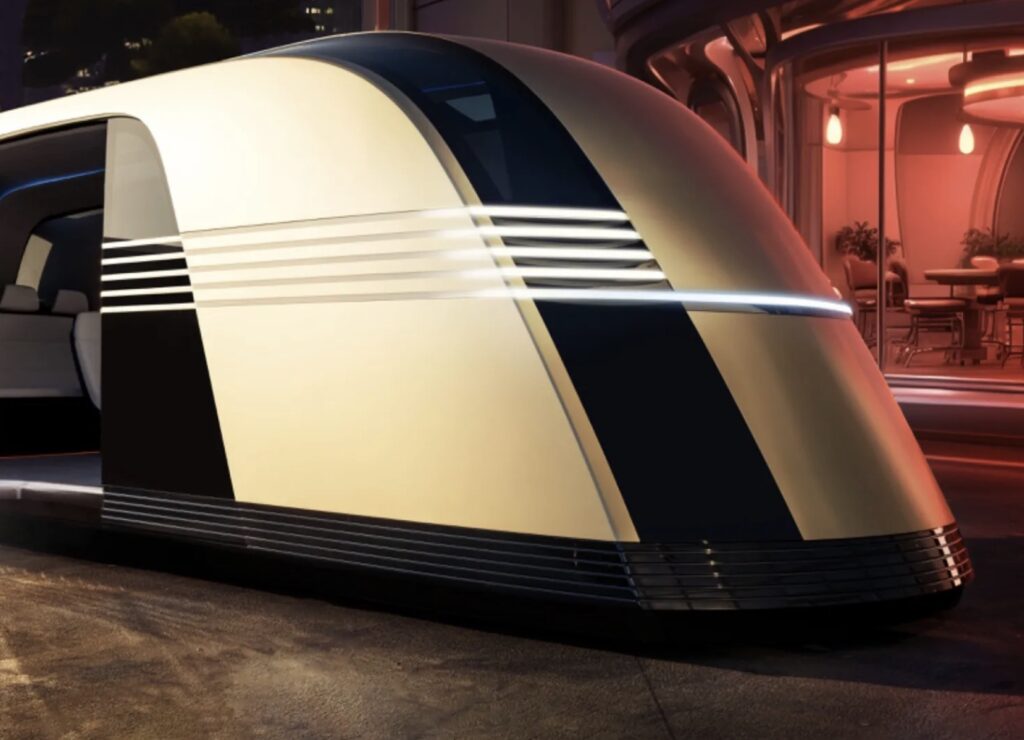

- Industry-Wide Adoption: Major players like Uber, Lucid, and Jaguar Land Rover are leveraging these tools to accelerate the deployment of safe Level 4 autonomous driving.

At CES 2026, Nvidia signaled a massive shift in the automotive industry by unveiling Alpamayo, a family of open AI models designed to solve the hardest problem in self-driving technology: how to make a car “think” rather than just react.

Nvidia CEO Jensen Huang described this development as the “ChatGPT moment for physical AI.” Just as large language models learned to understand text, Alpamayo is designed to understand the physical world. By introducing human-like reasoning to autonomous vehicles (AVs), Nvidia aims to move the industry past the stalling point of current technology and toward true Level 4 autonomy, where vehicles operate without human supervision within a defined set of operating conditions.

Beyond Rules: The Era of Physical AI

For years, autonomous vehicles have relied on a separation of “perception” (seeing a car) and “planning” (deciding to stop). While this works for standard driving, it often fails during the “long tail” of driving scenarios—rare, complex, and unpredictable events like erratic cyclists, unclear construction zones, or sudden weather changes.

Alpamayo addresses this by using vision-language-action (VLA) models. Instead of mapping sensor data to a response through pre-written rules, Alpamayo evaluates the situation in context.

The system uses “chain-of-thought” reasoning. It processes video input to generate trajectories while simultaneously creating “reasoning traces.” Essentially, the AI explains its logic step by step, allowing developers to understand why the car made a specific decision. This “explainability” is crucial for building trust and safety in intelligent vehicles.
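To make the idea of a trajectory paired with a reasoning trace concrete, here is a minimal Python sketch. Everything in it is an assumption made for illustration: `DrivingDecision`, `toy_policy`, and the scene keys are hypothetical names, and the hand-written rules merely stand in for a learned model so that the shape of the output is visible. It is not Alpamayo's interface.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class DrivingDecision:
    """Hypothetical output record: a planned path plus the reasoning behind it."""
    reasoning_trace: List[str]
    trajectory: List[Tuple[float, float]]  # (x, y) waypoints in metres, ego frame


def toy_policy(scene: dict) -> DrivingDecision:
    """Hand-written stand-in for a VLA model (an assumption, NOT Alpamayo)."""
    trace = []
    speed = float(scene["ego_speed_mps"])
    if scene.get("cyclist_ahead"):
        trace.append("A cyclist is ahead in the ego lane.")
        if scene.get("cyclist_swerving"):
            trace.append("The cyclist is swerving, so their path is uncertain.")
            speed = min(speed, 3.0)
            trace.append(f"Slow to {speed:.1f} m/s and hold back.")
    else:
        trace.append("No hazard detected; keep the current speed.")
    # Straight-ahead waypoints at 1-second intervals over a 3-second horizon.
    trajectory = [(speed * t, 0.0) for t in range(1, 4)]
    return DrivingDecision(reasoning_trace=trace, trajectory=trajectory)


decision = toy_policy(
    {"ego_speed_mps": 10.0, "cyclist_ahead": True, "cyclist_swerving": True}
)
for step in decision.reasoning_trace:
    print(step)
print(decision.trajectory)
```

The point of the structure is that each planned path travels with a human-readable account of why it was chosen, which is what lets a developer audit a decision after the fact.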

The Alpamayo Ecosystem: A Three-Pillar Approach

Nvidia is not just releasing a model; it is releasing a development platform. Alpamayo is positioned as a “teacher model”—a massive system used to train smaller, more efficient models that will eventually run inside cars. The ecosystem consists of three distinct parts:

- Alpamayo 1: A 10-billion-parameter model available on Hugging Face. It connects visual data with decision-making, allowing vehicles to predict the behavior of pedestrians and other drivers.

- AlpaSim: A fully open-source, end-to-end simulation framework on GitHub. This tool allows developers to create realistic, closed-loop testing environments to validate safety before a physical car ever hits the road.

- Physical AI Open Datasets: To fuel these models, Nvidia released over 1,700 hours of real-world driving data collected across diverse geographies and conditions.
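What “closed-loop” means in the AlpaSim description can be shown with a toy example: the policy's action changes the simulated world, and the changed world is what the policy sees next. The sketch below is a one-dimensional stand-in written for this article. `brake_policy`, `run_closed_loop`, and all the numbers are hypothetical and do not come from AlpaSim.

```python
def brake_policy(gap_m: float, speed_mps: float) -> float:
    """Hypothetical policy: return an acceleration in m/s^2 (negative = braking)."""
    if gap_m >= 40.0:
        return 0.0  # obstacle still far away, keep cruising
    margin_m = 5.0  # distance we want left over after stopping
    usable_m = max(gap_m - margin_m, 0.1)
    required = speed_mps ** 2 / (2.0 * usable_m)  # from v^2 = 2 * a * d
    return -min(required, 8.0)  # cap at an assumed 8 m/s^2 braking limit


def run_closed_loop(policy, gap_m=60.0, speed_mps=15.0, dt=0.1, steps=200):
    """Step a toy world in which each action feeds back into the next observation."""
    min_gap = gap_m
    for _ in range(steps):
        accel = policy(gap_m, speed_mps)                 # policy observes the world
        speed_mps = max(speed_mps + accel * dt, 0.0)     # world reacts to the action
        gap_m -= speed_mps * dt                          # ego closes on a stopped obstacle
        min_gap = min(min_gap, gap_m)
        if gap_m <= 0.0:
            return {"collided": True, "min_gap_m": min_gap}
    return {"collided": False, "min_gap_m": min_gap}


print(run_closed_loop(brake_policy))
print(run_closed_loop(lambda gap_m, speed_mps: 0.0))  # a policy that never brakes
```

An open-loop test would replay recorded sensor data no matter what the policy did. Here a bad decision has consequences inside the simulation, which is why closed-loop runs are useful for validating safety before road testing.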

Solving the “Long Tail” Problem

The primary goal of Alpamayo is safety in edge cases. Traditional AV architectures struggle when they encounter a situation outside their training data. Because Alpamayo reasons about cause and effect, it is designed to handle scenarios it has not seen before.

“Handling long-tail and unpredictable driving scenarios is one of the defining challenges of autonomy,” said Sarfraz Maredia, global head of autonomous mobility at Uber. By enabling vehicles to interpret body language and anticipate intentions, Alpamayo helps prevent errors caused by rigid automation. The system is further underpinned by Nvidia Halos, a safety framework designed to ensure robustness.

Why Open Source Changes the Game

Perhaps the most significant aspect of the announcement is that Alpamayo is open source. By removing proprietary barriers, Nvidia is inviting the global research community and startups to collaborate.

This strategy has already attracted support from mobility leaders including Lucid, Jaguar Land Rover (JLR), and Uber, as well as research institutions like Berkeley DeepDrive.

“Open, transparent AI development is essential to advancing autonomous mobility responsibly,” noted Thomas Müller, executive director of product engineering at JLR. The open nature of the project allows the industry to share testing and validation standards, potentially speeding up the timeline for safe self-driving cars globally.

Alpamayo represents a fundamental change in how vehicles are built. Developers can now use these large-scale models to “distill” intelligence into their own AV stacks, fine-tuning them on proprietary fleet data using the Nvidia DRIVE Hyperion™ architecture.
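In its simplest form, distillation means fitting a small “student” model to the outputs of a larger “teacher.” The Python sketch below illustrates only that idea. `teacher`, `fit_student`, and the gap-to-speed mapping are hypothetical stand-ins, and the closed-form least-squares fit replaces the gradient-based training a real pipeline would use.

```python
from typing import List, Tuple


def teacher(gap_m: float) -> float:
    """Stand-in teacher: target speed (m/s) for a given gap to the car ahead."""
    return max(0.0, min(15.0, 0.5 * (gap_m - 5.0)))


def fit_student(samples: List[float]) -> Tuple[float, float]:
    """Fit a two-parameter student, speed = w * gap + b, to the teacher's outputs."""
    targets = [teacher(x) for x in samples]  # labels come from the teacher
    n = len(samples)
    mean_x = sum(samples) / n
    mean_y = sum(targets) / n
    cov_xy = sum((x - mean_x) * (y - mean_y) for x, y in zip(samples, targets))
    var_x = sum((x - mean_x) ** 2 for x in samples)
    w = cov_xy / var_x  # ordinary least-squares slope
    b = mean_y - w * mean_x
    return w, b


gaps = [float(g) for g in range(5, 40, 5)]  # 5 m to 35 m, where the teacher is linear
w, b = fit_student(gaps)
print(f"student: speed = {w:.2f} * gap + {b:.2f}")
```

The student never sees ground-truth labels, only the teacher's answers. In a real AV stack the teacher would be a large reasoning model and the student a network small enough to run in the vehicle.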