Jensen Huang kicks off CES 2026 with a six-chip platform designed to power the next generation of agentic AI and reasoning.

- Massive Leap in Power: NVIDIA bills the new Rubin platform as 5x more powerful than its predecessor, Blackwell, with up to a 10x reduction in inference costs.

- Designed for “Agentic” AI: A new “extreme codesign” philosophy integrates six specialized chips to handle complex reasoning and long-term memory tasks.

- Instant Industry Adoption: Microsoft, AWS, and CoreWeave are already lining up to integrate Rubin into next-generation “AI superfactories” starting in late 2026.

The race for artificial intelligence supremacy has just accelerated. At CES 2026, NVIDIA founder and CEO Jensen Huang unveiled the company’s next-generation computing architecture: Rubin.

Described by Huang as a “giant leap toward the next frontier of AI,” the Rubin platform is not merely a successor to the wildly successful Blackwell chips; it is a fundamental reimagining of how AI infrastructure functions. With the chips already in full production, NVIDIA is setting the stage for a year in which “agentic AI” (systems that can reason, plan, and act) moves from theory to mass deployment.

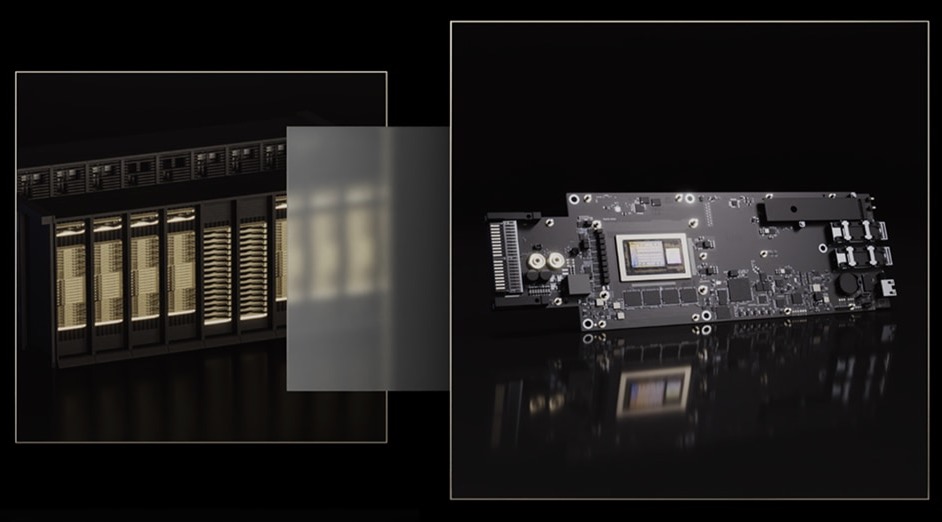

Extreme Codesign: The “Six-Chip” Strategy

The headline innovation of the Rubin platform is what NVIDIA calls “extreme codesign.” Rather than relying on a single powerful processor, the architecture orchestrates six distinct, high-performance components working in unison: the NVIDIA Vera CPU, Rubin GPU, NVLink 6 Switch, ConnectX-9 SuperNIC, BlueField-4 DPU, and the Spectrum-6 Ethernet Switch.

“Rubin arrives at exactly the right moment, as AI computing demand for both training and inference is going through the roof,” Huang told the audience.

This integrated approach yields staggering metrics. Compared to the previous Blackwell platform, Rubin needs one-quarter as many GPUs to train massive Mixture-of-Experts (MoE) models and cuts the cost of generating inference tokens by up to 10x.
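To make those ratios concrete, here is a back-of-the-envelope sketch in Python. Only the 4x and 10x ratios come from the announcement, and the 10x figure is an upper bound. The baseline numbers (a 4,000-GPU Blackwell cluster and $2.00 per million tokens) are hypothetical placeholders chosen for illustration, not NVIDIA figures.

```python
import math

# Ratios quoted in the announcement (the 10x figure is an "up to" claim).
GPU_REDUCTION = 4.0
MAX_COST_REDUCTION = 10.0


def rubin_estimate(blackwell_gpus, blackwell_cost_per_m_tokens):
    """Scale hypothetical Blackwell baselines by the announced ratios."""
    # Round up: a cluster cannot contain a fraction of a GPU.
    gpus = math.ceil(blackwell_gpus / GPU_REDUCTION)
    # Best case only, since the cost reduction is quoted as "up to" 10x.
    cost = blackwell_cost_per_m_tokens / MAX_COST_REDUCTION
    return gpus, cost


# Hypothetical baselines, not figures from NVIDIA.
gpus, cost = rubin_estimate(blackwell_gpus=4000, blackwell_cost_per_m_tokens=2.00)
print(f"GPUs for the same MoE training job: {gpus}")
print(f"Best-case cost per million tokens: ${cost:.2f}")
```

Under these assumed baselines, the same training job would need 1,000 GPUs instead of 4,000, and the best-case inference cost would fall from $2.00 to $0.20 per million tokens.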

The Engine of “Agentic” AI

While raw speed is essential, the Rubin architecture is specifically engineered to solve the bottlenecks of modern AI: memory and reasoning.

As AI models evolve from simple chatbots to complex “agents” that perform multi-step tasks, they require vast amounts of context memory. To address this, NVIDIA introduced the Inference Context Memory Storage Platform, powered by the BlueField-4 DPU. This allows for the efficient sharing and reuse of data, enabling models to possess “longer memory” and better reasoning capabilities without the massive latency usually associated with such tasks.

The new Vera CPU plays a critical role here. Built with 88 custom cores, it is designed specifically for agentic reasoning, providing the efficiency needed for large-scale AI factories.

“The efficiency gains in the NVIDIA Rubin platform represent the kind of infrastructure progress that enables longer memory, better reasoning, and more reliable outputs,” noted Dario Amodei, CEO of Anthropic.

Building the “AI Superfactory”

The industry’s response has been immediate and immense. The world’s largest cloud providers and AI labs—including OpenAI, Google, AWS, and Meta—have committed to the platform.

Microsoft is leading the charge with plans for its next-generation “Fairwater” AI superfactories. These facilities will feature NVIDIA Vera Rubin NVL72 rack-scale systems and are planned to scale to hundreds of thousands of superchips. “We will empower developers and organizations to create, reason, and scale in entirely new ways,” said Microsoft CEO Satya Nadella.

Similarly, CoreWeave is integrating Rubin into its specialized AI cloud, utilizing its “Mission Control” software to manage the new architecture alongside existing systems. CEO Mike Intrator emphasized that Rubin is vital for handling the “reasoning, agentic, and large-scale inference workloads” of the future.

A $4 Trillion Infrastructure Boom

The launch of Rubin comes amidst a historic spending spree. Jensen Huang estimates that between $3 trillion and $4 trillion will be invested in AI infrastructure over the next five years.

The Rubin platform appears designed to capture the bulk of this investment by addressing the physical limitations of data centers. The new Spectrum-X Ethernet Photonics switch systems, for example, deliver a 5x improvement in power efficiency, a critical metric as energy consumption becomes a primary concern for the tech industry.

Elon Musk, whose company xAI is among the adopters, summarized the sentiment surrounding the launch: “NVIDIA Rubin will be a rocket engine for AI. If you want to train and deploy frontier models at scale, this is the infrastructure you use.”