Empowering Creators with AI-Driven Precision on Everyday Hardware

- Simplified AI Image Editing: Black Forest Labs’ FLUX.1 Kontext [dev] model enables intuitive, language-based edits to images without complex setups, now optimized via NVIDIA’s NIM microservice for faster, more accessible workflows.

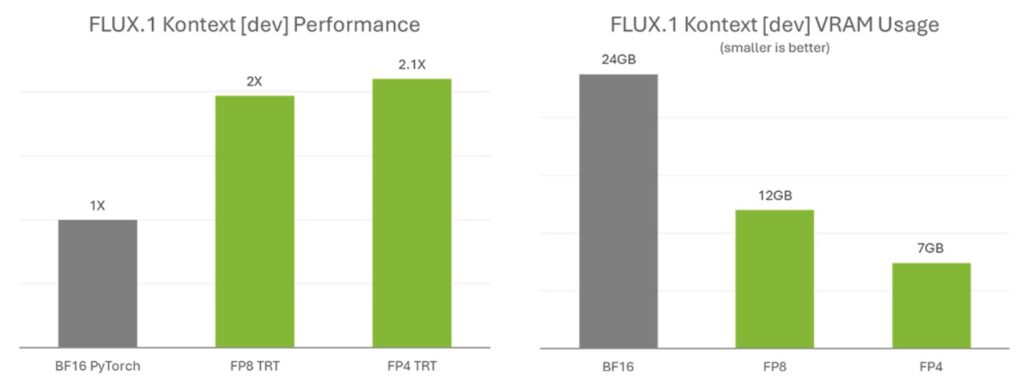

- Performance Boosts for All: Through quantization and TensorRT acceleration, the model shrinks in size and doubles speed on RTX GPUs, democratizing high-end AI tools for enthusiasts and professionals alike.

- Easy Integration and Future Potential: Available for one-click deployment in ComfyUI, this microservice paves the way for broader generative AI adoption, transforming creative processes across industries.

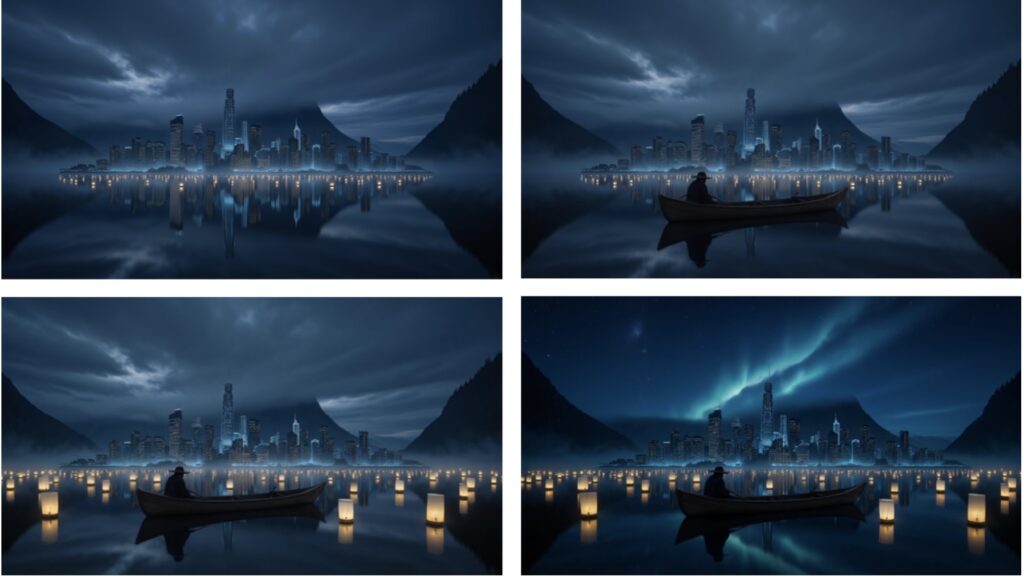

In the rapidly evolving world of generative AI, image editing has long been a domain reserved for experts with powerful hardware and intricate workflows. But that’s changing fast. NVIDIA’s release of FLUX.1 Kontext [dev] as a NIM microservice, in collaboration with Black Forest Labs, is breaking down those barriers. This open-weight model lets users transform images with simple natural language prompts combined with visual references, making it possible to refine details or overhaul entire scenes with remarkable coherence and quality. There is no fine-tuning and no convoluted process: describe what you want, and let the AI handle the rest. Powered by NVIDIA RTX GPUs and TensorRT technology, this tool isn’t just about editing; it’s about unlocking creative potential for everyone, from hobbyists tinkering on their home PCs to professionals streamlining production pipelines.

At its core, FLUX.1 Kontext [dev] stands out for its guided, step-by-step generation process. Unlike traditional models that might produce erratic results, it accepts both text descriptions and image inputs, so edits stay true to the original concept. Imagine starting with a photo of a serene landscape and instructing the AI to “add a vibrant sunset with glowing clouds”: the model evolves the image while maintaining visual consistency. This capability stems from Black Forest Labs’ design, which emphasizes control and precision. NVIDIA’s NIM microservice makes deployment simpler by handling the heavy lifting: curating model variants, adapting inputs and outputs, and quantizing the model to cut VRAM requirements. The original 24GB model shrinks to 12GB in FP8 for GeForce RTX 40 Series GPUs, and to 7GB in FP4 for RTX 50 Series GPUs, where the FP4 variant relies on the SVDQuant method. These optimizations preserve image quality while letting the model run efficiently on consumer RTX AI PCs.
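The size figures above follow roughly from bytes per weight. The sketch below is a back-of-the-envelope estimate, not NVIDIA's accounting: the parameter count of about 12 billion is an assumption that the article does not state, and `weight_gb` is a hypothetical helper introduced only for illustration.

```python
# Rough weight-storage estimate at different numeric precisions.
# ASSUMPTION: about 12 billion parameters (not stated in the article).
PARAMS = 12e9

# Bits used to store one weight in each format.
BITS_PER_WEIGHT = {"BF16": 16, "FP8": 8, "FP4": 4}


def weight_gb(params: float, bits: int) -> float:
    """Return weight storage in decimal gigabytes (1 GB = 1e9 bytes)."""
    return params * bits / 8 / 1e9


if __name__ == "__main__":
    for name, bits in BITS_PER_WEIGHT.items():
        print(f"{name}: ~{weight_gb(PARAMS, bits):.0f} GB")
```

Under that assumption the estimate gives about 24 GB for BF16 and 12 GB for FP8, which matches the quoted sizes. The raw FP4 figure comes out near 6 GB rather than the quoted 7GB; the difference plausibly reflects quantization scales and any components kept at higher precision, which this sketch ignores.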

The real game-changer here is the performance acceleration provided by NVIDIA TensorRT, a framework that taps into the Tensor Cores of RTX GPUs for maximum efficiency. Users can expect over twice the speed compared to running the original BF16 model in PyTorch, turning what used to be sluggish processes into seamless experiences. Previously, such gains were the privilege of AI specialists with deep infrastructure knowledge. Now, with the NIM microservice, even enthusiasts can dive in without a steep learning curve. The model ships as prepackaged, optimized files ready for one-click download through ComfyUI NIM nodes, and it is also hosted on Hugging Face with TensorRT optimizations. This collaboration between NVIDIA and Black Forest Labs not only reduces model size but also broadens accessibility, fostering a new era where generative AI isn’t confined to data centers but thrives on personal devices.

Getting started is straightforward, designed to minimize friction for users eager to experiment. Begin by installing NVIDIA AI Workbench, then grab ComfyUI—a popular interface for AI workflows. From there, add the NIM nodes via the ComfyUI Manager, accept the model licenses on Black Forest Labs’ FLUX.1 Kontext [dev] page on Hugging Face, and hit “Run.” The node automates the rest, preparing workflows and downloading necessary models. This ease of use extends to the broader NIM ecosystem, which includes optimized microservices for popular community models, all tailored for NVIDIA GeForce RTX and RTX PRO GPUs. For those looking to explore further, resources abound on GitHub and build.nvidia.com, offering a gateway to building custom AI applications.
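Before installing, it can help to check which quantized variant a given GPU is likely to fit. The following is a minimal sketch using only the sizes and GPU pairings quoted in this article; `pick_variant`, the `PREFERRED` table, and the 2 GB headroom default are hypothetical illustrations, not part of the NIM nodes or any NVIDIA API.

```python
from typing import Optional

# Format and approximate size (GB) per GPU series, as quoted in the article.
PREFERRED = {
    "40": ("FP8", 12.0),  # GeForce RTX 40 Series
    "50": ("FP4", 7.0),   # GeForce RTX 50 Series
}


def pick_variant(series: str, vram_gb: float,
                 headroom_gb: float = 2.0) -> Optional[str]:
    """Return the suggested format, or None if it is unlikely to fit.

    headroom_gb is an ASSUMED allowance for activations and other
    runtime memory; the article gives no such figure.
    """
    choice = PREFERRED.get(series)
    if choice is None:
        return None  # series not covered by the article
    fmt, size_gb = choice
    return fmt if vram_gb >= size_gb + headroom_gb else None


if __name__ == "__main__":
    print(pick_variant("40", 16.0))
    print(pick_variant("50", 8.0))
```

With these assumptions, a 16 GB RTX 40 Series card is matched to FP8, while an 8 GB RTX 50 Series card falls short of the 7GB model plus headroom and returns `None`. Real requirements depend on resolution and workflow, so treat the result as a rough guide.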

From a broader perspective, the FLUX.1 Kontext [dev] NIM microservice represents a pivotal shift in the democratization of AI. In an industry where generative tools are exploding—from art creation to content marketing and beyond—this release lowers the entry barriers, enabling more diverse voices to participate. It aligns with the growing trend of edge AI, where processing happens on local devices rather than distant clouds, enhancing privacy, speed, and cost-efficiency. As NVIDIA continues to push boundaries with technologies like Blackwell architecture and advanced quantization, we can anticipate even more innovations that blend creativity with cutting-edge hardware. Whether you’re an artist refining digital masterpieces or a developer integrating AI into apps, this tool invites you to harness the power of generative AI in ways that feel natural, powerful, and profoundly accessible. The future of image editing isn’t just here—it’s downloadable, optimizable, and ready to transform your ideas into reality.